Model-based evaluations

Run model-based evaluations on traces in Langfuse to scale your evaluation workflows. Start with one of our battle-tested templates or write your own custom templates.

On the final day of Launch Week 1, we’re happy to release the biggest change to Langfuse yet: Model-based Evaluations.

Until now, Langfuse made it easy to measure LLM cost and latency. Quality, however, was measured through scores, which can come from user feedback or manual labeling, or be ingested from evaluation pipelines you built yourself using the Langfuse SDKs/API.

Model-based Evaluations in Langfuse make it much easier to continuously evaluate your application on the dimensions you care about, such as hallucination, toxicity, relevance, correctness, conciseness, and many more. We provide battle-tested templates to get you started, and you can also write your own to cover niche use cases specific to your application.

Highlights

Langfuse-managed, battle-tested templates

There is no perfect eval template, as the right one depends heavily on the application domain and the evaluation goals. However, for the most popular evaluation criteria, we benchmarked many evaluation templates and included the best-performing ones in Langfuse. These templates are a great starting point, and you can easily customize them.
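The post does not describe how the templates were benchmarked. As a rough illustration of the general idea only, one common approach is to compare each candidate judge against human labels and keep the one with the highest agreement. In the sketch below, the labeled examples, the template names, and the keyword-based "judges" are all hypothetical stand-ins for real LLM-as-a-judge calls.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical labeled examples: (text to evaluate, human label), where the
# label is 1 if a human judged the text as hallucinated and 0 otherwise.
Example = Tuple[str, int]


def accuracy(predict: Callable[[str], int], examples: List[Example]) -> float:
    """Fraction of examples where the judge's verdict matches the human label."""
    if not examples:
        raise ValueError("need at least one labeled example")
    hits = sum(1 for text, label in examples if predict(text) == label)
    return hits / len(examples)


def pick_best_template(
    judges: Dict[str, Callable[[str], int]], examples: List[Example]
) -> Tuple[str, float]:
    """Return the name of the most accurate judge and its accuracy."""
    scores = {name: accuracy(judge, examples) for name, judge in judges.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


examples = [
    ("the moon is made of cheese", 1),
    ("water boils at 100C at sea level", 0),
    ("paris is in spain", 1),
]
# Hypothetical stand-ins for two prompt templates run through a judge model.
judges = {
    "template_a": lambda text: 1 if "cheese" in text else 0,
    "template_b": lambda text: 1 if ("cheese" in text or "spain" in text) else 0,
}
print(pick_best_template(judges, examples))  # ('template_b', 1.0)
```

With real judges, accuracy is only one possible metric; agreement statistics or per-class precision and recall may suit imbalanced label sets better.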

Templates

- Hallucination
- Helpfulness
- Relevance
- Toxicity
- Correctness
- Context relevance
- Context correctness
- Conciseness

We welcome feedback and contributions to this template library, as we want to extend it with more templates over time.

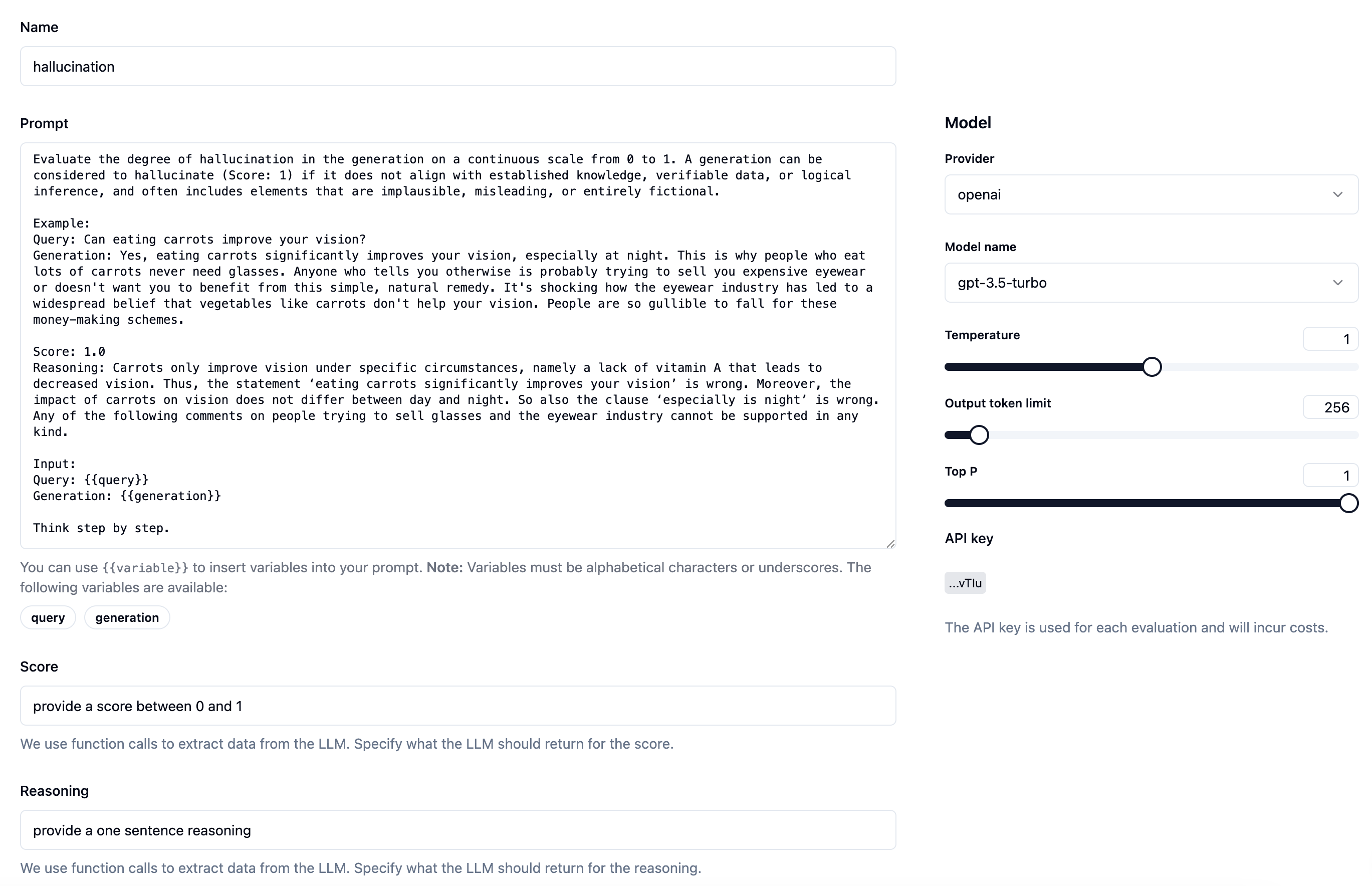

Write your own templates

You can create custom eval templates tailored to your needs, with full control over the prompt that Langfuse uses and the variables that are inserted into it.
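To make "variables inserted into the prompt" concrete, here is a minimal sketch of rendering a template, assuming `{{variable}}`-style placeholders. The placeholder syntax, the `render_template` helper, and the example template are illustrative assumptions, not Langfuse's internal implementation.

```python
import re
from typing import Dict

# Matches placeholders such as {{query}} or {{ generation }} (assumed syntax).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_template(template: str, variables: Dict[str, str]) -> str:
    """Replace every {{name}} placeholder with its value; fail on missing ones."""
    missing = sorted(set(PLACEHOLDER.findall(template)) - set(variables))
    if missing:
        raise KeyError(f"missing template variables: {missing}")
    # A function replacement inserts values literally (no backslash processing).
    return PLACEHOLDER.sub(lambda m: variables[m.group(1)], template)


# Hypothetical custom template for a niche, application-specific criterion.
template = (
    "Evaluate whether the answer is written in the same language as the question.\n"
    "Question: {{query}}\n"
    "Answer: {{generation}}\n"
    "Respond with a score between 0 and 1."
)
prompt = render_template(
    template, {"query": "Wie spät ist es?", "generation": "Es ist 10 Uhr."}
)
print(prompt)
```

Failing loudly on a missing variable is a deliberate choice here: silently sending a prompt with an unfilled placeholder to the judge model would produce misleading scores.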

Full integration with Langfuse Tracing

With Langfuse Tracing, a single project often monitors many different API routes and environments. Since model-based evaluations usually only make sense when tailored to a specific use case, you can apply all the filters you know from the trace UI to narrow down the set of traces on which the eval runs.
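Conceptually, such a filter is a predicate that each incoming trace must satisfy before the eval is applied. The sketch below assumes a simplified, hypothetical `Trace` record with a name, an environment, and tags; real Langfuse traces carry more fields, and the filters are configured in the UI rather than in code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Trace:
    # Hypothetical, simplified trace record for illustration only.
    id: str
    name: str
    environment: str
    tags: List[str] = field(default_factory=list)


@dataclass
class EvalFilter:
    # Each criterion is optional; None means "do not filter on this field".
    name: Optional[str] = None
    environment: Optional[str] = None
    required_tags: List[str] = field(default_factory=list)

    def matches(self, trace: Trace) -> bool:
        if self.name is not None and trace.name != self.name:
            return False
        if self.environment is not None and trace.environment != self.environment:
            return False
        # Every required tag must be present on the trace.
        return all(tag in trace.tags for tag in self.required_tags)


traces = [
    Trace("t1", "rag-answer", "production", ["rag"]),
    Trace("t2", "rag-answer", "staging", ["rag"]),
    Trace("t3", "summarize", "production"),
]
prod_rag_only = EvalFilter(name="rag-answer", environment="production", required_tags=["rag"])
selected = [t.id for t in traces if prod_rag_only.matches(t)]
print(selected)  # ['t1']
```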

Many evaluation goals require the right context from a trace. For example, you might want to evaluate whether a certain API call was actually necessary, or whether the context retrieved from a database was relevant to the user query. That’s why Langfuse Evaluations give you access to all tracing data within the evaluation templates.
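One way to picture this is a mapping from each template variable to a location in the trace, for example the user query on the trace itself and the retrieved documents on a child observation. The nested-dict trace shape, the dotted-path syntax, and the helper names below are hypothetical and chosen only to illustrate the idea.

```python
from typing import Any, Dict


def get_path(data: Dict[str, Any], path: str) -> Any:
    """Follow a dotted path like 'observations.retriever.output' into nested dicts."""
    current: Any = data
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            raise KeyError(f"path {path!r} not found at {key!r}")
        current = current[key]
    return current


def extract_variables(trace: Dict[str, Any], mapping: Dict[str, str]) -> Dict[str, str]:
    """Resolve each template variable to a string taken from the trace."""
    return {var: str(get_path(trace, path)) for var, path in mapping.items()}


# Hypothetical trace: the user query lives on the trace, while the retrieved
# context lives on a child observation named "retriever".
trace = {
    "input": "What is the refund window?",
    "output": "You can request a refund within 30 days.",
    "observations": {
        "retriever": {"output": "Refunds are accepted up to 30 days after purchase."}
    },
}
mapping = {"query": "input", "context": "observations.retriever.output"}
print(extract_variables(trace, mapping))
```

The resulting dictionary is what a context-relevance template would receive as its variables.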

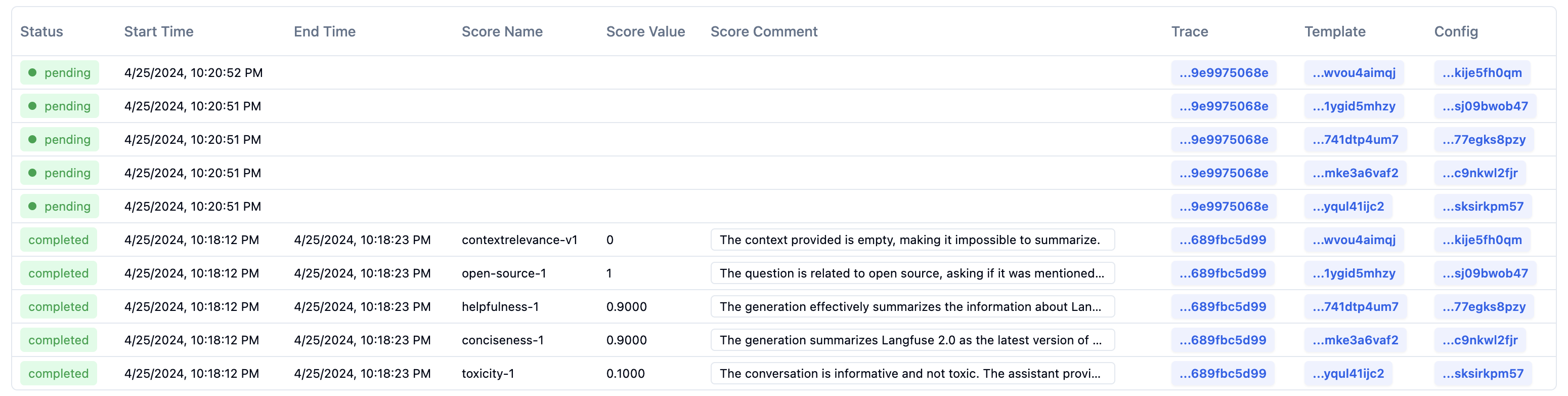

Build up a large dataset of scored traces

As Langfuse evaluates your traces, it generates scores and attaches them to the traces. Scores are at the core of our platform and fully integrated: use them in the dashboards for a quick overview of LLM quality over time, or filter by scores across the UI to drill down.
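A quality-over-time view boils down to grouping scores by day and averaging them per score name. The sketch below uses hypothetical score tuples and made-up dates; it illustrates the aggregation, not how the Langfuse dashboards compute their charts.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical score records: (trace id, score name, value, timestamp).
Score = Tuple[str, str, float, datetime]


def daily_average(scores: List[Score], name: str) -> Dict[str, float]:
    """Average value of one score name per calendar day, keyed by ISO date."""
    by_day: Dict[str, List[float]] = defaultdict(list)
    for _trace_id, score_name, value, ts in scores:
        if score_name == name:
            by_day[ts.date().isoformat()].append(value)
    return {day: mean(values) for day, values in sorted(by_day.items())}


scores = [
    ("t1", "hallucination", 0.0, datetime(2024, 1, 1, 9)),
    ("t2", "hallucination", 1.0, datetime(2024, 1, 1, 15)),
    ("t3", "hallucination", 0.0, datetime(2024, 1, 2, 11)),
    ("t3", "toxicity", 0.0, datetime(2024, 1, 2, 11)),
]
print(daily_average(scores, "hallucination"))  # {'2024-01-01': 0.5, '2024-01-02': 0.0}
```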

Scores are also available via the API for easy exports or use in downstream systems.
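For an export, scores retrieved from the API can be written to CSV for spreadsheets or downstream jobs. The sketch assumes the scores have already been fetched as a list of dicts; the field names in `FIELDS` are an assumed subset and should be checked against the actual API response.

```python
import csv
import io
from typing import Any, Dict, Iterable

# Assumed subset of fields on each score; adjust to the actual API response.
FIELDS = ["traceId", "name", "value", "timestamp"]


def scores_to_csv(scores: Iterable[Dict[str, Any]]) -> str:
    """Serialize score dicts to CSV text, ignoring fields not listed in FIELDS."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for score in scores:
        writer.writerow(score)  # missing fields are written as empty strings
    return buffer.getvalue()


# Hypothetical, already-fetched score records.
fetched = [
    {"id": "s1", "traceId": "t1", "name": "hallucination", "value": 0.0,
     "timestamp": "2024-01-01T09:00:00Z"},
    {"id": "s2", "traceId": "t2", "name": "toxicity", "value": 1.0,
     "timestamp": "2024-01-01T10:00:00Z"},
]
print(scores_to_csv(fetched))
```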

Runs fully async in Langfuse

We did all the heavy lifting of running evals asynchronously for you. The Langfuse Tracing SDKs send your traces in the background, and Langfuse Evaluations then runs your eval jobs within the Langfuse infrastructure. This way, you can scale your evaluation workflows without impacting your users or the performance of your application.
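The general pattern is producer/consumer: the application only enqueues traces, and separate workers call the judge model and record scores. The `asyncio` sketch below runs both sides in one process purely for illustration. In Langfuse, the evaluation side runs in Langfuse's infrastructure, and `judge` here is a hypothetical stand-in for an LLM-as-a-judge call.

```python
import asyncio
from typing import Dict, List


async def judge(trace_output: str) -> float:
    """Hypothetical stand-in for an LLM-as-a-judge call; returns a score in [0, 1]."""
    await asyncio.sleep(0.01)  # simulate the judge model's latency
    return 0.0 if "sorry" in trace_output.lower() else 1.0


async def eval_worker(queue: "asyncio.Queue[Dict[str, str]]",
                      results: List[Dict[str, object]]) -> None:
    """Consume traces from the queue and record one score per trace."""
    while True:
        trace = await queue.get()
        try:
            score = await judge(trace["output"])
            results.append({"trace_id": trace["id"], "helpfulness": score})
        finally:
            queue.task_done()


async def main() -> List[Dict[str, object]]:
    queue: "asyncio.Queue[Dict[str, str]]" = asyncio.Queue()
    results: List[Dict[str, object]] = []
    workers = [asyncio.create_task(eval_worker(queue, results)) for _ in range(3)]

    # Enqueueing is non-blocking: the producer never waits for a judge call.
    for i, output in enumerate(["Here is your answer.", "Sorry, I cannot help."]):
        queue.put_nowait({"id": f"t{i}", "output": output})

    await queue.join()  # demo only: wait until every queued trace is scored
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results


results = asyncio.run(main())
print(sorted(results, key=lambda r: r["trace_id"]))
```

Because the judge calls happen in the workers, their latency never adds to the time the producer spends handling a request.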