Blog

The latest updates from Langfuse. See Changelog for more product updates.

Using Agent Skills to Automatically Improve your Prompts

Use the Langfuse skill for Claude Code to analyze trace feedback and iteratively improve your prompts. Read more →

2026/02/16 by Lotte

Will you be my CLI? Making Agents fall in love with Langfuse.

How we optimized Langfuse for AI agents with CLI, Skills, markdown endpoints, public RAG endpoint, llms.txt, and MCP servers. A love letter to agents that need great developer tools. Read more →

2026/02/14 by Felix, Lotte, Nimar, Marc

Langfuse joins ClickHouse

Our goal continues to be building the best LLM engineering platform. Read more →

2026/01/16 by Clemens, Marc, Max

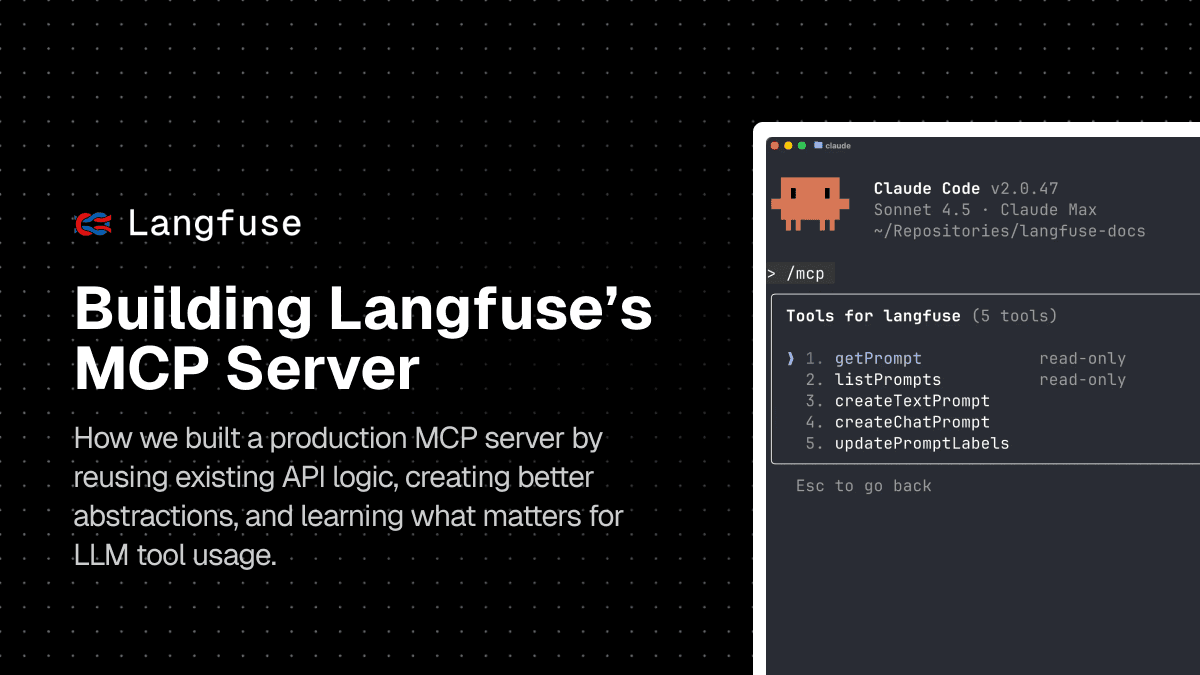

Building Langfuse's MCP Server: Code Reuse and Developer Experience

How we built a production MCP server by reusing existing API logic, creating better abstractions, and learning what matters for LLM tool usage. Read more →

2025/12/09 by Michael

Langfuse November Update

Agent Tracing, Model Pricing Tiers in Cost Tracking, Score Analytics, Langfuse MCP Server & more. Read more →

2025/11/30 by Marc

Vibe Coding a Custom Annotation UI

A practical guide to building a customized annotation UI in minutes using Langfuse. Read more →

2025/11/25 by Abdallah

Incident Report for Nov 18, 2025

Postmortem report for the outage which caused multiple hours of downtime. Read more →

2025/11/20 by Max

Evaluating LLM Applications: A Comprehensive Roadmap

A practical guide to systematically evaluating LLM applications through observability, error analysis, testing, synthetic datasets, and experiments. Read more →

2025/11/12 by Abdallah

Systematic Evaluation of AI Agents

A practical guide to running and interpreting experiments in Langfuse. Read more →

2025/11/06 by Marlies

Langfuse Launch Week 4

A week of new feature releases focused on collaboratively tracing, evaluating, and iterating on agents. Read more →

2025/10/29 by Clemens, Marc, Max

RAG Observability and Evals

A practical guide to RAG observability and evals. Read more →

2025/10/28 by Abdallah

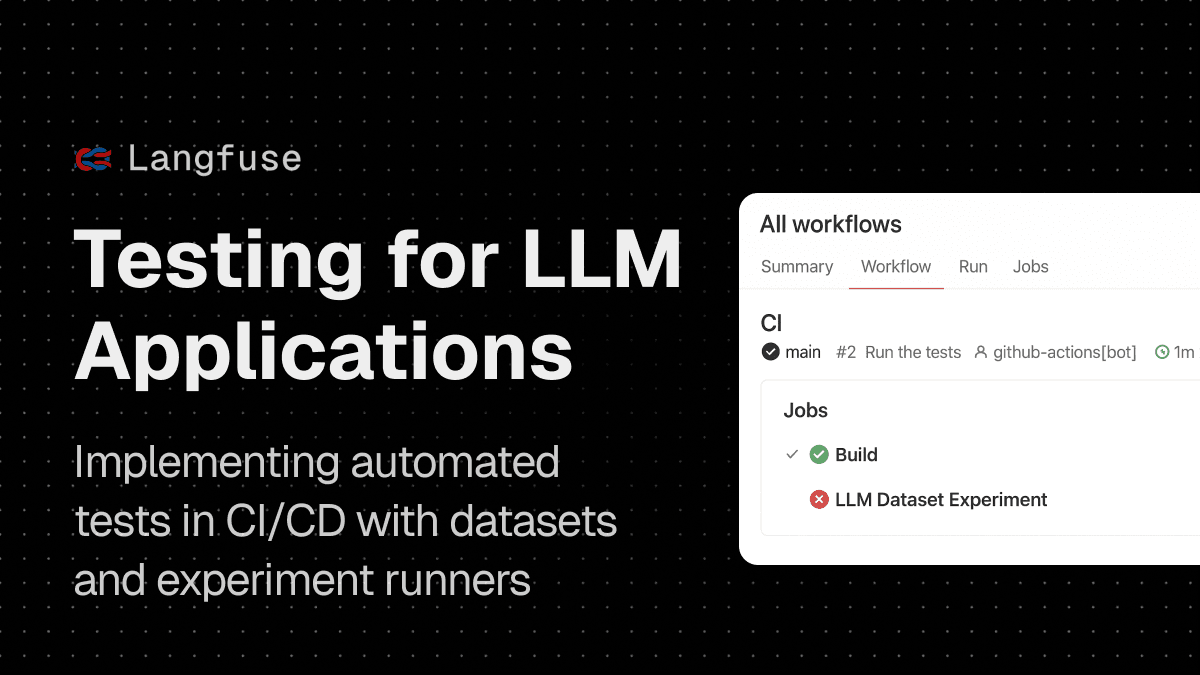

LLM Testing: A Practical Guide to Automated Testing for LLM Applications

Learn how to test LLM applications with automated evaluation, datasets, and experiment runners. A practical guide to LLM testing strategies. Read more →

2025/10/21 by Abdallah

State of LLMs on the Application Layer

We analyzed model adoption across 20,000+ organizations building LLM applications and agents over the last 12 months. Here is what we found. Read more →

2025/10/13 by felixkrauth

Evaluating Multi-Turn Conversations

A practical guide to the different ways to evaluate multi-turn conversation agents (a.k.a. chatbots). Read more →

2025/10/09 by Abdallah

Langfuse September Update

Langfuse September Update: AI Filters, Experiment Runner SDK, Structured Outputs for Experiments, TypeScript SDK v4 GA, price drop, and more. Read more →

2025/09/30 by Marc

Automated Evaluations of LLM Applications

A practical guide to setting up automated evaluations for LLM applications and AI agents using Langfuse. Read more →

2025/09/12 by Jannik

Langfuse August Update

Langfuse August Update: New Observation Types, End-to-End Walkthrough Videos, Agent Demo Project, and more. Read more →

2025/08/31 by Marc

Error Analysis to Evaluate LLM Applications

A practical guide to identifying, categorizing, and analyzing failure modes in LLM applications using Langfuse. Read more →

2025/08/29 by Jannik

Evaluating Model Performance Across Clouds

This guide shows you how to use an automated benchmarking script in Shadeform that will help you measure self-hosted model performance across clouds. Read more →

2025/08/13 by Jannik

Langfuse July Update

Langfuse July Update: Playground Side-by-Side Comparison, real-time alerts (Webhooks & Slack) for prompt changes, usage alerts on Langfuse Cloud, and one-click remote experiments for non-technical teammates. Read more →

2025/07/31 by Marc

Langfuse June Update

Langfuse June Update: Lower prices across every Cloud plan, long-awaited features, team growth & more! Read more →

2025/06/30 by Marc

Doubling Down on Open Source

Open Sourcing all Product Features in Langfuse under the MIT license. Read more →

2025/06/04 by Clemens, Marc, Max

Langfuse May Update

Langfuse May Update: Python SDK v3 GA, Launch Week #3 Recap, Custom Dashboards & More! Read more →

2025/05/31 by Marc

How we Built Scalable & Customizable Dashboards

A technical deep dive into the core abstractions and architecture. Read more →

2025/05/21 by Steffen

Langfuse Launch Week #3

A new feature launch every day. A week dedicated to deeper insights and seamless integrations. Read more →

2025/05/19 by Clemens, Marc, Max

Langfuse April Update

Langfuse April Update: 10,000 GitHub Stars, Langfuse User Meetup, Q2 Roadmap Highlights & More! Read more →

2025/04/30 by Marc

How we use LLMs to build and scale Langfuse

Building a fast-growing open-source project with a small team is fun. These are some of the ways we use LLMs to accelerate. Read more →

2025/04/24 by Marc

Langfuse March Update

Langfuse March Update: New UI, Prompt Composability, Environments & More! Read more →

2025/03/31 by Marc

Comparing Open-Source AI Agent Frameworks

Get an overview of the leading open-source AI agent frameworks: LangGraph, OpenAI Agents SDK, Google ADK, Smolagents, CrewAI, AutoGen, Semantic Kernel, Strands Agents, Pydantic AI, Agno, Mastra, and Microsoft Agent Framework. Compare features, learn when to use each, and see how to track agent behavior with Langfuse. Read more →

2025/03/19 by Jannik

AI Agent Observability, Tracing & Evaluation with Langfuse

Trace, monitor, evaluate, and test AI agents in production. Learn about agent observability strategies, evaluation techniques, and how to use Langfuse with LangGraph, OpenAI Agents, Pydantic AI, CrewAI, and more. Read more →

2025/03/16 by Jannik

🤗 Hugging Face and 🪢 Langfuse: 5 Ways to use them Together

Hugging Face and Langfuse are popular platforms to develop and run LLM applications. This guide shows you 5 ways to use both platforms together to simplify open source LLM development. Read more →

2025/03/13 by Jannik

LLM Evaluation 101: Best Practices, Challenges & Proven Techniques

Explore proven strategies for LLM evaluation, from offline to online benchmarking. This post briefs you on the state of the art. Read more →

2025/03/04 by Jannik

Langfuse February Update

We've had a busy February at Langfuse, filled with new integrations, features, and announcements. Read more →

2025/02/28 by Marc

The Agent Deep Dive: David Zhang’s Open Deep Research

Exploring Zhang’s open-source Deep Research. We look at the prompting, search executions, and report creation, and compare it to both OpenAI’s and Perplexity’s Deep Research solutions. Read more →

2025/02/20 by Jannik

Evaluating and Monitoring Voice AI Agents

Learn how to use Langfuse in your Voice AI development workflow for comprehensive voice agent testing and optimization. Read more →

2025/01/22 by Marc

From Zero to Scale: Langfuse's Infrastructure Evolution

A deep dive into how Langfuse's infrastructure evolved from a simple prototype to a scalable observability platform. Read more →

2024/12/17 by Steffen, Max

Langfuse Launch Week #2

A new feature launch every day. Dedicated to unlocking model capabilities and integrating Langfuse tightly into your development loop. Read more →

2024/11/17 by Clemens, Marc, Max

LLM Product Development for Product Managers

Learnings from working with hundreds of teams on how to build great AI products. Read more →

2024/11/13 by Marc

Langfuse is the #1 most used Open Source LLMOps Product

Langfuse is the leading Open Source LLMOps product, trusted by thousands of teams for its robust tracing and evaluation, seamless integrations, and extensive community. Read more →

2024/11/05 by Marc, Clemens, Max

OpenTelemetry (OTel) for LLM Observability

Explore the challenges of LLM observability and the current state of using OpenTelemetry (OTel) for standardized instrumentation. Read more →

2024/10/14 by Marc

Observability in Multi-Step LLM Systems

Optimize multi-step LLM systems with advanced observability, feedback, and testing strategies for better performance and reliability. Read more →

2024/10/07 by Marc

Should you use an LLM Proxy to Build your Application?

We go over the advantages and disadvantages of using an LLM proxy. Read more →

2024/09/23 by Clemens, Marc

Dify x Langfuse: Built-in observability & analytics

The popular LLM development framework Dify.AI now integrates with Langfuse out of the box and in one click. Read more →

2024/07/01 by Clemens

Monitoring LLM Security & Reducing LLM Risks

LLM security requires effective run-time checks and ex-post monitoring and evaluation. Learn how to use Langfuse together with popular security libraries to prevent common security risks. Read more →

2024/06/06 by Lydia You

Haystack <> Langfuse Integration

Easily monitor and trace your Haystack pipelines with this new Langfuse integration. Read more →

2024/05/16 by Lydia

Introducing Langfuse 2.0: the LLM Engineering Platform

Extending Langfuse’s core tracing with evaluations, prompt management, LLM playground and datasets. Read more →

2024/04/26 by Clemens

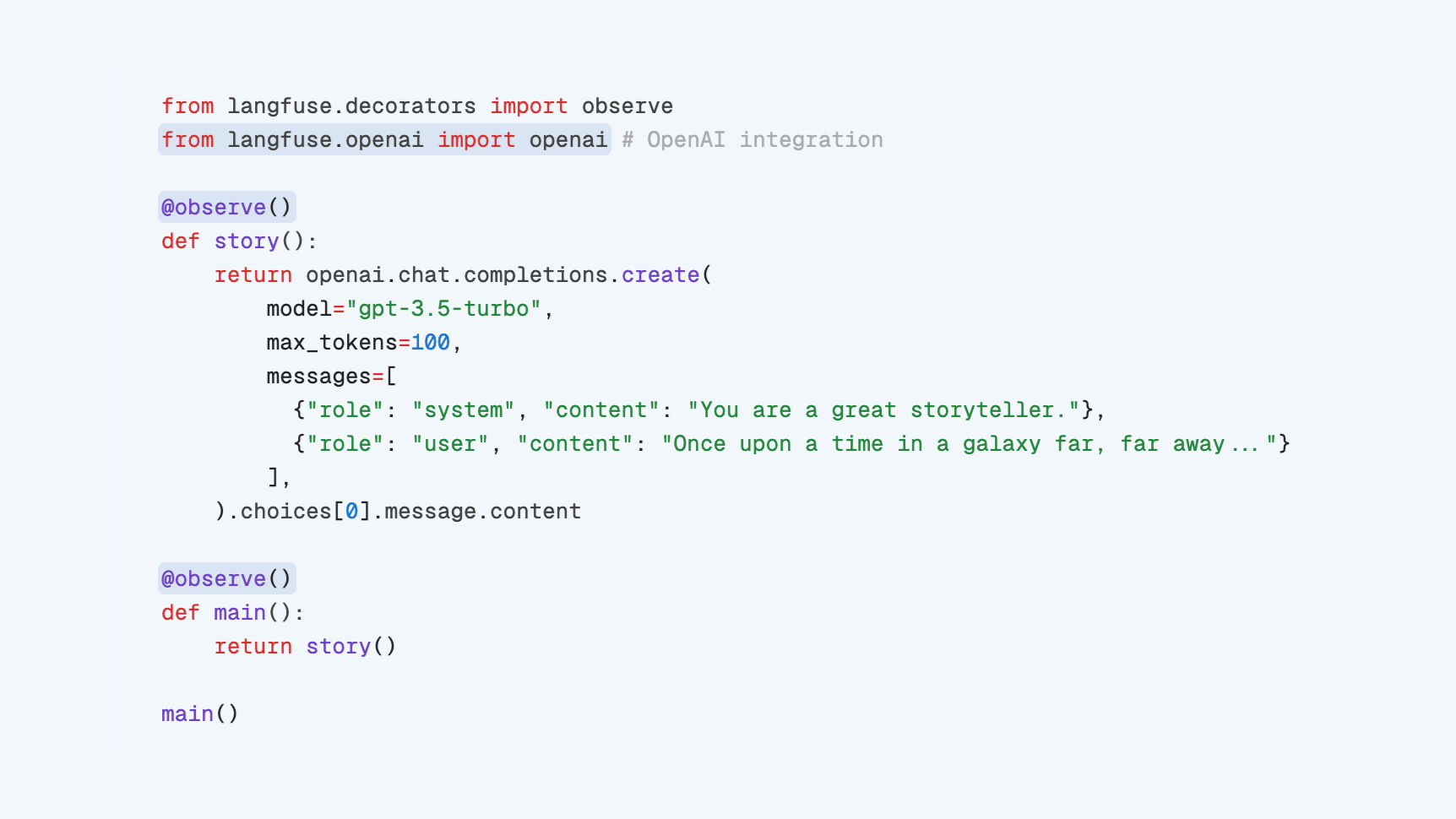

Trace complex LLM applications with the Langfuse decorator (Python)

When building RAG or agents, many LLM calls and non-LLM inputs feed into the final output. The Langfuse decorator allows you to trace and evaluate holistically. Read more →

2024/04/24 by Marc

Langfuse Launch Week #1

Unveiling Langfuse 2.0 in a week of releases. Read more →

2024/04/19 by Clemens

Native LlamaIndex Integration

Context retrieval is a mainstay in LLM engineering. This latest integration brings Langfuse's observability to LlamaIndex applications for simple tracing, monitoring and evaluation of RAG applications. Read more →

2024/03/06 by Clemens

Langfuse raises $4M

We're thrilled to announce that Langfuse, the open source observability and analytics solution for LLM applications, has raised a $4M seed round from Lightspeed Venture Partners, La Famiglia and Y Combinator. Read more →

2023/11/07 by Marc

Langfuse Update — October 2023

Improved dashboard, OpenAI integration, RAG evals, doubled API speed, simplified Docker deployments. Read more →

2023/11/01 by Marc

Langfuse Update — September 2023

Model-based evals, datasets, core improvements (query engine, complex filters, exports, sharing) and new integrations (Langflow, Flowise, LiteLLM). Read more →

2023/10/02 by Marc

Langflow x Langfuse

Build with no code in Langflow, observe and analyze in Langfuse. Read more →

2023/09/21 by Max

Langfuse Update — August 2023

Improved data ingestion, integrations and UI. Read more →

2023/09/06 by Max

Langfuse Update — July 2023

Async SDKs, automated token counts, new Trace UI, LangChain integration, public GET API, new reports, ... Read more →

2023/08/02 by Marc

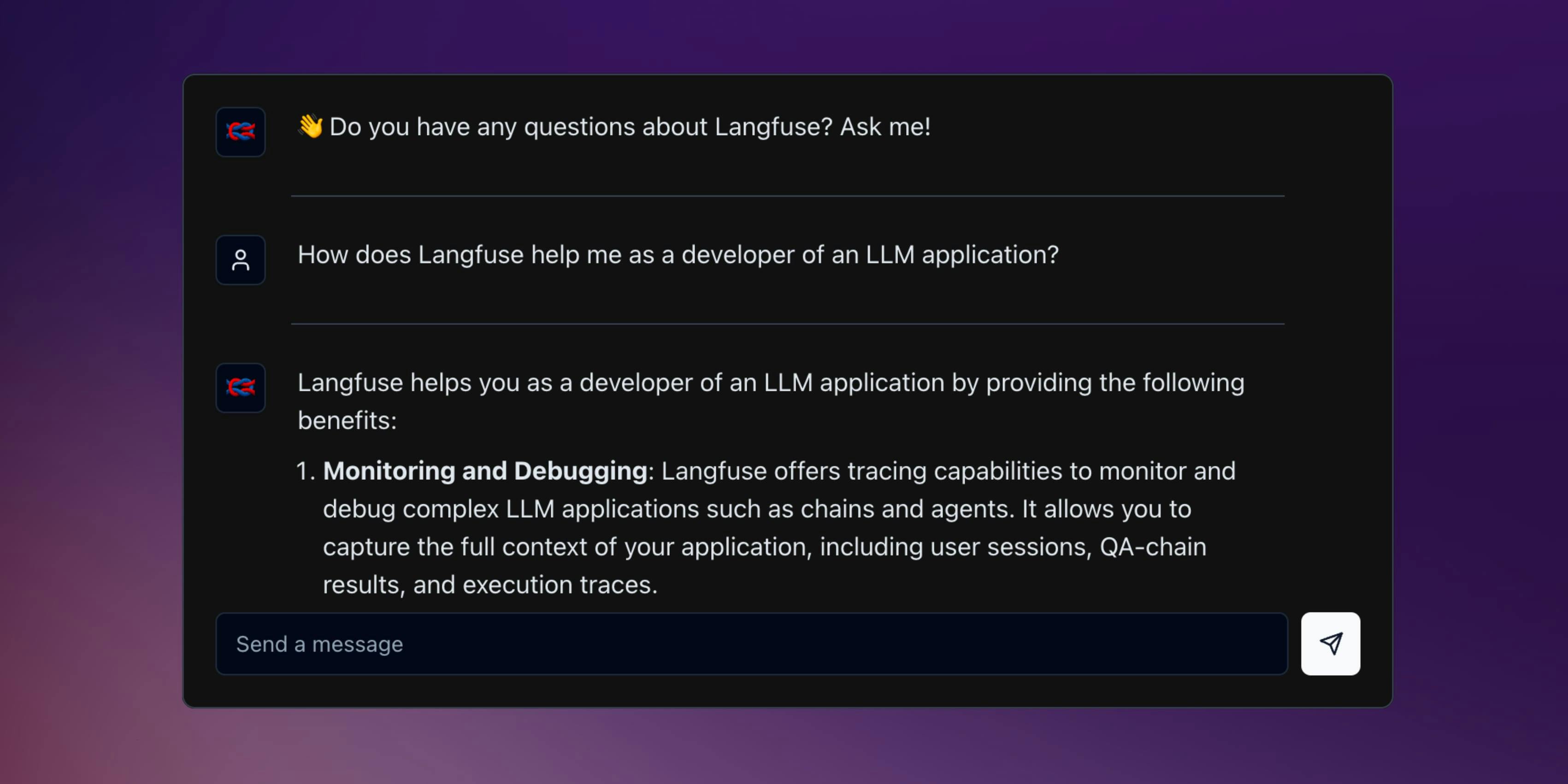

🤖 Q&A Chatbot for Langfuse Docs

A summary of how we built a Q&A chatbot for the Langfuse docs and how Langfuse helps us improve it. Read more →

2023/07/31 by Marc

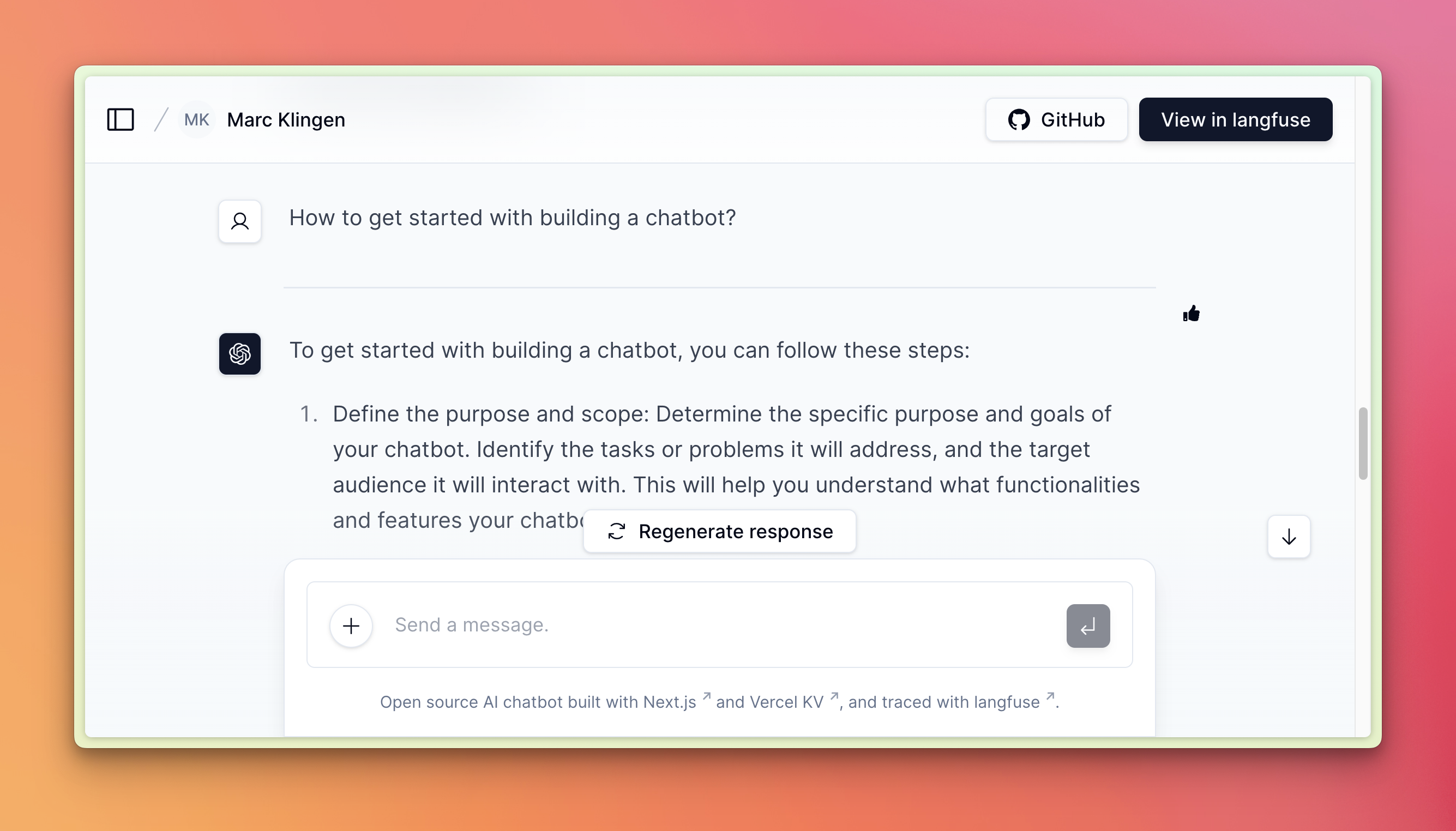

LLM Chatbot Showcase

We integrated Langfuse with the Vercel AI Chatbot. This example includes streaming responses, automated token counts, collection of user feedback in the frontend, grouping of threads into a single trace, and more. Read more →

2023/07/21 by Marc

Launch YC

Cross-post of our Launch YC (W23) post which explains why we're building Langfuse. Read more →

2023/07/19 by Clemens