Will you be my CLI? Making Agents fall in love with Langfuse.

How we optimized Langfuse for AI agents with a CLI, Skills, markdown endpoints, a public RAG endpoint, llms.txt, and MCP servers. A love letter to agents that need great developer tools.

Valentine’s Day is here, and while most people are thinking about flowers and chocolates, we’ve been thinking about something more romantic: making AI agents fall in love with Langfuse.

Over the past months, we’ve shipped a series of features designed specifically to make Langfuse the perfect companion for AI agents, whether they’re coding in your IDE, running in the terminal, or helping you debug your LLM applications.

This post walks through our “agent love language”: the technical decisions and implementations that make Langfuse easy for agents to discover, understand, and integrate with.

Agents Need Love Too

AI agents are increasingly doing real work: writing code, debugging applications, searching documentation, and even building entire features. But here’s the thing: agents are only as good as the tools they can use.

When an agent encounters our product, it needs to quickly discover, understand, and interact with our website, docs, and APIs.

We realized that if we wanted agents to successfully integrate Langfuse into codebases, we needed to optimize for these needs.

This is how we try to be a good catch for agents:

- Skills - Agent Skills that offer context and structured workflows for agents

- Langfuse CLI - Together with Skills, the CLI opens up all of Langfuse to be used by agents

- Markdown Endpoints - Docs as raw markdown, not just HTML, giving agents faster and better structured access to our documentation

- Public RAG Endpoint - RAG endpoint available to everyone via public API, letting agents search through all indexed docs and GitHub issues/discussions

- llms.txt - A machine-readable documentation index, giving agents a high-level overview and links to detailed documentation

- Docs MCP Server - Docs search and access for agents that prefer MCP over Skills/CLI

- Authenticated MCP Server - For agents that want to use Prompts from Langfuse Prompt Management, we built an authenticated MCP server

Let’s dive into each one.

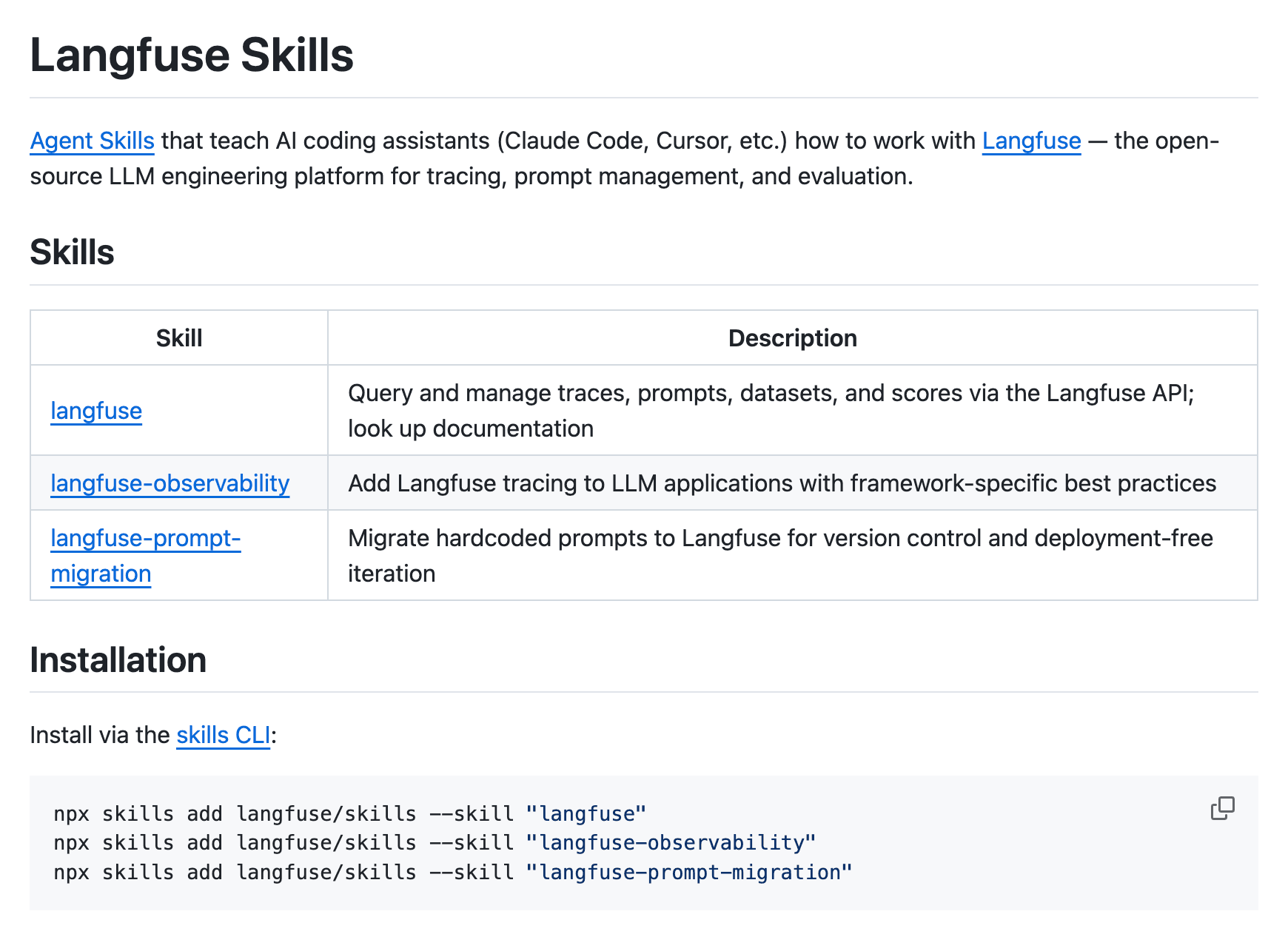

Skills

How can agents learn not just what Langfuse is, but how to actually use it in common scenarios?

Agent Skills, especially in combination with a CLI (see more below), are the most effective pattern for this. We created a skills repo with structured workflows for the most common Langfuse integration tasks.

We’ve published three Langfuse-specific skills to start with, and more will follow.

What are Skills?

Skills follow the open Agent Skills standard. Each skill is a self-contained folder with a SKILL.md entrypoint that combines YAML frontmatter (metadata and trigger conditions) with markdown instructions. Skills can optionally bundle supporting files like reference docs, templates, and executable scripts. They work across Claude Code, Cursor, and other compatible AI coding agents. (For a deep dive, see this guide to building skills.)

How They Work

Skills use a progressive disclosure model: the YAML frontmatter (name, description, trigger phrases) is always loaded into the agent’s context so it knows when a skill is relevant, but the full instructions are only loaded on demand. This keeps context usage low while giving agents access to specialized knowledge. Unlike documentation that tells you what is possible, skills provide step-by-step workflows with decision trees, error handling, and best practices — they tell agents how to accomplish a specific goal. Multiple skills can be loaded simultaneously, and they compose well with CLI tools and MCP servers.
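A minimal TypeScript sketch of progressive disclosure, assuming a SKILL.md whose frontmatter consists of simple single-line `key: value` pairs between `---` lines. The names `SkillSummary`, `splitSkill`, `readSummary`, and `loadInstructions` are hypothetical; real agent runtimes use a full YAML parser and their own loading logic.

```typescript
// Hypothetical types and helpers for illustration; not part of any agent runtime.
interface SkillSummary {
  name: string;
  description: string;
}

// Split a SKILL.md string into its frontmatter lines and its markdown body.
function splitSkill(source: string): { frontmatter: string[]; body: string } {
  const lines = source.split("\n");
  if ((lines[0] || "").trim() !== "---") {
    return { frontmatter: [], body: source };
  }
  // Find the closing `---` delimiter after the opening one.
  let end = -1;
  for (let i = 1; i < lines.length; i++) {
    if (lines[i].trim() === "---") {
      end = i;
      break;
    }
  }
  if (end === -1) {
    return { frontmatter: [], body: source };
  }
  return {
    frontmatter: lines.slice(1, end),
    body: lines.slice(end + 1).join("\n").trim(),
  };
}

// Cheap step, always in context: reads only simple `key: value` pairs.
function readSummary(source: string): SkillSummary {
  const fields: { [key: string]: string } = {};
  for (const line of splitSkill(source).frontmatter) {
    const colon = line.indexOf(":");
    if (colon > 0) {
      fields[line.slice(0, colon).trim()] = line.slice(colon + 1).trim();
    }
  }
  return {
    name: fields["name"] || "",
    description: fields["description"] || "",
  };
}

// Expensive step: only called once the agent decides the skill is relevant.
function loadInstructions(source: string): string {
  return splitSkill(source).body;
}

const skillFile = [
  "---",
  "name: langfuse",
  "description: Interact with Langfuse and access its documentation.",
  "---",
  "# Langfuse",
  "",
  "Two core capabilities: ...",
].join("\n");

const summary = readSummary(skillFile);
console.log(summary.name); // langfuse
console.log(loadInstructions(skillFile).split("\n")[0]); // # Langfuse
```

Only the small summary has to stay in context for every skill; the body is read when a task actually matches the description.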

Here’s an excerpt from the main Langfuse skill showing how the skill is structured and how it guides agents through the different capabilities:

---

name: langfuse

description: Interact with Langfuse and access its documentation. Use when needing to (1) query or modify Langfuse data programmatically via the CLI — traces, prompts, datasets, scores, sessions, and any other API resource, (2) look up Langfuse documentation, concepts, integration guides, or SDK usage, or (3) understand how any Langfuse feature works. This skill covers CLI-based API access (via npx) and multiple documentation retrieval methods.

---

# Langfuse

Two core capabilities: **programmatic API access** via the Langfuse CLI, and **documentation retrieval** via llms.txt, page fetching, and search.

## 1. Langfuse API via CLI

Use the `langfuse-cli` to interact with the full Langfuse REST API from the command line. Run via npx (no install required):

```bash

npx langfuse-cli api <resource> <action>

```

### Quickstart

...[redacted for brevity]

### Detailed CLI Reference

For common workflows, tips, and full usage patterns, see [references/cli.md](references/cli.md).

## 2. Langfuse Documentation

Three methods to access Langfuse docs, in order of preference:

### 2a. Documentation Index (llms.txt)

Fetch the full index of all documentation pages:

```bash

curl -s https://langfuse.com/llms.txt

```

Returns a structured list of every doc page with titles and URLs. Use this to discover the right page for a topic, then fetch that page directly.

Alternatively, you can start on `https://langfuse.com/docs` and explore the site to find the page you need.

### 2b. Fetch Individual Pages as Markdown

Any page listed in llms.txt can be fetched as markdown by appending `.md` to its path or by using Accept: text/markdown in the request headers. Use this when you know which page contains the information needed. Returns clean markdown with code examples and configuration details.

```bash

curl -s "https://langfuse.com/docs/observability/overview.md"

curl -s "https://langfuse.com/docs/observability/overview" -H "Accept: text/markdown"

```

### 2c. Search Documentation

When you need to find information across all docs and github issues/discussions without knowing the specific page:

```bash

curl -s "https://langfuse.com/api/search-docs?query=<url-encoded-query>"

```

Example:

```bash

curl -s "https://langfuse.com/api/search-docs?query=How+do+I+trace+LangGraph+agents"

```

Returns a JSON response with:

- `query`: the original query

- `answer`: a JSON string containing an array of matching documents, each with:
  - `url`: link to the doc page
  - `title`: page title
  - `source.content`: array of relevant text excerpts from the page

Search is a great fallback if you cannot find the relevant pages or need more context. Especially useful when debugging issues as all GitHub Issues and Discussions are also indexed. Responses can be large — extract only the relevant portions.

### Documentation Workflow

1. Start with **llms.txt** to orient — scan for relevant page titles

2. **Fetch specific pages** when you identify the right one

3. Fall back to **search** when the topic is unclear and you want more context

Langfuse CLI

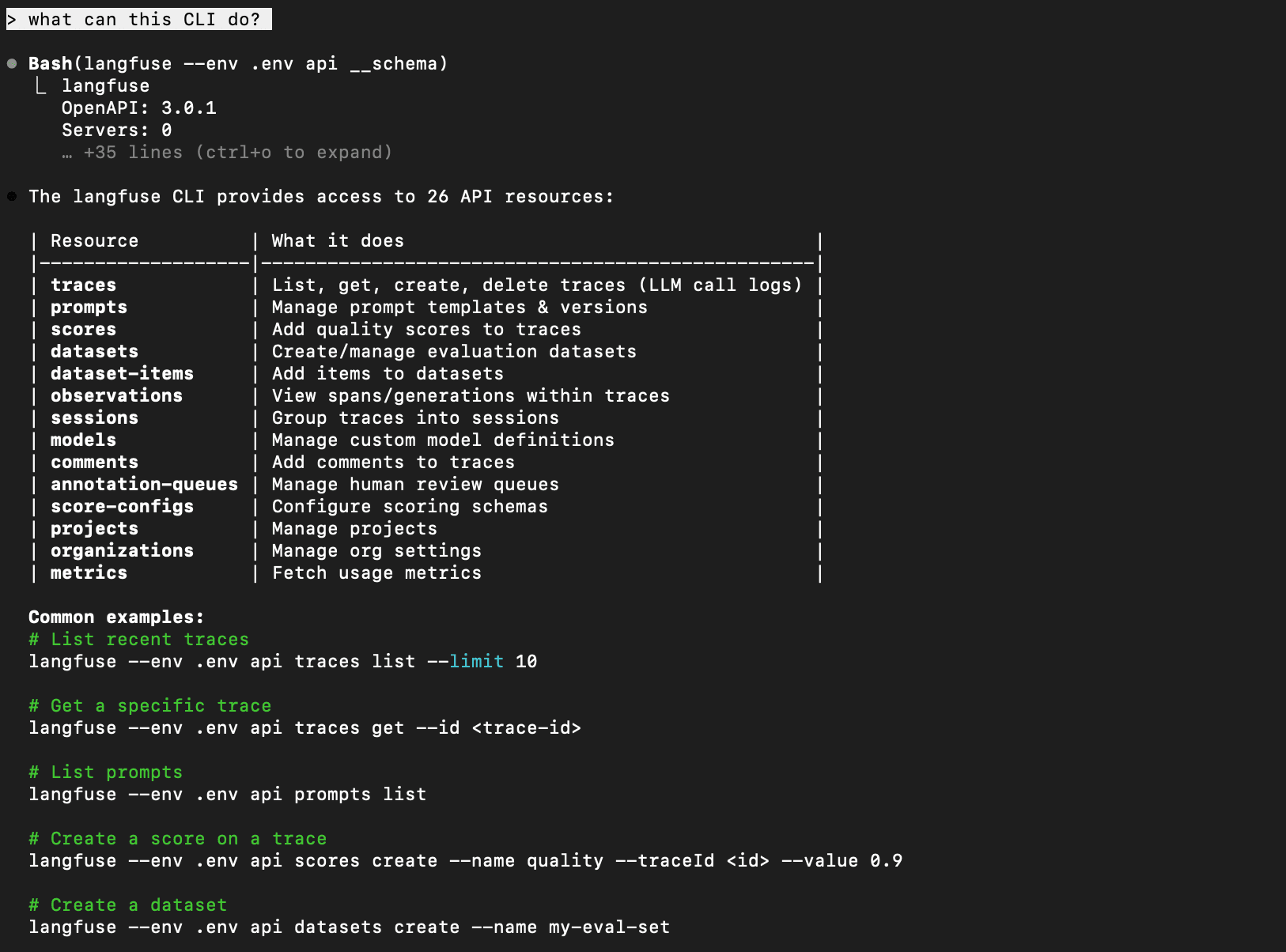

Agents often work in terminal environments, and Langfuse has APIs for all core features. How can we help agents interact with Langfuse without writing HTTP requests by hand?

We built langfuse-cli, a command-line interface that wraps the entire Langfuse API, auto-generated from our OpenAPI spec with some modifications.

The CLI is built on specli, which generates commands directly from the OpenAPI specification. Every API endpoint becomes a CLI command with proper flags, validation, and help text, which in turn supports progressive disclosure.
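As a small illustration, an agent harness written in TypeScript might assemble CLI invocations like this. The sketch only builds argument lists from the `npx langfuse-cli api <resource> <action>` pattern and the `--help` and `--curl` flags listed in the CLI reference in this post. The helper name `buildCliArgs` and the `traces`/`list` pair in the usage lines are hypothetical placeholders, so check the `--help` output for the real resource and action names.

```typescript
// Hypothetical helper: builds the argument list for `npx langfuse-cli api ...`.
type CliMode = "run" | "help" | "curl";

function buildCliArgs(resource: string, action: string, mode: CliMode): string[] {
  const args = ["langfuse-cli", "api", resource, action];
  if (mode === "help") {
    args.push("--help"); // show args/options instead of executing
  } else if (mode === "curl") {
    args.push("--curl"); // preview the curl command without executing
  }
  return args;
}

// Placeholder resource/action names; discover the real ones via `--help`.
const helpArgs = buildCliArgs("traces", "list", "help");
console.log(["npx"].concat(helpArgs).join(" "));
// npx langfuse-cli api traces list --help
```

Passing the arguments as an array (for example to a process-spawning API) avoids shell-quoting problems when values contain spaces.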

For Agents: Reference in the Langfuse Skill

In the main Langfuse Skill (see above) we include a reference that teaches AI agents how to use the CLI:

# Langfuse CLI Reference

## Install

```bash

# Run directly (recommended)

npx langfuse-cli api <resource> <action>

bunx langfuse-cli api <resource> <action>

# Or install globally

npm i -g langfuse-cli

langfuse api <resource> <action>

```

## Discovery

```bash

# List all resources and auth info

langfuse api __schema

# List actions for a resource

langfuse api <resource> --help

# Show args/options for a specific action

langfuse api <resource> <action> --help

# Preview the curl command without executing

langfuse api <resource> <action> --curl

```

...[redacted for brevity]

Agents can read this file to understand all available commands, flags, and usage patterns.

Why Auto-Generate from OpenAPI?

By generating the CLI from the OpenAPI spec, we get:

- Always in sync - CLI updates automatically when the API changes

- Complete coverage - Every endpoint becomes a command

- Proper validation - Request schemas enforce correct input

- Built-in help - Auto-generated help text for every command

- Type safety - Flags match the API parameter types exactly

Technical Implementation

The CLI is built using a patched OpenAPI spec. We flatten discriminated unions (oneOf with allOf) into plain objects so specli can generate proper command flags:

// scripts/patch-openapi.ts
// Flattens complex union types for CLI generation
export function patchOpenAPI(spec: OpenAPISpec) {
  // Convert oneOf/allOf patterns to flat object schemas
  // This makes endpoints like `prompts create` generate proper flags
  return flattenedSpec;
}

Every Page as Markdown

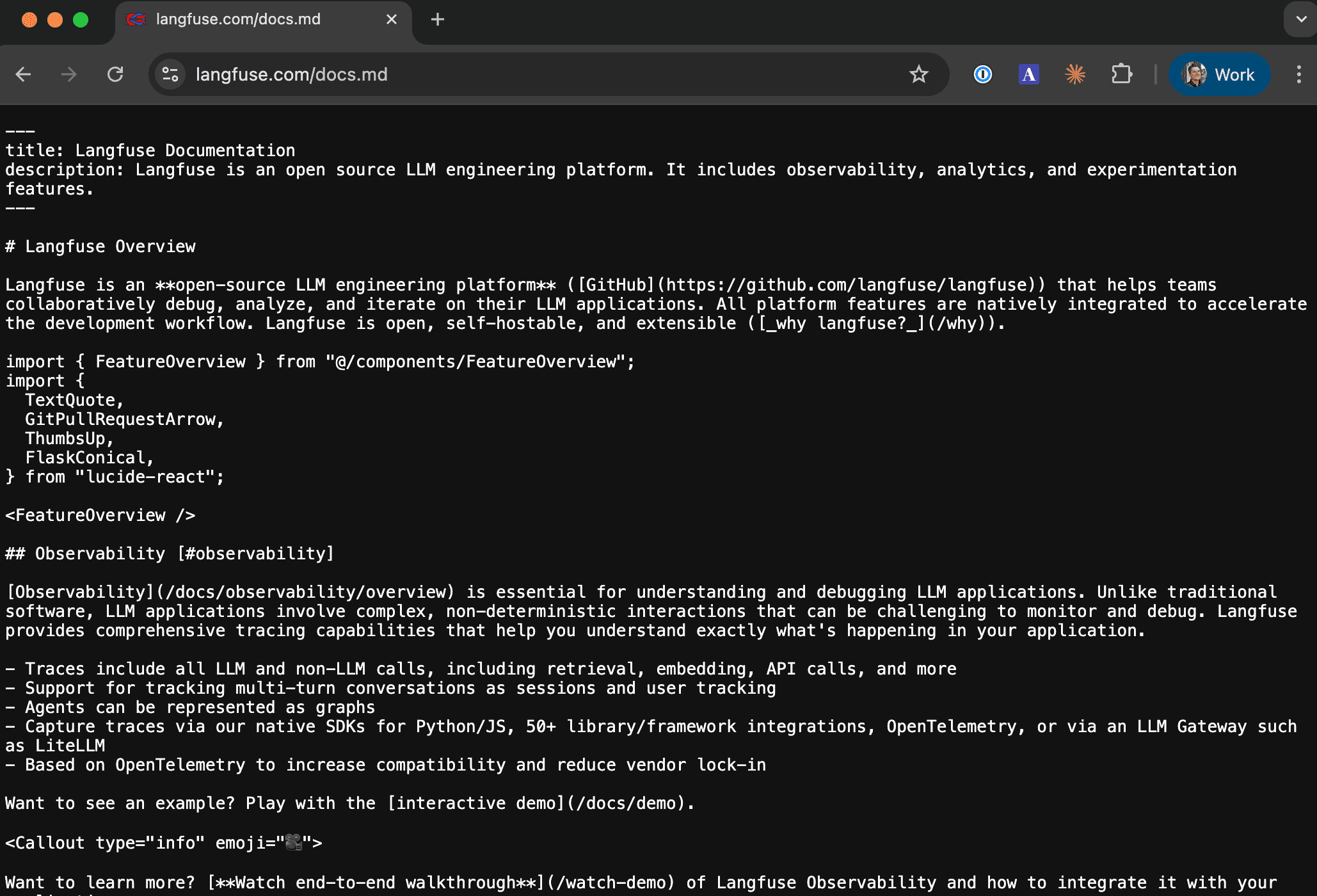

Markdown has quickly become the lingua franca for AI agents. When an agent fetches a typical documentation page as HTML, most of the response is noise: navigation chrome, script tags, style attributes, SVG icons, data attributes, and deeply nested div structures that carry no semantic value. The actual content the agent needs — the text, code examples, and document structure — is buried inside all of that. Feeding raw HTML to an LLM is essentially spending tokens on packaging instead of the content inside.

Markdown solves this by preserving the semantic structure that matters (headings, lists, code blocks, links) while stripping away everything else. This has two practical effects: it dramatically reduces token consumption, and it produces cleaner context that leads to better agent outputs. A typical documentation page served as markdown is 3–5x smaller in token count than its HTML equivalent.

Two Ways to Access

We support two methods for fetching any Langfuse documentation or website page as markdown, covering different agent patterns:

- URL extension - Append `.md` to any page path. This is the simplest approach and works well for agents that construct URLs directly (e.g., from an `llms.txt` index or a skill reference).

- Content negotiation - Send an `Accept: text/markdown` request header. This follows the HTTP content negotiation standard and is the approach used by agents like Claude Code and OpenCode that automatically request markdown from any server that supports it. No URL rewriting is needed: the same canonical URL returns markdown or HTML depending on the client’s preference.

# Option 1: URL extension

curl https://langfuse.com/docs/observability/overview.md

# Option 2: Content negotiation

curl -H "Accept: text/markdown" https://langfuse.com/docs/observability/overview

How It Works

At build time, we convert all of our MDX pages to plain markdown and write them to static files. Next.js rewrites then handle routing: requests with an .md extension or Accept: text/markdown header are rewritten to serve the pre-built markdown file. Because the conversion happens at build time rather than on each request, responses are fast and cacheable.

This powers a number of workflows across the ecosystem:

- Coding agents like Claude Code and OpenCode that automatically include `Accept: text/markdown` in their requests

- Skills and CLI workflows that `curl` specific `.md` URLs referenced in the skill file

- Our MCP server’s `getLangfuseDocsPage` tool, which fetches pages as markdown under the hood

- IDE integrations in Cursor, Windsurf, and others that pull documentation into agent context

- A “Copy as Markdown” button in our docs UI for humans who want clean content too
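The routing rule described under “How It Works” can be sketched in TypeScript as a pure function. This is an illustration under stated assumptions, not the actual Next.js rewrite configuration: the function name `resolveMarkdownPath` and the `/md-build` output directory are hypothetical, and details such as trailing slashes and `q` weights in the Accept header are ignored.

```typescript
// Hypothetical sketch of the rewrite decision; the real site uses Next.js rewrites.
// Returns the path of the pre-built markdown file, or null to serve HTML as usual.
function resolveMarkdownPath(pathname: string, acceptHeader: string): string | null {
  const MD_SUFFIX = ".md";
  const hasMdExtension =
    pathname.length > MD_SUFFIX.length &&
    pathname.slice(-MD_SUFFIX.length) === MD_SUFFIX;
  const wantsMarkdown =
    acceptHeader.toLowerCase().indexOf("text/markdown") !== -1;

  if (hasMdExtension) {
    // /docs/page.md -> /md-build/docs/page.md
    return "/md-build" + pathname;
  }
  if (wantsMarkdown) {
    // /docs/page + Accept: text/markdown -> /md-build/docs/page.md
    return "/md-build" + pathname + MD_SUFFIX;
  }
  return null;
}

console.log(resolveMarkdownPath("/docs/observability/overview.md", "*/*"));
// /md-build/docs/observability/overview.md
console.log(resolveMarkdownPath("/docs/observability/overview", "text/markdown"));
// /md-build/docs/observability/overview.md
console.log(resolveMarkdownPath("/docs/observability/overview", "text/html"));
// null
```

Both access methods resolve to the same static file, which is what keeps the responses cacheable.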

Public RAG Endpoint

How can agents quickly find relevant information across all documentation?

We exposed a public RAG endpoint as a REST API and included it in our Skill. This way, agents can not only browse the docs manually but also query all indexed docs and GitHub issues/discussions.

Instead of reading through dozens of documentation pages, agents can ask natural language questions and get synthesized answers with source citations.
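A hedged TypeScript sketch of the client side: it builds the request URL for the `search-docs` endpoint and pulls source links out of a response. The `SearchDocsResponse` type assumes the response shape shown in the example in this section (`query`, `answer`, `metadata.sources`); the helper names are hypothetical, and the real payload may differ.

```typescript
// Assumed response shape, based on the example response in this post.
interface SearchDocsSource {
  title: string;
  url: string;
}

interface SearchDocsResponse {
  query: string;
  answer: string;
  metadata?: { sources?: SearchDocsSource[] };
}

const SEARCH_ENDPOINT = "https://langfuse.com/api/search-docs";

// Build the GET URL; encodeURIComponent handles spaces and special characters.
function buildSearchUrl(query: string): string {
  return SEARCH_ENDPOINT + "?query=" + encodeURIComponent(query);
}

// Collect cited URLs, tolerating responses that carry no metadata.
function sourceUrls(response: SearchDocsResponse): string[] {
  const sources = (response.metadata && response.metadata.sources) || [];
  return sources.map(function (source) {
    return source.url;
  });
}

const searchUrl = buildSearchUrl("How do I trace LangGraph agents");
console.log(searchUrl);
// https://langfuse.com/api/search-docs?query=How%20do%20I%20trace%20LangGraph%20agents
```

An agent would then fetch `searchUrl`, parse the JSON body, and pass it to `sourceUrls` to decide which pages to read in full.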

The RAG API

Any agent (or human) can now query Langfuse docs without authentication:

curl "https://langfuse.com/api/search-docs?query=How%20do%20I%20trace%20LangGraph%20agents"

Response:

{
  "query": "How do I trace LangGraph agents",
  "answer": "To trace LangGraph agents with Langfuse, use the native integration...",
  "metadata": {
    "sources": [
      {
        "title": "LangGraph Integration",
        "url": "https://langfuse.com/docs/integrations/langgraph"
      }
    ]
  }
}

Implementation

We use Inkeep to power Ask AI on our website. Inkeep also exposes a RAG API with raw access to the data, which our endpoint wraps:

// pages/api/search-docs.ts
import type { NextApiRequest, NextApiResponse } from "next";

// searchLangfuseDocsWithInkeep is a project helper that calls Inkeep (not shown here)
export default async function handler(
  req: NextApiRequest,
  res: NextApiResponse,
) {
  const query = req.query.query as string;
  if (!query) {
    return res.status(400).json({ error: "Missing query parameter" });
  }

  const inkeepResult = await searchLangfuseDocsWithInkeep(query);

  return res.status(200).json({
    query,
    answer: inkeepResult.answer,
    metadata: inkeepResult.metadata,
  });
}

llms.txt

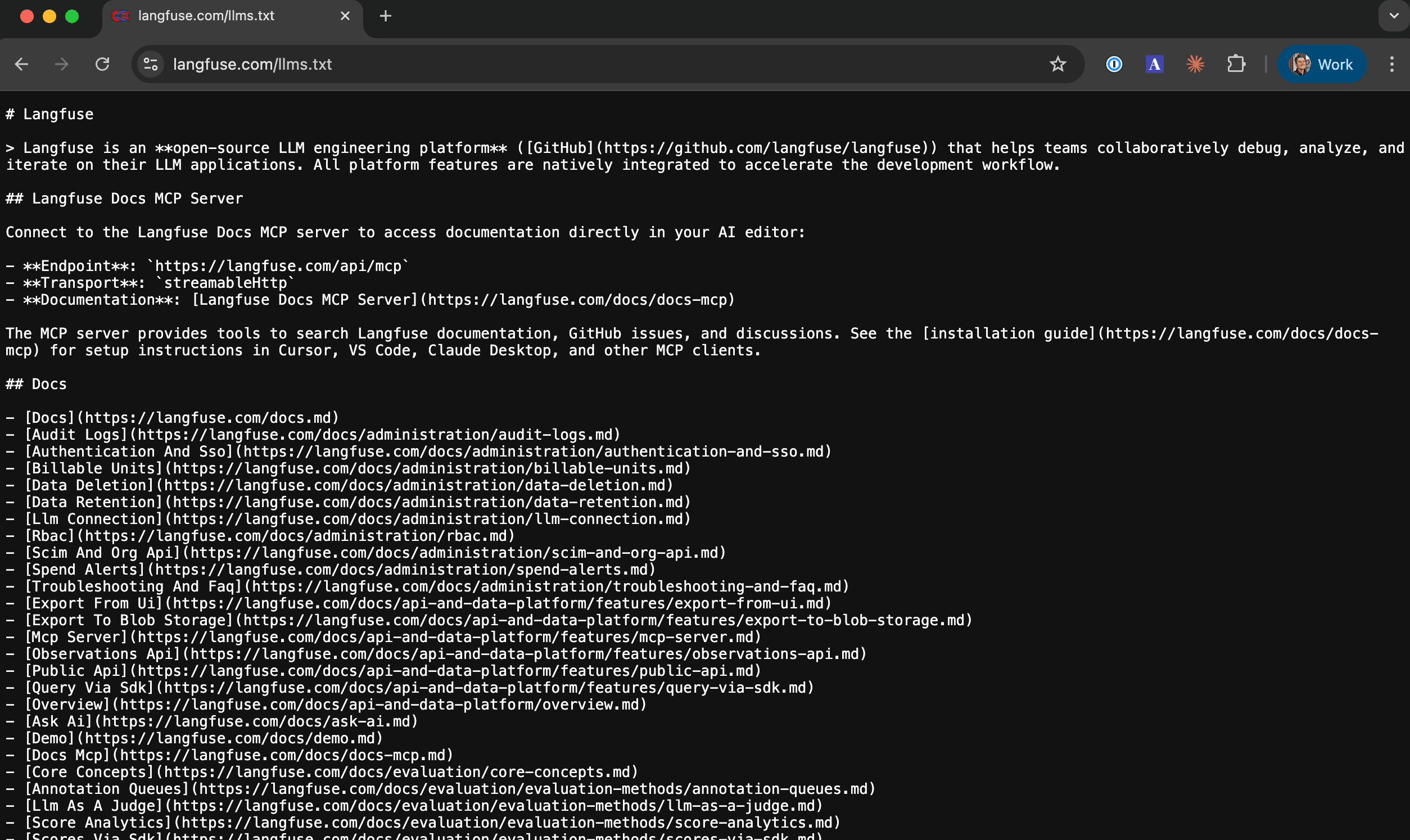

How does an agent quickly understand what Langfuse is and what documentation is available?

We implemented llms.txt, a standard proposed by llmstxt.org that provides a machine-readable index of your documentation. When an agent first encounters Langfuse, it can immediately understand the entire documentation structure without crawling hundreds of pages. Of course, we also mention it in our Skills file as the main entrypoint to the documentation.
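On the agent side, consuming the index can be as simple as extracting markdown links. The TypeScript sketch below assumes index entries of the form `- [Title](/path.md)`, as in the excerpt in this section; `parseLlmsTxt` and `DocLink` are hypothetical names.

```typescript
interface DocLink {
  title: string;
  path: string;
}

// Extract `- [Title](path)` list entries from an llms.txt document.
function parseLlmsTxt(content: string): DocLink[] {
  const links: DocLink[] = [];
  const entry = /^\s*-\s*\[([^\]]+)\]\(([^)\s]+)\)/;
  for (const line of content.split("\n")) {
    const match = entry.exec(line);
    if (match) {
      links.push({ title: match[1] || "", path: match[2] || "" });
    }
  }
  return links;
}

const sampleIndex = [
  "# Langfuse",
  "",
  "## Docs",
  "",
  "- [Observability Overview](/docs/observability/overview.md)",
  "- [Prompt Management](/docs/prompt-management/overview.md)",
].join("\n");

const docLinks = parseLlmsTxt(sampleIndex);
console.log(docLinks.length); // 2
console.log(docLinks.map(function (link) { return link.title; }).join(", "));
// Observability Overview, Prompt Management
```

With titles and paths in hand, an agent can match a topic against the titles and fetch only the one or two pages it needs.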

What is llms.txt?

It’s a simple markdown file that gives LLMs a high-level overview and links to detailed documentation—all in one place. Here’s what ours looks like:

# Langfuse

> Langfuse is an **open-source LLM engineering platform** that helps teams

> collaboratively debug, analyze, and iterate on their LLM applications.

## Langfuse Docs MCP Server

Connect to the Langfuse Docs MCP server to access documentation...

## Docs

- [Observability Overview](/docs/observability/overview.md)

- [Prompt Management](/docs/prompt-management/overview.md)

- [Evaluation](/docs/evaluation/overview.md)

...

Implementation

We auto-generate this file from our sitemap using a simple Node.js script that runs at build time:

// scripts/generate_llms_txt.js

async function generateLLMsList() {

const sitemapContent = fs.readFileSync("public/sitemap-0.xml", "utf-8");

const result = await parser.parseStringPromise(sitemapContent);

let markdownContent = `# Langfuse\n\n`;

markdownContent += `> ${INTRO_DESCRIPTION}\n\n`;

// Group URLs by section (docs, self-hosting, etc.)

urls.forEach((url) => {

const section = new URL(url).pathname.split("/")[1];

// ... categorize and format

});

fs.writeFileSync("public/llms.txt", markdownContent);

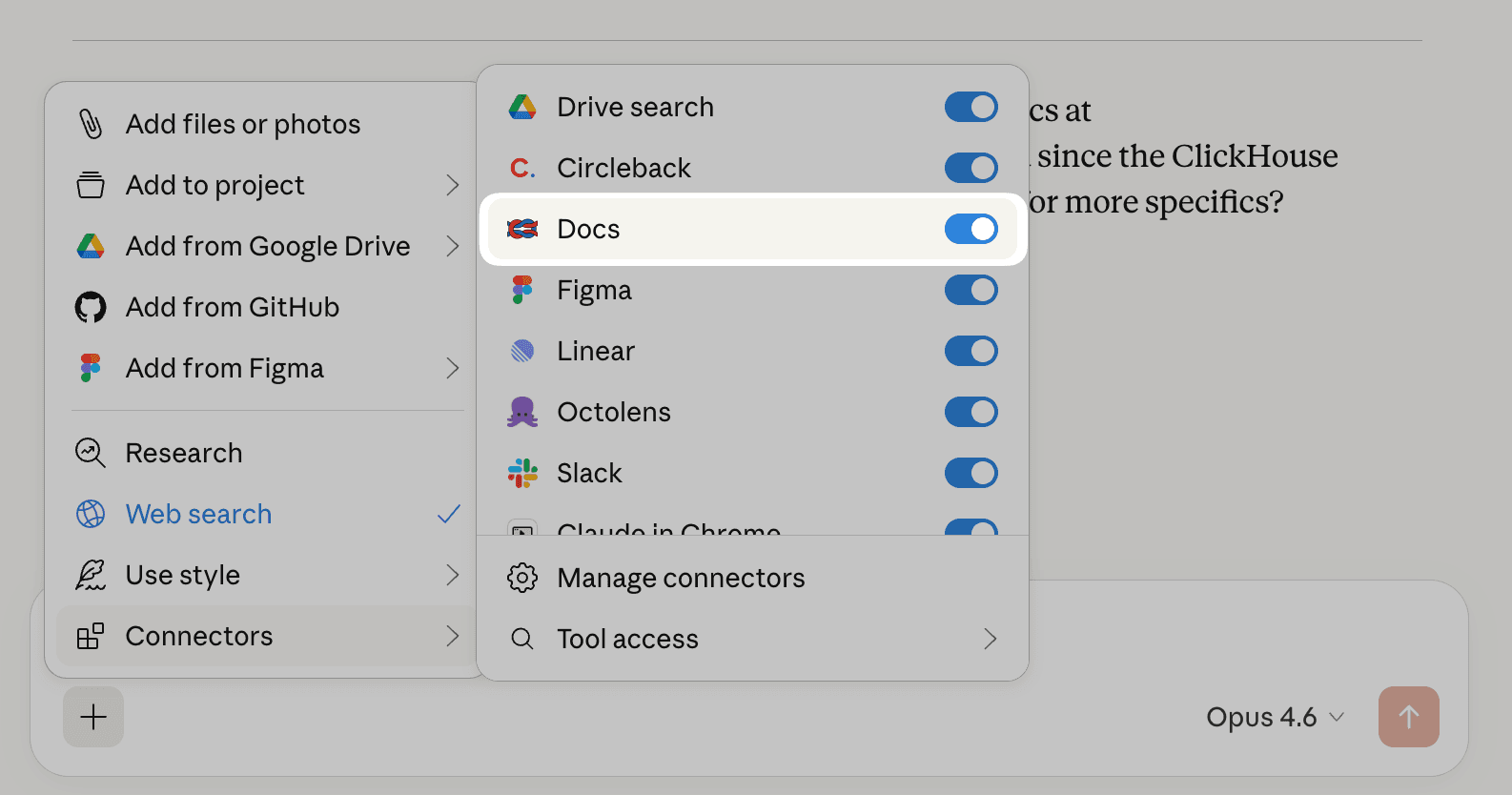

}Docs MCP Server

We also built a public, unauthenticated MCP server specifically for documentation access.

What It Provides

The docs MCP server exposes three tools:

- `searchLangfuseDocs` - Semantic search over all documentation

- `getLangfuseDocsPage` - Fetch specific pages as markdown

- `getLangfuseOverview` - Get the documentation index (llms.txt)

Installation

No authentication required. Works in Cursor, VS Code, Claude Desktop, and any MCP-compatible client:

{
  "mcpServers": {
    "langfuse-docs": {
      "url": "https://langfuse.com/api/mcp",
      "transport": "streamableHttp"
    }
  }
}

Example Usage

User: "Add Langfuse tracing to my LangGraph application"

Agent: [Calls searchLangfuseDocs with query: "LangGraph integration"]

Agent: [Calls getLangfuseDocsPage with path: "/docs/integrations/langgraph"]

Agent: Based on the documentation, here's how to add tracing...

Follow this guide to set up the MCP server.

The MCP server is also used in our public example project that answers any question about Langfuse.
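For clients that speak MCP directly, a tool invocation is a JSON-RPC 2.0 request with the method `tools/call`. The TypeScript sketch below builds such a request for `searchLangfuseDocs`. The `query` argument name follows the example usage above, while `buildToolCall` is a hypothetical helper; session setup (the `initialize` handshake and the HTTP transport) is left out.

```typescript
// JSON-RPC 2.0 request shape used by MCP for tool invocations.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: { [key: string]: string } };
}

// Hypothetical helper: wraps a tool name and its arguments in a tools/call request.
function buildToolCall(
  id: number,
  toolName: string,
  args: { [key: string]: string },
): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id: id,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}

const searchCall = buildToolCall(1, "searchLangfuseDocs", {
  query: "LangGraph integration",
});
console.log(JSON.stringify(searchCall, null, 2));
```

In practice an MCP client library handles this framing, so the sketch is mainly useful for understanding what travels over the wire.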

Authenticated MCP Server

How can non-coding agents (ChatGPT, Claude, …) interact with your Langfuse project data and especially use prompts from Langfuse Prompt Management?

For this, we built an authenticated MCP server that provides access to your Langfuse data through the Model Context Protocol (MCP).

We describe how it works and how we built it in more detail in our blog post, Building Langfuse’s MCP Server.

Authentication

The MCP server uses your Langfuse API keys (public and secret key) to authenticate and access your project data. This gives agents the same level of access you have through the web UI or API.

{
  "mcpServers": {
    "langfuse": {
      "url": "https://cloud.langfuse.com/api/mcp",
      "transport": "streamableHttp",
      "auth": {
        "username": "pk-lf-...",
        "password": "sk-lf-..."
      }
    }
  }
}

Here is the installation guide.
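For clients that set headers themselves, the key pair can be turned into an HTTP Basic `Authorization` header. This minimal TypeScript sketch assumes the server accepts Basic auth with the public key as username and the secret key as password, as the `username`/`password` fields in the configuration above suggest. `basicAuthHeader` is a hypothetical helper, and the keys are placeholders.

```typescript
// Hypothetical helper: HTTP Basic auth is "Basic " + base64("username:password").
function basicAuthHeader(publicKey: string, secretKey: string): string {
  return "Basic " + btoa(publicKey + ":" + secretKey);
}

// Placeholder keys for illustration only; never hard-code real secrets.
const authHeader = basicAuthHeader("pk-lf-example", "sk-lf-example");
console.log(authHeader.indexOf("Basic ") === 0); // true
```

Because the secret key grants access to project data, load it from an environment variable or secret store rather than from a config file committed to source control.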

So, Will You Be My CLI?

We’ve put a lot of love into making Langfuse agent-friendly. Whether you’re an AI coding assistant, an autonomous agent, or a developer using these tools, we want you to have the best possible experience integrating and using Langfuse.

Try it out and let us know what you think. And if you build something cool with these capabilities, we’d love to hear about it!

Happy Valentine’s Day from the Langfuse team! ❤️