Metrics

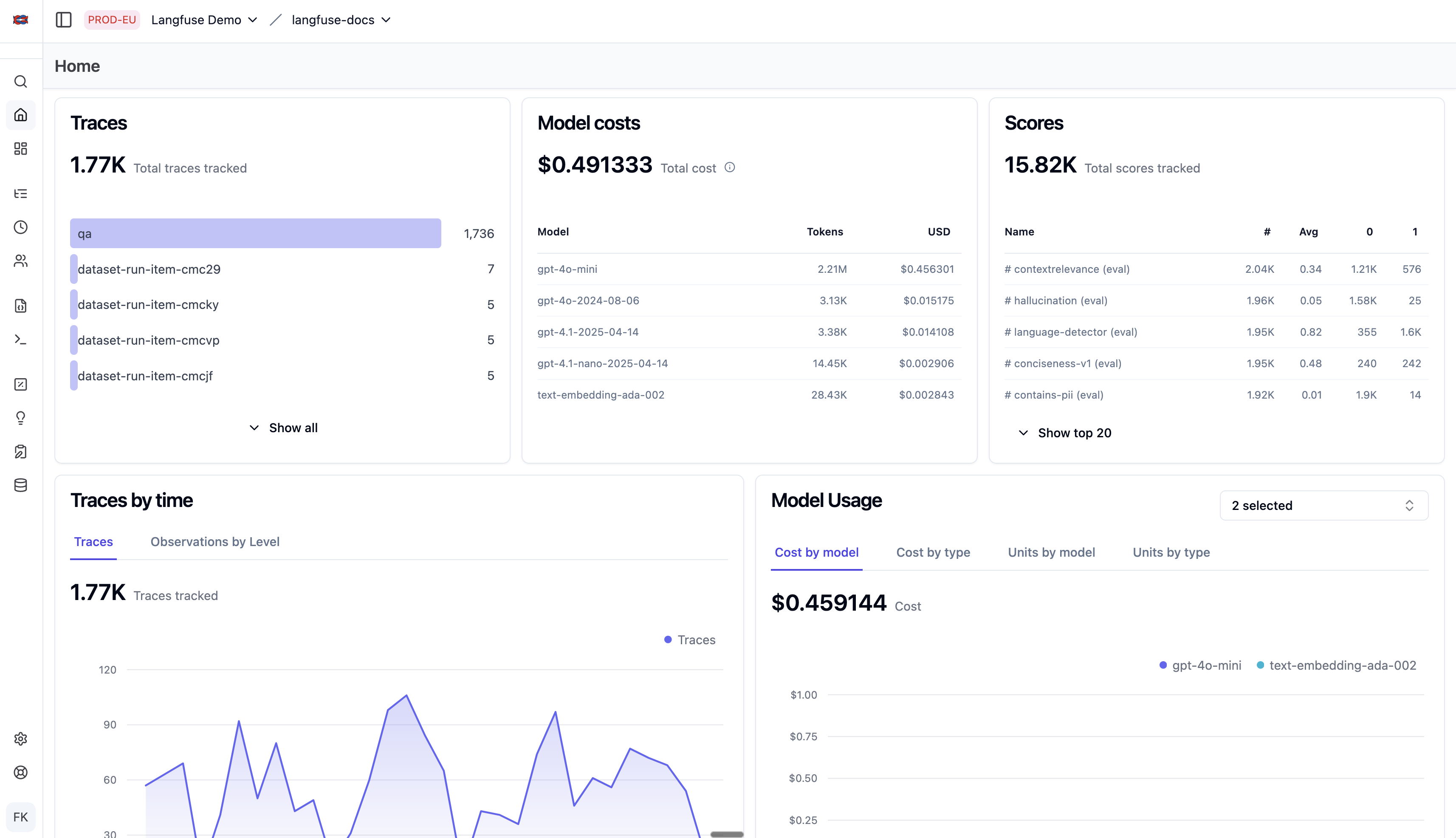

Langfuse metrics derive actionable insights from observability and evaluation traces.

Metrics can be filtered and broken down by dimension in the customizable dashboards and through the metrics API.

Features

Metrics & Dimensions

Metrics:

- Quality is measured through user feedback, model-based scoring, human-in-the-loop scored samples or custom scores via SDKs/API (see scores). Quality is assessed over time as well as across prompt versions, LLMs and users.

- Cost and Latency are accurately measured and broken down by user, session, geography, feature, model and prompt version.

- Volume is measured by the number of ingested traces and the tokens used.
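To make the quality metric concrete, here is a minimal sketch of averaging scores per prompt version. It uses plain Python on hypothetical score records; the field names (`prompt_version`, `source`, `value`) and the helper `mean_score_by` are assumptions for illustration and are not the Langfuse schema or SDK.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical score records. Field names are illustrative only,
# not the actual Langfuse data model.
scores = [
    {"prompt_version": "v1", "source": "user_feedback", "value": 0.0},
    {"prompt_version": "v1", "source": "model_eval", "value": 0.5},
    {"prompt_version": "v2", "source": "user_feedback", "value": 1.0},
    {"prompt_version": "v2", "source": "model_eval", "value": 0.5},
]


def mean_score_by(records, key):
    """Group score records by `key` and return the mean value per group."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record["value"])
    return {group: mean(values) for group, values in groups.items()}


quality_by_version = mean_score_by(scores, "prompt_version")
quality_by_source = mean_score_by(scores, "source")
```

The same helper can group by any other field on the records, for example by model or by user, which mirrors how quality is compared across prompt versions, LLMs and users.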

Dimensions:

- Trace name: differentiate between different use cases, features, etc. by adding a `name` field to your traces.

- User: track usage and cost by user. Just add a `userId` to your traces (docs).

- Tags: filter different use cases, features, etc. by adding tags to your traces.

- Release and version numbers: track how changes to the LLM application affected your metrics.
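As a rough illustration of how these dimensions slice the metrics, the sketch below aggregates volume, cost and latency over a few hypothetical trace records. The `name`, `userId`, `tags` and `release` keys follow the dimensions listed above; the metric fields (`cost_usd`, `latency_s`, `tokens`) and the `aggregate` helper are assumptions made for this example, not the Langfuse SDK or the metrics API.

```python
from collections import defaultdict

# Hypothetical trace records. The metric field names are assumptions
# for illustration, not the actual Langfuse schema.
traces = [
    {"name": "chat", "userId": "u1", "tags": ["prod"], "release": "1.0",
     "cost_usd": 0.25, "latency_s": 1.0, "tokens": 100},
    {"name": "chat", "userId": "u2", "tags": ["prod", "beta"], "release": "1.1",
     "cost_usd": 0.5, "latency_s": 2.0, "tokens": 200},
    {"name": "summarize", "userId": "u1", "tags": ["beta"], "release": "1.1",
     "cost_usd": 0.25, "latency_s": 3.0, "tokens": 300},
]


def aggregate(records, dimension):
    """Sum volume and cost, and average latency, per value of `dimension`."""
    totals = defaultdict(
        lambda: {"traces": 0, "tokens": 0, "cost_usd": 0.0, "latency_sum": 0.0}
    )
    for record in records:
        value = record[dimension]
        # Tags are multi-valued, so a trace counts once under each of its tags.
        keys = value if isinstance(value, list) else [value]
        for key in keys:
            bucket = totals[key]
            bucket["traces"] += 1
            bucket["tokens"] += record["tokens"]
            bucket["cost_usd"] += record["cost_usd"]
            bucket["latency_sum"] += record["latency_s"]
    return {
        key: {
            "traces": b["traces"],
            "tokens": b["tokens"],
            "cost_usd": b["cost_usd"],
            "avg_latency_s": b["latency_sum"] / b["traces"],
        }
        for key, b in totals.items()
    }


by_name = aggregate(traces, "name")
by_user = aggregate(traces, "userId")
by_tag = aggregate(traces, "tags")
```

Because a trace can carry several tags, totals grouped by tag can add up to more than the overall trace count; grouping by `name`, `userId` or `release` does not have this overlap.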

For an exact definition, please refer to the metrics API docs.
