Press & Media

Want to talk about press opportunities? Email clemens@langfuse.com and we’ll be in touch shortly. To keep in touch with Langfuse, follow us on Twitter, LinkedIn, GitHub, and Discord.

Company Facts

Langfuse is building the open source LLM engineering platform to help teams build production-grade LLM applications faster. The platform provides:

- Observability & Tracing — Production tracing, metrics, and analytics for LLM applications

- Prompt Management — Version control and deployment of prompts with integrated monitoring

- Evaluations — LLM-as-a-Judge evaluations, datasets, and structured evaluation processes

- Collaboration — Shareable views, dashboards, and team collaboration features

Company

- Founded: 2023

- Headquarters: Berlin, Germany (Product & Engineering) and San Francisco, CA (Marketing & Sales)

- Team Size: 14+ full-time employees

- Y Combinator: Winter 2023 batch (W23)

- Public Company Handbook: langfuse.com/handbook

Funding

- $4M Seed Round (November 2023)

- Investors: Lightspeed Venture Partners, General Catalyst (La Famiglia), Y Combinator, plus angels and operators in ML, Open Source, and Developer Tools

Most Important Links

- Video Demo

- Company Handbook: langfuse.com/handbook

- Changelog: langfuse.com/changelog

- Enterprise: langfuse.com/enterprise

- Documentation: langfuse.com/docs

- Roadmap: langfuse.com/docs/roadmap

- Blog: langfuse.com/blog

Why does Langfuse exist?

Langfuse exists to accelerate the deployment of reliable, safe, explainable and cost-effective AI applications and agents.

AI will create meaningful value for society and drive economic growth, and we are still in the early days of seeing this impact. Over time, every successful company will be an AI company, with AI at the core of its strategy, value creation, and business processes. Most value creation will happen at the application layer, split between incumbents and AI-native startups.

We’re building an integrated and open tooling layer to help teams with:

- Visibility & explainability → Langfuse Observability (production tracing, metrics, and analytics)

- Collaboration across disciplines → Prompt management, shareable views & dashboards

- Evaluation & data operations → Langfuse Evaluations (evals, datasets, labeling)

We are independent, vendor-neutral, and available as cloud or self-hosted at production scale.

Why do customers choose Langfuse?

Customers choose Langfuse because we are…

- The most used open-source LLM Engineering platform (blog post)

- Model and framework agnostic with 80+ integrations

- Built for production & scale

- Designed for complex agents and multi-step workflows

- Offering a complete toolbox for AI Engineering

- Incrementally adoptable: start with one feature and expand to the full platform over time

- API-first: all features are available via API for custom integrations

- Based on OpenTelemetry for interoperability

- Easy to self-host

Langfuse is the most widely adopted LLM Engineering platform:

- 22,248 GitHub stars

- 23.1M+ SDK installs per month

- 6M+ Docker pulls

- Trusted by 19 of the Fortune 50 and 63 of the Fortune 500

Key Milestones

A more detailed timeline is available in our handbook.

2023

- January: Joined Y Combinator W23 batch, moved to San Francisco

- August: Public launch as open source project — Product Hunt Product of the Day

- November: Raised $4M seed round led by Lightspeed Venture Partners, La Famiglia, and Y Combinator; reached 1,000 GitHub stars

2024

- January: Launched first version of Prompt Management feature

- April: Langfuse 2.0 launch — “The LLM Engineering Platform” with evaluations, datasets, and playground. Product Hunt Product of the Day again

- December: Langfuse v3 — Major infrastructure upgrade with migration from PostgreSQL to ClickHouse

2025

- February: Opened San Francisco office

- April: Reached 10,000 GitHub stars

- May: Launch Week 3 with full-text search, saved/shared table views, and custom dashboards

- June: Open sourced all product features under MIT license (LLM-as-a-Judge, playground, experiments, annotations)

- August: Featured in a Handelsblatt article covering the Langfuse story

- October: Launch Week 4 with advanced filtering, team collaboration features, and Mixpanel integration

The Team

| Name | Role | Social Links |
|---|---|---|
| Marc Klingen | Co-Founder & CEO | Twitter GitHub |
| Max Deichmann | Co-Founder & CTO | Twitter GitHub |
| Clemens Rawert | Co-Founder & COO | Twitter GitHub |
| Marlies Mayerhofer | Founding Engineer | Twitter GitHub |
| Hassieb Pakzad | Founding Engineer | Twitter GitHub |
| Steffen Schmitz | Backend Engineer | LinkedIn GitHub |
| Jannik Maierhöfer | Growth Engineer | Twitter GitHub |
| Felix Krauth | Growth | Twitter GitHub |
| Akio Nuernberger | Founding GTM Engineer | Twitter |
| Nimar Blume | Product Engineer | Twitter GitHub |
| Valeriy Meleshkin | Backend Engineer | LinkedIn GitHub |
| Lotte Verheyden | DevRel | LinkedIn GitHub |
| Leonard Wolters | Ops | LinkedIn GitHub |

Media Assets

All logos are available for download in both SVG (vector) and PNG (raster) formats.

| Asset | PNG | SVG | Notes |
|---|---|---|---|
| Full Color Logo | Download | Download | Primary brand mark for light backgrounds |
| White Logo | Download | Download | For dark backgrounds |
| Icon Only | Download | Download | Compact icon without wordmark |

2023: Founders during Y Combinator W23

2025: Team Offsite in Portugal

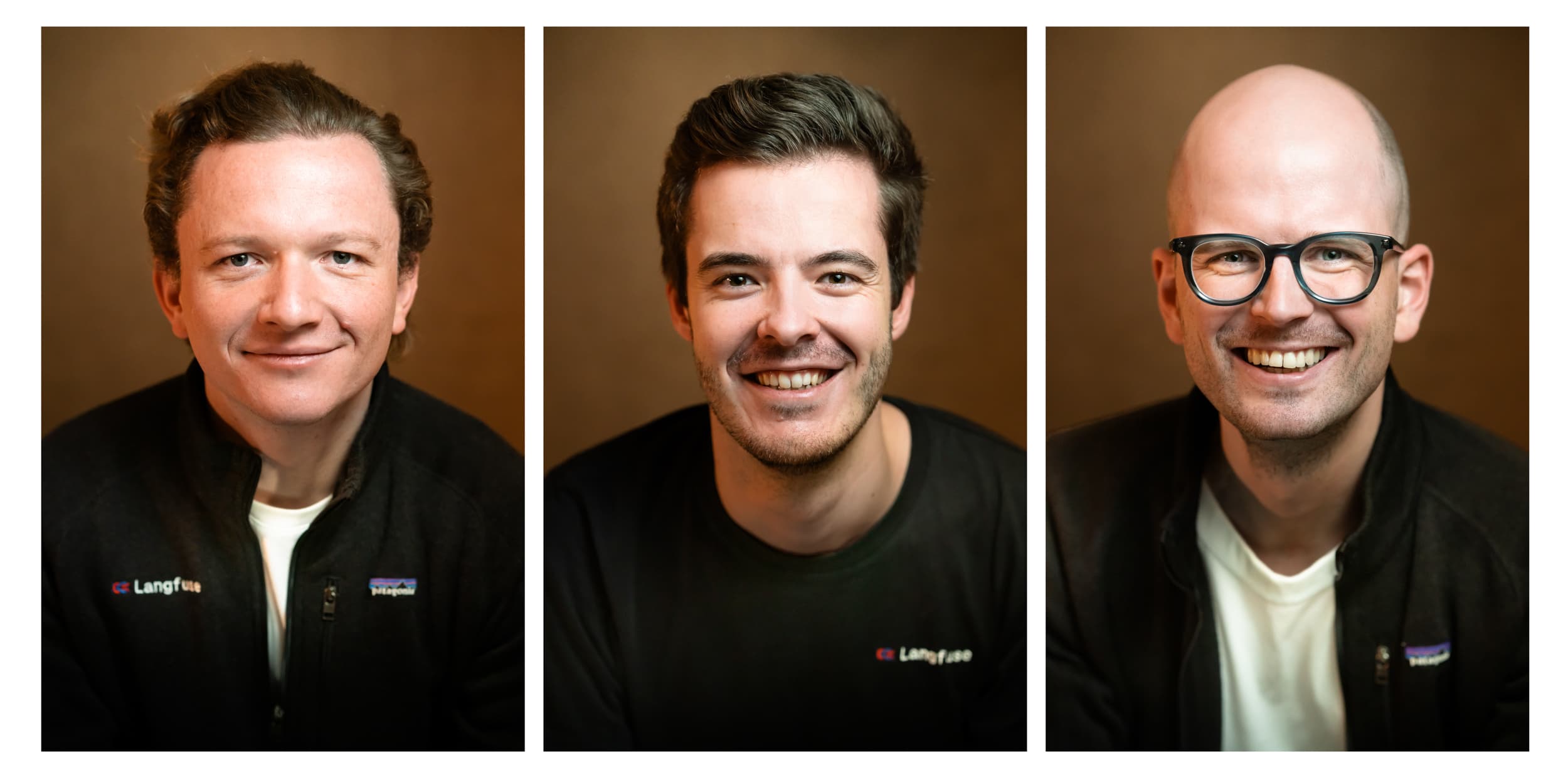

2025: Founder team

2025: Clemens

2025: Marc

2025: Max

Customer Stories

Learn how leading companies use Langfuse to build production-grade AI applications.

"Langfuse hits the sweet spot between engineering requirements and empowerment of non-technical users to contribute their domain expertise."

"Langfuse has enabled our developers to get extremely fast feedback. It's fundamental to how our developers understand their AI implementations."

"When a customer pings us, we pop open Langfuse to understand what's going on. It keeps customer support resolutions at less than 8 minutes on average."

"Langfuse enables us to track every prompt, response, cost, and latency in real time, turning black-box models into auditable, optimizable assets."

"Building on Langfuse we saved 30% of external BPO cost by deflecting 50% of support conversations to AI."