Hosted MCP Server for Langfuse Prompt Management

Langfuse now includes a native Model Context Protocol (MCP) server with write capabilities, enabling AI agents to fetch and update prompts directly.

We’re excited to announce that Langfuse now includes a native MCP server built directly into the platform. This first release supports Prompt Management, and we will extend it to the rest of the Langfuse data platform in the future.

Our previous Prompt MCP server (changelog) was a Node.js package. The new one uses the Streamable HTTP transport to communicate with the Langfuse API directly, so there is no need to build or install any external dependencies.

What’s New

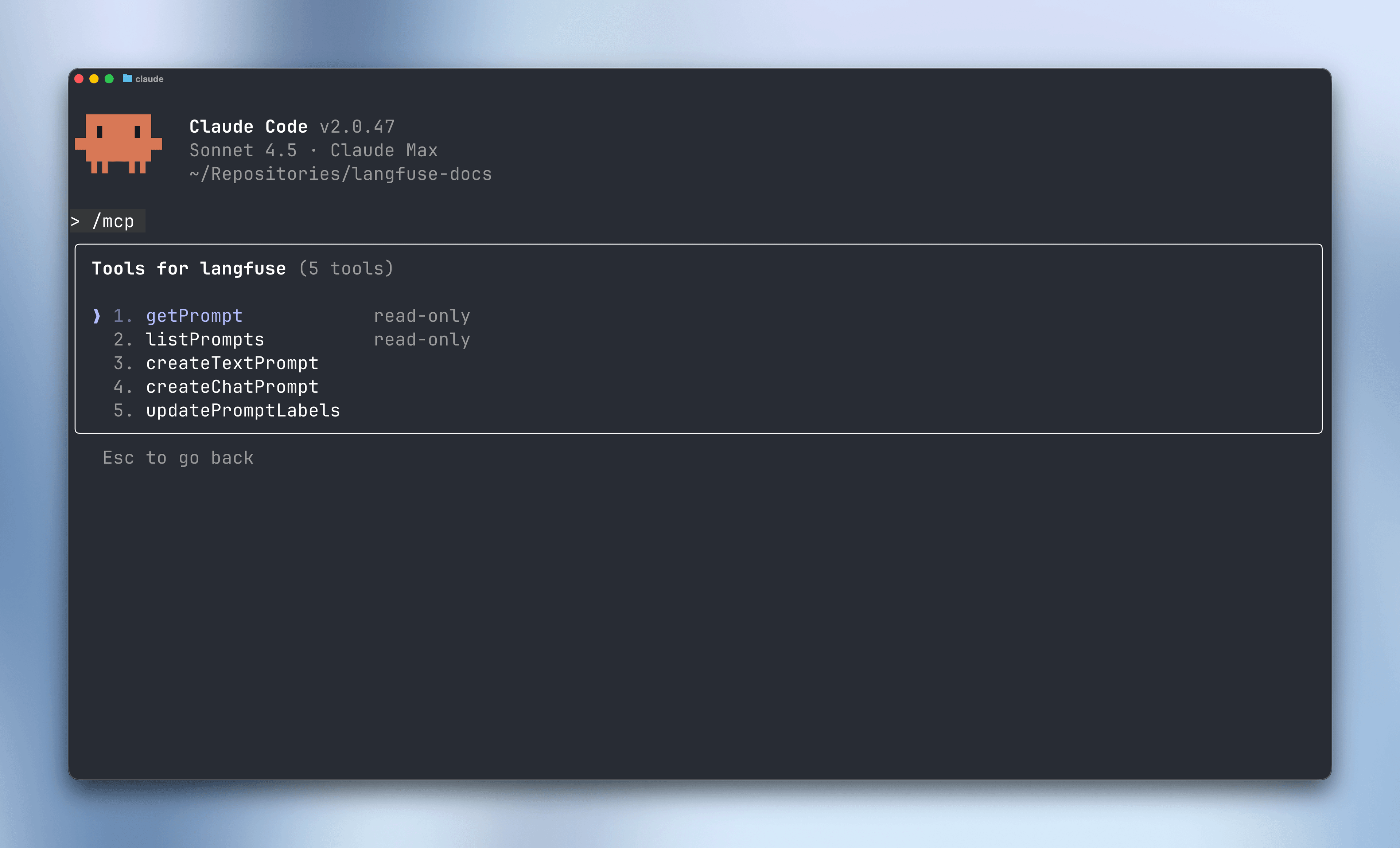

The native MCP server is available at /api/public/mcp and provides five tools for comprehensive prompt management:

Read Operations:

- getPrompt - Fetch specific prompts by name, label, or version
- listPrompts - Browse all prompts with filtering and pagination

Write Operations:

- createTextPrompt - Create new text prompt versions
- createChatPrompt - Create new chat prompt versions (OpenAI-style messages)
- updatePromptLabels - Manage labels across prompt versions
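To make the tool list more concrete, here is a minimal sketch of what a tool invocation looks like on the wire. MCP clients call tools through a JSON-RPC 2.0 request with the method `tools/call`; the helper `build_tool_call` is a hypothetical name used only for illustration, and the argument keys (`name`, `label`) are assumptions inferred from the `getPrompt` description above rather than a confirmed schema. In practice your MCP client builds this message for you.

```python
import json
from typing import Any


def build_tool_call(tool: str, arguments: dict[str, Any], request_id: int = 1) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


if __name__ == "__main__":
    # Assumed argument names; check the tool schema reported by the server.
    request = build_tool_call("getPrompt", {"name": "my-prompt", "label": "production"})
    print(json.dumps(request, indent=2))
```

The sketch only constructs the message and does not send it. A client can list the actual tools and their input schemas with the `tools/list` method before calling them.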

Setup

Configure your MCP client with Basic Auth credentials:

    # Claude Code - one command
    claude mcp add --transport http langfuse https://cloud.langfuse.com/api/public/mcp \
        --header "Authorization: Basic {your-base64-token}"

The native server uses a stateless architecture, ensuring clean separation between projects and reliable operation across all deployment types (Cloud, self-hosted, or local development).

See the documentation for more details.
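If you need to produce the `{your-base64-token}` value yourself, the sketch below shows one way to do it. It assumes the token follows the standard HTTP Basic scheme with your Langfuse public key as the username and your secret key as the password, as with the rest of the Langfuse public API. The function name `basic_auth_header` and the example key values are hypothetical placeholders.

```python
import base64


def basic_auth_header(public_key: str, secret_key: str) -> str:
    """Return the value for an HTTP Basic Authorization header."""
    # Basic auth encodes "username:password" as base64.
    raw = f"{public_key}:{secret_key}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return f"Basic {token}"


if __name__ == "__main__":
    # Placeholder keys for illustration; substitute your project's API keys.
    print(basic_auth_header("pk-lf-example", "sk-lf-example"))
```

The part after `Basic ` is what replaces `{your-base64-token}` in the setup command. Treat it like the secret key itself, since base64 is an encoding and not encryption.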

Feedback

We’d love to hear about your experience with the Langfuse MCP server. Share your feedback, ideas, and use cases in our GitHub Discussion.

Get Started

- MCP Server Documentation - Complete setup guide

- Prompt Management with MCP - Prompt-specific workflows