Observability for Haystack

This cookbook demonstrates how to use Langfuse to gain real-time observability for your Haystack application.

What is Haystack? Haystack is an open-source Python framework developed by deepset. Its modular design lets users assemble custom pipelines to build production-ready LLM applications, such as retrieval-augmented generation (RAG) pipelines and state-of-the-art search systems. It integrates with Hugging Face Transformers, Elasticsearch, OpenSearch, OpenAI, Cohere, Anthropic and others, which makes it a popular framework for teams of all sizes.

What is Langfuse? Langfuse is an open-source LLM engineering platform. It helps teams collaboratively manage prompts, trace applications, debug problems, and evaluate their LLM systems in production.

Get Started

We’ll walk through a simple example of using Haystack and integrating it with Langfuse.

Step 1: Install Dependencies

```
%pip install haystack-ai langfuse openinference-instrumentation-haystack
```

Step 2: Set Up Environment Variables

Configure your Langfuse API keys. You can get them by signing up for Langfuse Cloud or self-hosting Langfuse.

```python
import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_BASE_URL"] = "https://cloud.langfuse.com"  # 🇪🇺 EU region
# os.environ["LANGFUSE_BASE_URL"] = "https://us.cloud.langfuse.com"  # 🇺🇸 US region

# Your OpenAI and SerperDev API keys
os.environ["OPENAI_API_KEY"] = "sk-proj-..."
os.environ["SERPERDEV_API_KEY"] = "..."
```

With the environment variables set, we can now initialize the Langfuse client. `get_client()` reads its credentials from these environment variables.
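You can optionally run a pre-flight check before calling `get_client()`. It catches any key that is still unset or still holds the `...` placeholder from the example above. The helper `find_unconfigured_keys` is a hypothetical, standard-library-only sketch and is not part of the Langfuse or Haystack APIs. It only checks that values look filled in. Verifying the Langfuse credentials themselves is still the job of `auth_check()`.

```python
import os
from collections.abc import Mapping

# Environment variables this cookbook reads (hypothetical list assembled from Step 2)
REQUIRED_KEYS = (
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_BASE_URL",
    "OPENAI_API_KEY",
    "SERPERDEV_API_KEY",
)


def find_unconfigured_keys(env: Mapping, required=REQUIRED_KEYS) -> list:
    """Return the names of required variables that are unset, empty,
    or still end with the '...' placeholder used in the example keys."""
    problems = []
    for name in required:
        value = env.get(name, "").strip()
        if not value or value.endswith("..."):
            problems.append(name)
    return problems


# Report (rather than raise) so the notebook cell still runs to completion
unconfigured = find_unconfigured_keys(os.environ)
if unconfigured:
    print("Still unconfigured:", ", ".join(unconfigured))
```

The check is deliberately shallow: it does not contact any service. A wrong but non-placeholder key will pass it and only fail at `auth_check()` or at the first model call.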

```python
from langfuse import get_client

langfuse = get_client()

# Verify connection
if langfuse.auth_check():
    print("Langfuse client is authenticated and ready!")
else:
    print("Authentication failed. Please check your credentials and host.")
```

Step 3: Initialize Haystack Instrumentation

Now, we initialize the OpenInference Haystack instrumentation. This third-party instrumentation automatically captures Haystack operations and exports OpenTelemetry (OTel) spans to Langfuse.

```python
from openinference.instrumentation.haystack import HaystackInstrumentor

# Instrument the Haystack application
HaystackInstrumentor().instrument()
```

Step 4: Create a Simple Haystack Application

Now we create a simple Haystack agent that uses an OpenAI chat model (`gpt-4o-mini`) and can call the SerperDev web search API as a tool.

```python
from haystack.components.agents import Agent
from haystack.components.generators.chat import OpenAIChatGenerator
from haystack.components.websearch import SerperDevWebSearch
from haystack.dataclasses import ChatMessage
from haystack.tools import ComponentTool

# Wrap the SerperDev web search component so the agent can call it as a tool
search_tool = ComponentTool(component=SerperDevWebSearch())

basic_agent = Agent(
    chat_generator=OpenAIChatGenerator(model="gpt-4o-mini"),
    system_prompt="You are a helpful web agent.",
    tools=[search_tool],
)

# Warm up the agent (chat generator and tools) before the first run; some Haystack versions require it
basic_agent.warm_up()

result = basic_agent.run(messages=[ChatMessage.from_user("When was the first version of Haystack released?")])
print(result["last_message"].text)

# Send any buffered spans to Langfuse before the cell or script ends
langfuse.flush()
```

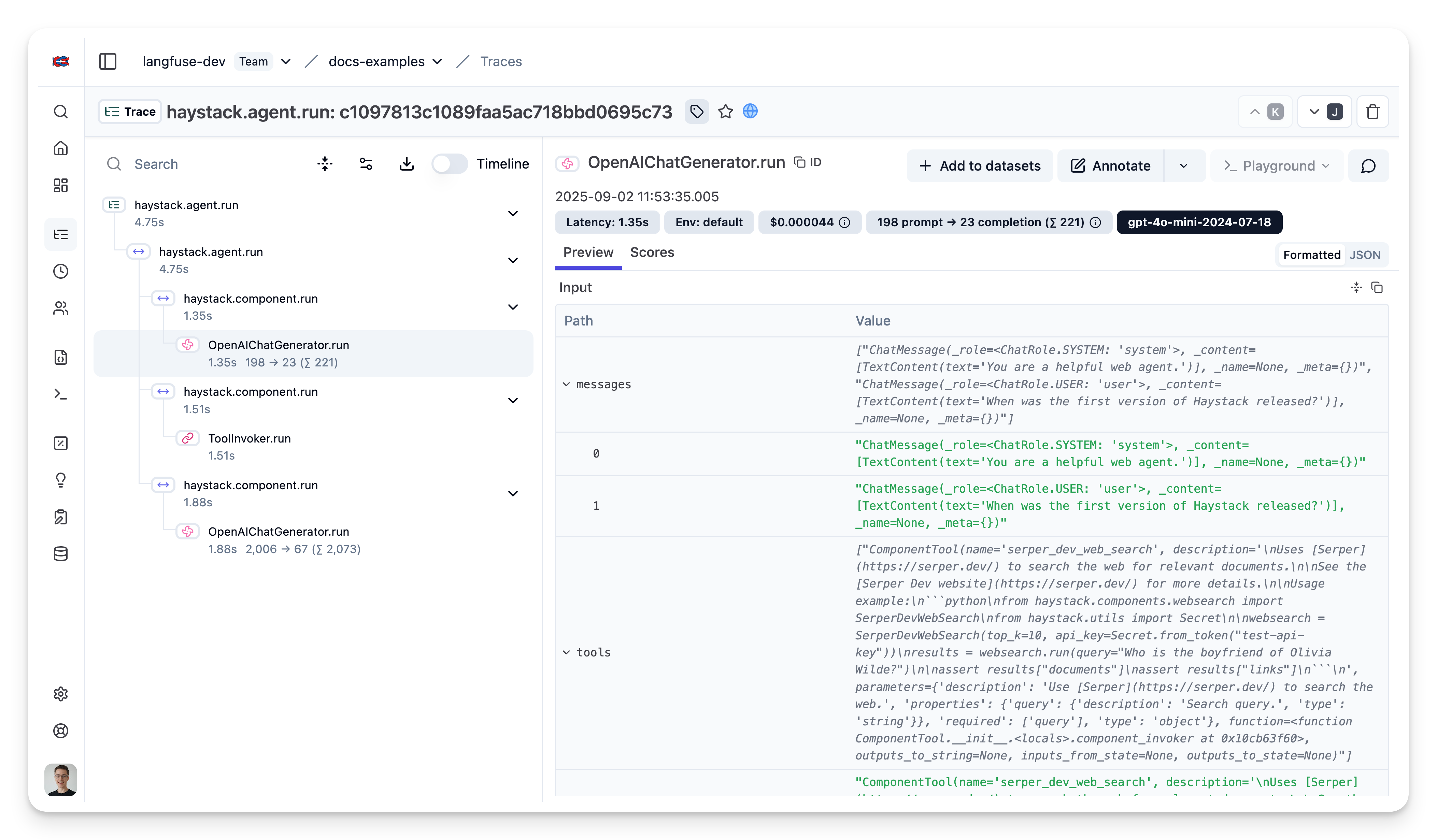

Step 5: View Traces in Langfuse

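After running the agent, open your project in Langfuse to explore the generated trace. It shows a span for each step the agent took, such as chat generator calls and web search tool invocations. Each span comes with metrics such as token counts and latencies, so you can follow the agent's execution path.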

Interoperability with the Python SDK

You can use this integration together with the Langfuse Python SDK to add attributes such as user and session IDs to the trace.

The `@observe()` decorator wraps your instrumented code in its own span, so the Haystack spans created inside it are grouped under a single trace. Within that scope, `propagate_attributes` adds attributes to every span that is created.

```python
from haystack.dataclasses import ChatMessage
from langfuse import get_client, observe, propagate_attributes

langfuse = get_client()

@observe()
def my_llm_pipeline(query):
    # Add additional attributes (user_id, session_id, metadata, version, tags) to all spans created within this execution scope
    with propagate_attributes(
        user_id="user_123",
        session_id="session_abc",
        tags=["agent", "my-trace"],
        metadata={"email": "user@langfuse.com"},
        version="1.0.0",
    ):
        # YOUR APPLICATION CODE HERE, e.g. the Haystack agent (basic_agent) from Step 4
        result = basic_agent.run(messages=[ChatMessage.from_user(query)])["last_message"].text

        # Update the trace input and output
        langfuse.update_current_trace(
            input=query,
            output=result,
        )

        return result

my_llm_pipeline("When was the first version of Haystack released?")
langfuse.flush()
```

Learn more about the `@observe()` decorator in the Langfuse SDK instrumentation docs.

Next Steps

Once you have instrumented your code, you can manage, evaluate and debug your application: