Observability and Tracing for the Vercel AI SDK

This notebook demonstrates how to integrate Langfuse with the Vercel AI SDK to monitor, debug, and evaluate your LLM-powered applications and AI agents.

What is the Vercel AI SDK? The Vercel AI SDK is a lightweight toolkit that lets developers call and stream responses from AI models (such as OpenAI, Anthropic, or any compliant provider) directly in web apps with simple server and client functions.

What is Langfuse? Langfuse is an open-source observability platform for AI agents and LLM applications. It helps you visualize and monitor LLM calls, tool usage, cost, latency, and more.

How do they work together? The Vercel AI SDK has built-in telemetry based on OpenTelemetry. Langfuse also uses OpenTelemetry, which means they integrate seamlessly. When you enable telemetry in the Vercel AI SDK and add the Langfuse span processor, your AI calls automatically flow into Langfuse where you can analyze them.

Steps to integrate Langfuse with the Vercel AI SDK

TL;DR

Here’s the flow of how Langfuse and the Vercel AI SDK work together:

- You enable telemetry in the AI SDK (experimental_telemetry: { isEnabled: true })

- The AI SDK creates spans for each operation (model calls, tool executions, etc.)

- The LangfuseSpanProcessor intercepts these spans and sends them to Langfuse

- Langfuse stores and visualizes the data in traces you can explore
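To make this flow concrete, here is a toy model of it in plain TypeScript. ToySpan, ToyExporter, and ToyBatchingProcessor are hypothetical stand-ins, not the OpenTelemetry or Langfuse APIs. The sketch assumes the processor buffers finished spans and exports them in batches, which is what the forceFlush() calls later in this guide suggest. It shows why spans that are still buffered when a process exits never reach the backend.

```typescript
// Toy model of: AI SDK creates spans -> span processor -> Langfuse.
// All names here are hypothetical stand-ins, not real OpenTelemetry/Langfuse types.
interface ToySpan {
  name: string; // e.g. "ai.generateText"
  scope: string; // instrumentation scope, e.g. "ai"
}

// Stand-in for the Langfuse backend: receives batches of finished spans.
type ToyExporter = (batch: ToySpan[]) => Promise<void>;

class ToyBatchingProcessor {
  private buffer: ToySpan[] = [];
  private readonly exporter: ToyExporter;

  constructor(exporter: ToyExporter) {
    this.exporter = exporter;
  }

  // Called whenever the instrumented code finishes a span.
  onEnd(span: ToySpan): void {
    this.buffer.push(span);
  }

  // Number of spans that would be lost if the process exited right now.
  get pending(): number {
    return this.buffer.length;
  }

  // Export everything buffered so far and clear the buffer.
  async forceFlush(): Promise<void> {
    const batch = this.buffer;
    this.buffer = [];
    if (batch.length > 0) {
      await this.exporter(batch);
    }
  }
}

// Usage: two spans from one generateText call that used a tool.
const received: ToySpan[] = [];
const processor = new ToyBatchingProcessor(async (batch) => {
  received.push(...batch);
});
processor.onEnd({ name: "ai.toolCall", scope: "ai" });
processor.onEnd({ name: "ai.generateText", scope: "ai" });
console.log(processor.pending, received.length); // 2 0 (nothing exported yet)
```

Until forceFlush() runs, the spans exist only in memory, so the backend has received nothing.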

This integration uses OpenTelemetry, an observability standard. The Vercel AI SDK’s telemetry feature is documented in the Vercel AI SDK documentation on Telemetry.

Let’s walk through the steps in more detail.

1. Install Dependencies

Install the Vercel AI SDK, OpenTelemetry, and Langfuse:

```bash
npm install ai
npm install @ai-sdk/openai
npm install zod  # used for the tool input schema in step 4
npm install @langfuse/tracing @langfuse/otel @opentelemetry/sdk-node
```

Note: While this example uses @ai-sdk/openai, you can use any AI provider supported by the Vercel AI SDK (Anthropic, Google, Mistral, etc.). The integration works the same way.

2. Configure Environment & API Keys

Set up your Langfuse and LLM provider credentials (this example uses OpenAI). You can get Langfuse keys by signing up for a free Langfuse Cloud account or by self-hosting Langfuse.

```bash
LANGFUSE_SECRET_KEY="sk-lf-..."
LANGFUSE_PUBLIC_KEY="pk-lf-..."
LANGFUSE_BASE_URL="https://cloud.langfuse.com" # 🇪🇺 EU region
# LANGFUSE_BASE_URL="https://us.cloud.langfuse.com" # 🇺🇸 US region
OPENAI_API_KEY="sk-proj-..."
```

3. Initialize Langfuse with OpenTelemetry

Langfuse’s tracing is built on OpenTelemetry. To connect Langfuse with the Vercel AI SDK, you need to set up the OpenTelemetry SDK with the LangfuseSpanProcessor.

The LangfuseSpanProcessor is the component that captures telemetry data (called “spans” in OpenTelemetry) and sends it to Langfuse for storage and visualization.
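The setup below constructs new LangfuseSpanProcessor() without arguments, so it presumably reads the keys and base URL from the environment variables set in step 2. A missing or swapped key then tends to show up only as traces that never arrive. Here is a minimal startup check you could run before sdk.start(). checkTracingEnv is a hypothetical helper, not part of any SDK, and the pk-lf-/sk-lf- prefix checks are an assumption based on the placeholder values in step 2.

```typescript
// Hypothetical pre-flight check for the variables from step 2.
// Not part of the Langfuse or AI SDK packages.
type Env = Record<string, string | undefined>;

const REQUIRED_VARS = [
  "LANGFUSE_PUBLIC_KEY",
  "LANGFUSE_SECRET_KEY",
  "OPENAI_API_KEY",
] as const;

// Returns a list of problems; an empty list means the config looks usable.
function checkTracingEnv(env: Env): string[] {
  const problems: string[] = [];

  for (const name of REQUIRED_VARS) {
    if (!env[name]) problems.push(`${name} is not set`);
  }

  // Assumed key prefixes, taken from the placeholder values in step 2.
  const publicKey = env["LANGFUSE_PUBLIC_KEY"];
  if (publicKey && !publicKey.startsWith("pk-lf-")) {
    problems.push("LANGFUSE_PUBLIC_KEY does not start with pk-lf-");
  }
  const secretKey = env["LANGFUSE_SECRET_KEY"];
  if (secretKey && !secretKey.startsWith("sk-lf-")) {
    problems.push("LANGFUSE_SECRET_KEY does not start with sk-lf-");
  }

  // If LANGFUSE_BASE_URL is set, it should at least look like an http(s) URL.
  const baseUrl = env["LANGFUSE_BASE_URL"];
  if (baseUrl && !/^https?:\/\//.test(baseUrl)) {
    problems.push("LANGFUSE_BASE_URL should start with https:// or http://");
  }

  return problems;
}

// Usage in Node, before sdk.start():
//   const problems = checkTracingEnv(process.env);
//   if (problems.length > 0) throw new Error(problems.join("; "));
```

The function takes the environment as a parameter instead of reading process.env directly, so it is easy to test with fixed values.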

```typescript
import { NodeSDK } from "@opentelemetry/sdk-node";
import { LangfuseSpanProcessor } from "@langfuse/otel";

const sdk = new NodeSDK({
  spanProcessors: [new LangfuseSpanProcessor()],
});

sdk.start();
```

4. Enable telemetry in your AI SDK calls

Simply pass { experimental_telemetry: { isEnabled: true }} to your AI SDK functions. The AI SDK will automatically create telemetry spans, which the LangfuseSpanProcessor (from step 3) captures and sends to Langfuse.

```typescript
import { generateText, stepCountIs, tool } from "ai";
import { openai } from "@ai-sdk/openai";
import { z } from "zod";

const { text } = await generateText({
  model: openai("gpt-5.1"),
  prompt: "What is the weather like today in San Francisco?",
  tools: {
    getWeather: tool({
      description: "Get the weather in a location",
      inputSchema: z.object({
        location: z.string().describe("The location to get the weather for"),
      }),
      // Mock implementation: a random temperature between 62 and 82
      execute: async ({ location }) => ({
        location,
        temperature: 72 + Math.floor(Math.random() * 21) - 10,
      }),
    }),
  },
  // Allow more than one step so the model can answer using the tool result;
  // with a single step, `text` can be empty after a tool call
  stopWhen: stepCountIs(5),
  experimental_telemetry: { isEnabled: true },
});

console.log(text);
```

That’s it! This works for all AI SDK functions including generateText, streamText, generateObject, and tool calls.
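Besides isEnabled, the AI SDK's telemetry settings accept further fields, including functionId, metadata, recordInputs, and recordOutputs (see the AI SDK telemetry documentation). A small helper keeps those options consistent across calls. The TelemetryOptions interface below is a local, simplified mirror of those fields, and buildTelemetry is a hypothetical name, not an AI SDK export.

```typescript
// Local, simplified mirror of the AI SDK's experimental_telemetry fields.
type TelemetryMetadata = Record<string, string | number | boolean>;

interface TelemetryOptions {
  isEnabled: boolean;
  functionId?: string;
  metadata?: TelemetryMetadata;
  recordInputs?: boolean;
  recordOutputs?: boolean;
}

// Hypothetical helper: consistent telemetry options for every AI SDK call.
// With redact = true, the options ask the AI SDK not to record inputs and
// outputs on its spans.
function buildTelemetry(
  functionId: string,
  metadata: TelemetryMetadata = {},
  redact = false,
): TelemetryOptions {
  return {
    isEnabled: true,
    functionId,
    metadata,
    recordInputs: !redact,
    recordOutputs: !redact,
  };
}

// Usage inside a generateText/streamText call:
//   experimental_telemetry: buildTelemetry("weather-agent", { city: "San Francisco" }),
```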

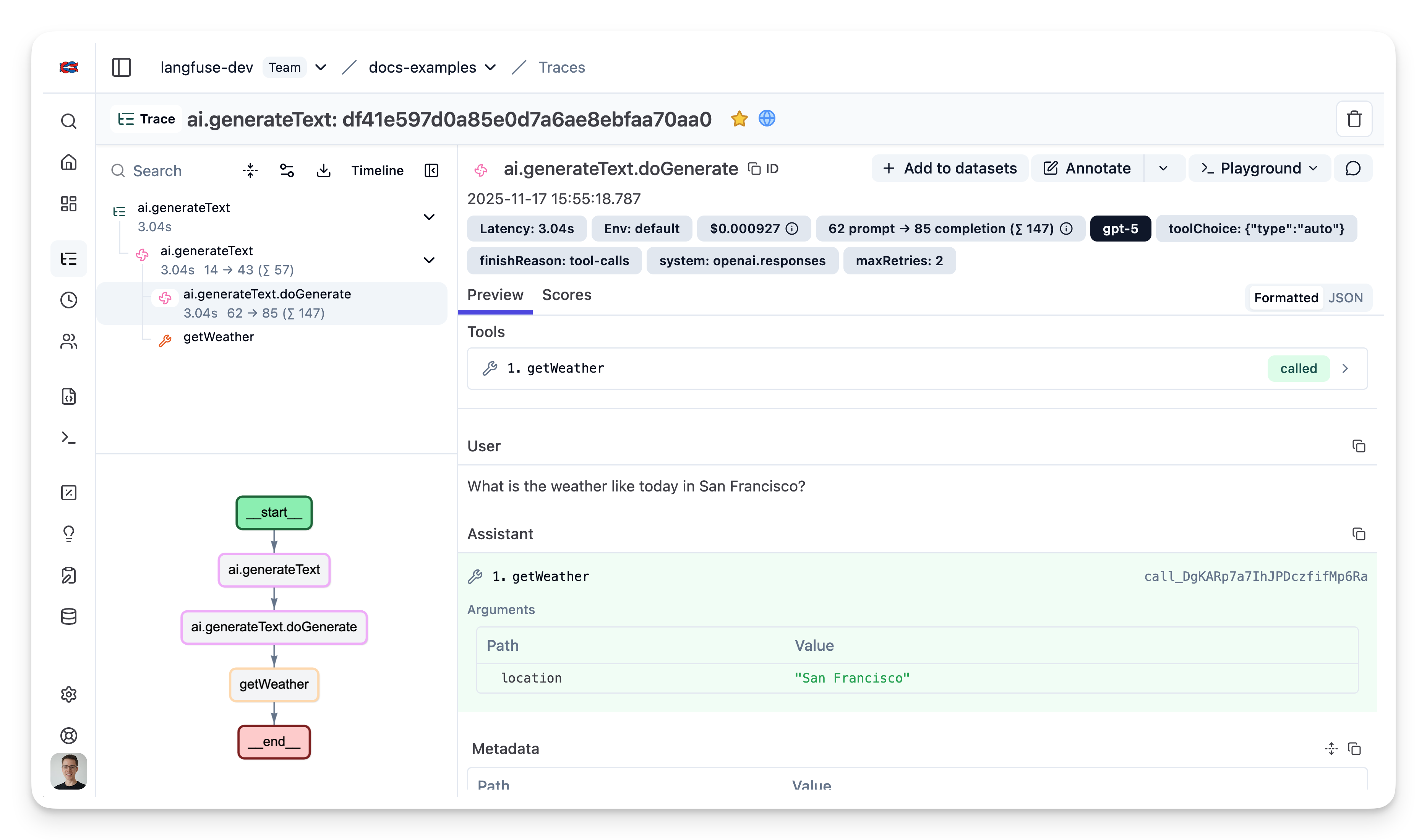

5. See traces in Langfuse

After running the workflow, you can view the complete trace in Langfuse:

Example Trace: View in Langfuse

Combining with Prompt Management

If you’re using Langfuse Prompt Management to version and manage your prompts, we recommend linking your prompts to traces. This lets you see, in each trace, which prompt version was used for each generation.

To link a prompt to a trace, pass the prompt metadata in the experimental_telemetry field:

```typescript
import { generateText } from "ai";
import { openai } from "@ai-sdk/openai";
import { LangfuseClient } from "@langfuse/client"; // Add this import (npm install @langfuse/client)

const langfuse = new LangfuseClient();

// Get current `production` version
const prompt = await langfuse.prompt.get("movie-critic");

// Insert variables into prompt template
const compiledPrompt = prompt.compile({
  someVariable: "example-variable",
});

const { text } = await generateText({
  model: openai("gpt-5"),
  prompt: compiledPrompt,
  experimental_telemetry: {
    isEnabled: true,
    metadata: {
      langfusePrompt: prompt.toJSON(), // This links the Generation to your prompt in Langfuse
    },
  },
});
```

Once linked, you’ll see the prompt version displayed in the trace details in Langfuse.
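prompt.compile() fills in the template's variables before the prompt is sent to the model. As a simplified illustration of that step, assuming the {{variable}} placeholder syntax of Langfuse text prompts, the function below does the substitution by hand. compileTemplate is hypothetical, not the Langfuse client's implementation, and leaving unknown placeholders untouched is a choice made for this sketch only.

```typescript
// Simplified stand-in for prompt.compile() on a text prompt (hypothetical).
// Replaces {{name}} placeholders (optional inner spaces) with values from vars.
function compileTemplate(
  template: string,
  vars: Record<string, string>,
): string {
  return template.replace(
    /\{\{\s*(\w+)\s*\}\}/g,
    (placeholder: string, name: string): string =>
      Object.prototype.hasOwnProperty.call(vars, name)
        ? (vars[name] as string)
        : placeholder, // unknown variable: leave the placeholder as-is
  );
}

console.log(
  compileTemplate("As a {{ persona }}, review {{movie}}.", {
    persona: "movie critic",
    movie: "Dune",
  }),
); // As a movie critic, review Dune.
```

Only own properties of vars are substituted, so a placeholder such as {{toString}} is not replaced by an inherited object method.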

Setup with Next.js

For production Next.js applications, you’ll need a few additional configurations to handle streaming responses properly and ensure traces are sent before serverless functions terminate.

This example shows how to:

- Set up OpenTelemetry instrumentation in Next.js (using the instrumentation.ts file)

- Handle streaming responses with proper span lifecycle

- Add session and user tracking to traces

- Ensure traces are flushed in serverless environments

Setup instrumentation

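Create a new file instrumentation.ts in your project root, or inside the src folder if your project uses one (the route below imports it from @/src/instrumentation). This file runs when your Next.js app starts up and initializes the OpenTelemetry setup: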

```typescript
// instrumentation.ts
import { LangfuseSpanProcessor, type ShouldExportSpan } from "@langfuse/otel";
import { NodeTracerProvider } from "@opentelemetry/sdk-trace-node";

// Optional: filter out Next.js infrastructure spans
const shouldExportSpan: ShouldExportSpan = (span) => {
  return span.otelSpan.instrumentationScope.name !== "next.js";
};

export const langfuseSpanProcessor = new LangfuseSpanProcessor({
  shouldExportSpan,
});

const tracerProvider = new NodeTracerProvider({
  spanProcessors: [langfuseSpanProcessor],
});

tracerProvider.register();
```

If you are using Next.js, please use a manual OpenTelemetry setup via the NodeTracerProvider rather than via registerOTel from @vercel/otel. This is because the @vercel/otel package does not yet support the OpenTelemetry JS SDK v2, on which the @langfuse/tracing and @langfuse/otel packages are based.
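The shouldExportSpan filter in instrumentation.ts drops a single instrumentation scope. If other libraries also emit spans you don't want in Langfuse, a small factory keeps the blocked scopes in one place. ScopedSpan is a minimal local stand-in for the { otelSpan } argument the filter receives, and dropScopes is a hypothetical helper. It should be structurally compatible with ShouldExportSpan, but treat that as an assumption. The scope names in the usage comment are examples.

```typescript
// Minimal stand-in for the argument shouldExportSpan receives; only the
// field used by the filter in instrumentation.ts is modeled.
interface ScopedSpan {
  otelSpan: { instrumentationScope: { name: string } };
}

type SpanFilter = (span: ScopedSpan) => boolean;

// Hypothetical helper: export every span except those from the given scopes.
function dropScopes(...blockedScopes: string[]): SpanFilter {
  const blocked = new Set(blockedScopes);
  return (span) => !blocked.has(span.otelSpan.instrumentationScope.name);
}

// Usage in instrumentation.ts (scope names are examples):
//   const shouldExportSpan = dropScopes("next.js", "@opentelemetry/instrumentation-http");
```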

Create API route with streaming

The example below shows a chat endpoint that uses the Langfuse observe() wrapper to create a trace, adds session and user metadata, and properly handles streaming responses. Concretely:

- observe() creates a Langfuse trace around your handler

- updateActiveTrace() adds session and user metadata for better trace organization

- forceFlush() ensures traces are sent before the serverless function terminates

- endOnExit: false keeps the observation open until the streaming response completes
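The handler reads its trace input from messages[messages.length - 1], which throws a TypeError if a request arrives with an empty messages array. Here is a defensive variant with minimal local types (PartLike and MessageLike stand in for the AI SDK's UIMessage). Unlike the handler, it looks for the most recent message with role user and joins all of its text parts. latestUserText is a hypothetical helper.

```typescript
// Minimal local stand-ins for the UIMessage fields the handler reads.
interface PartLike {
  type: string;
  text?: string;
}

interface MessageLike {
  role: string;
  parts: PartLike[];
}

// Hypothetical helper: text of the most recent user message, or undefined
// if there is none (instead of throwing on an empty array).
function latestUserText(messages: MessageLike[]): string | undefined {
  // Walk a reversed copy so the newest message is checked first.
  for (const message of [...messages].reverse()) {
    if (message.role !== "user") continue;
    const texts = message.parts
      .filter((part) => part.type === "text" && typeof part.text === "string")
      .map((part) => part.text as string);
    if (texts.length > 0) return texts.join("\n");
  }
  return undefined;
}
```

Inside the handler, this would replace the inputText lookup with const inputText = latestUserText(messages).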

```typescript
// app/api/chat/route.ts
import { convertToModelMessages, streamText, type UIMessage } from "ai";
import { after } from "next/server";
import { openai } from "@ai-sdk/openai";
import {
  observe,
  updateActiveObservation,
  updateActiveTrace,
} from "@langfuse/tracing";
import { trace } from "@opentelemetry/api";
import { langfuseSpanProcessor } from "@/src/instrumentation";

const handler = async (req: Request) => {
  const {
    messages,
    chatId,
    userId,
  }: { messages: UIMessage[]; chatId: string; userId: string } =
    await req.json();

  // Use the text of the latest message as the trace input
  const inputText = messages[messages.length - 1].parts.find(
    (part) => part.type === "text"
  )?.text;

  // Add session and user context to the trace
  updateActiveObservation({
    input: inputText,
  });

  updateActiveTrace({
    name: "chat-message",
    sessionId: chatId, // Groups related messages together
    userId, // Track which user made the request
    input: inputText,
  });

  const result = streamText({
    model: openai("gpt-5.1"),
    // Convert UI messages into the model message format streamText expects
    messages: await convertToModelMessages(messages),
    experimental_telemetry: {
      isEnabled: true,
    },
    onFinish: async (event) => {
      // Update trace with final output after stream completes
      updateActiveObservation({
        output: event.content,
      });
      updateActiveTrace({
        output: event.content,
      });

      // End span manually after stream has finished
      trace.getActiveSpan()?.end();
    },
    onError: async ({ error }) => {
      // onError receives an event object; record a readable error message
      const message = error instanceof Error ? error.message : String(error);
      updateActiveObservation({
        output: message,
        level: "ERROR",
      });
      updateActiveTrace({
        output: message,
      });

      // Manually end the span since we're streaming
      trace.getActiveSpan()?.end();
    },
  });

  // Critical for serverless: flush traces before function terminates
  after(async () => await langfuseSpanProcessor.forceFlush());

  return result.toUIMessageStreamResponse();
};

// Wrap handler with observe() to create a Langfuse trace
export const POST = observe(handler, {
  name: "handle-chat-message",
  endOnExit: false, // Don't end observation until stream finishes
});
```

Usage together with other observability tools

The Vercel AI SDK uses OpenTelemetry for tracing (instrumentation scope: ai). If you’re also using Sentry, Datadog, or other OTEL-based tools, you may need additional configuration to avoid conflicts. See Using Langfuse with an Existing OpenTelemetry Setup.

Interoperability with the JS/TS SDK

You can use this integration together with the Langfuse SDKs to add additional attributes or group observations into a single trace.

The Context Manager wraps your instrumented code in a callback passed to startActiveObservation(), which lets you add additional attributes to the trace. Any observation created inside the callback is automatically nested under the active observation, and the observation is ended when the callback finishes.

```typescript
import { generateText } from "ai";
import { openai } from "@ai-sdk/openai";
import { startActiveObservation, propagateAttributes } from "@langfuse/tracing";

await startActiveObservation("context-manager", async (span) => {
  span.update({
    input: { query: "What is the capital of France?" },
  });

  let answer = "";

  // Propagate user, session, metadata, tags, and version to all child observations
  await propagateAttributes(
    {
      userId: "user-123",
      sessionId: "session-123",
      metadata: {
        source: "api",
        region: "us-east-1",
      },
      tags: ["api", "user"],
      version: "1.0.0",
    },
    async () => {
      // YOUR CODE HERE
      const { text } = await generateText({
        model: openai("gpt-5"),
        prompt: "What is the capital of France?",
        experimental_telemetry: { isEnabled: true },
      });
      answer = text;
    }
  );

  // Record the model's actual answer as the observation output
  span.update({ output: answer });
});
```

Learn more about using the Context Manager in the Langfuse SDK instrumentation docs.
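The nesting and lifecycle rules described for the Context Manager fit in a few lines: observations created inside the callback nest under the active one, and the observation ends when the callback finishes. This is a toy model, not the Langfuse SDK. withObservation and ToyObservation are hypothetical, and the module-level stack is only correct for sequential code. The real SDK relies on OpenTelemetry context propagation, which in Node.js typically keeps concurrent async callbacks apart.

```typescript
// Toy model (hypothetical, not the Langfuse SDK) of callback-scoped observations.
interface ToyObservation {
  name: string;
  parent: string | undefined;
  ended: boolean;
}

const observations: ToyObservation[] = [];
const openStack: ToyObservation[] = []; // innermost open observation is last

async function withObservation<T>(
  name: string,
  fn: (obs: ToyObservation) => Promise<T>,
): Promise<T> {
  // Nest under whatever observation is currently open (if any).
  const parent = openStack[openStack.length - 1];
  const obs: ToyObservation = { name, parent: parent?.name, ended: false };
  observations.push(obs);
  openStack.push(obs);
  try {
    return await fn(obs);
  } finally {
    // Runs whether fn resolves or throws: the observation always ends.
    openStack.pop();
    obs.ended = true;
  }
}
```

Because the observation is ended in a finally block, a failing step still closes its observation before the error propagates to the caller.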

Troubleshooting

No traces appearing

First, enable debug mode in the JS/TS SDK:

```bash
export LANGFUSE_LOG_LEVEL="DEBUG"
```

Then run your application and check the debug logs:

- OTel spans appear in the logs: Your application is instrumented correctly but traces are not reaching Langfuse. To resolve this:

  - Call forceFlush() at the end of your application to ensure all traces are exported. This is especially important in short-lived environments like serverless functions.

  - Verify that you are using the correct API keys and base URL.

- No OTel spans in the logs: Your application is not instrumented correctly. Make sure the instrumentation runs before your application code.
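For short-lived scripts, one way to make the forceFlush() advice hard to forget is to wrap the work in a helper that flushes in a finally block, so spans are exported even when the task throws. runAndFlush and the Flushable interface are hypothetical. The sketch assumes you keep a reference to your span processor (as instrumentation.ts does with langfuseSpanProcessor); span processors expose a forceFlush() method that returns a Promise.

```typescript
// Anything that can flush buffered spans, e.g. a LangfuseSpanProcessor instance.
interface Flushable {
  forceFlush(): Promise<void>;
}

// Hypothetical helper: run a task, then always flush, even if the task throws.
async function runAndFlush<T>(
  processor: Flushable,
  task: () => Promise<T>,
): Promise<T> {
  try {
    return await task();
  } finally {
    await processor.forceFlush();
  }
}

// Usage in a script:
//   const processor = new LangfuseSpanProcessor();
//   // ...register it with NodeSDK as in step 3...
//   await runAndFlush(processor, () => generateText({ /* ... */ }));
```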

Unwanted observations in Langfuse

The Langfuse SDK is based on OpenTelemetry. Other libraries in your application may emit OTel spans that are not relevant to you. These still count toward your billable units, so you should filter them out. See Unwanted spans in Langfuse for details.

Missing attributes

Some attributes may be stored in the metadata object of the observation rather than being mapped to the Langfuse data model. If a mapping or integration does not work as expected, please raise an issue on GitHub.

Next Steps

Once you have instrumented your code, you can manage, evaluate and debug your application: