Evaluate Langfuse LLM Traces with UpTrain

UpTrain’s open-source library offers a series of evaluation metrics to assess LLM applications.

This notebook demonstrates how to run UpTrain’s evaluation metrics on the traces generated by Langfuse. In Langfuse you can then monitor these scores over time or use them to compare different experiments.

Setup

You can get your Langfuse API keys from your Langfuse project settings and your OpenAI API key from the OpenAI platform.

%pip install langfuse datasets uptrain litellm openai rouge_score --upgrade

import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_BASE_URL"] = "https://cloud.langfuse.com" # 🇪🇺 EU region
# os.environ["LANGFUSE_BASE_URL"] = "https://us.cloud.langfuse.com" # 🇺🇸 US region

# Your openai key
os.environ["OPENAI_API_KEY"] = "sk-proj-..."

Sample Dataset

We use this dataset to represent traces that you have logged to Langfuse. In a production environment, you would use your own data.

data = [

{

"question": "What are the symptoms of a heart attack?",

"context": "A heart attack, or myocardial infarction, occurs when the blood supply to the heart muscle is blocked. Chest pain is a good symptom of heart attack, though there are many others.",

"response": "Symptoms of a heart attack may include chest pain or discomfort, shortness of breath, nausea, lightheadedness, and pain or discomfort in one or both arms, the jaw, neck, or back."

},

{

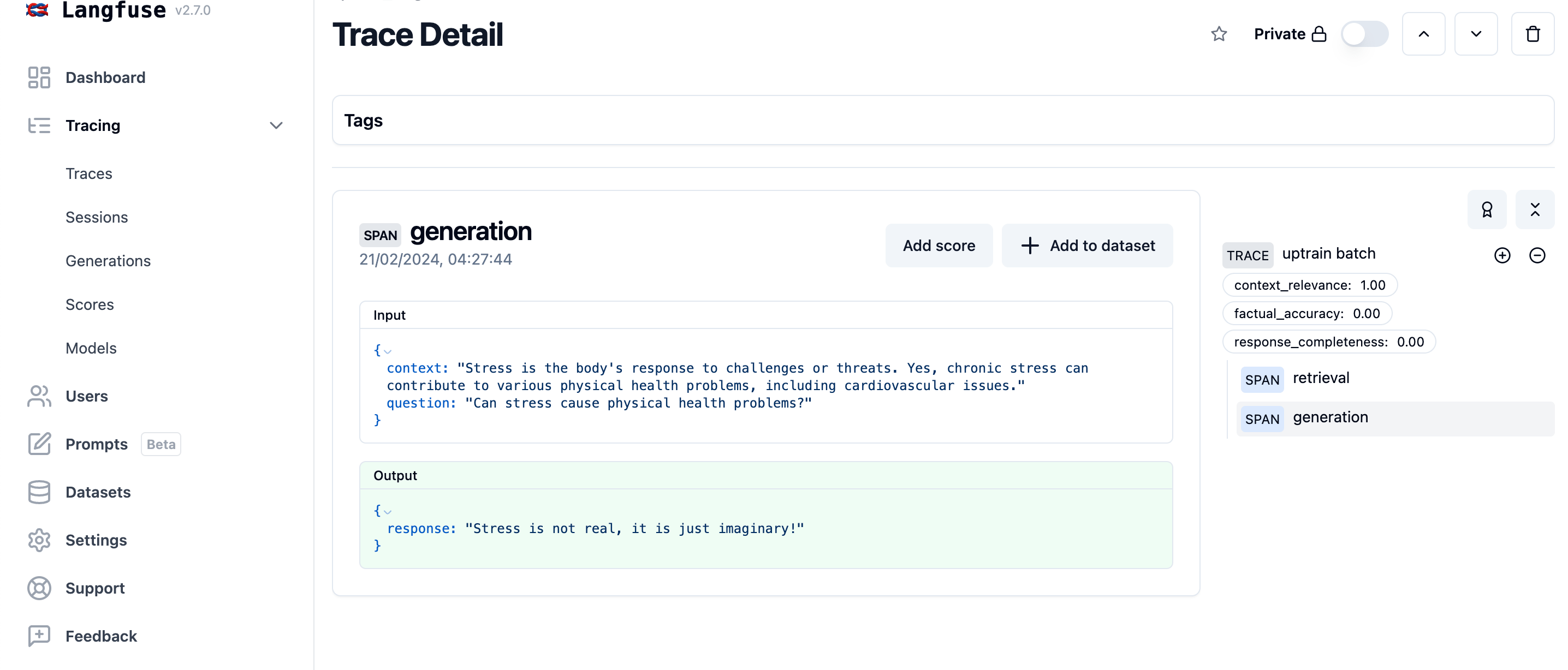

"question": "Can stress cause physical health problems?",

"context": "Stress is the body's response to challenges or threats. Yes, chronic stress can contribute to various physical health problems, including cardiovascular issues.",

"response": "Yes, chronic stress can contribute to various physical health problems, including cardiovascular issues, and a weakened immune system."

},

{

'question': "What are the symptoms of a heart attack?",

'context': "A heart attack, or myocardial infarction, occurs when the blood supply to the heart muscle is blocked. Symptoms of a heart attack may include chest pain or discomfort, shortness of breath and nausea.",

'response': "Heart attack symptoms are usually just indigestion and can be relieved with antacids."

},

{

'question': "Can stress cause physical health problems?",

'context': "Stress is the body's response to challenges or threats. Yes, chronic stress can contribute to various physical health problems, including cardiovascular issues.",

'response': "Stress is not real, it is just imaginary!"

}

]Run Evaluations using UpTrain

We use the following three metrics from UpTrain’s open-source library:

- Context Relevance: Evaluates how relevant the retrieved context is to the question.
- Factual Accuracy: Evaluates whether the generated response is factually correct and grounded in the provided context.
- Response Completeness: Evaluates whether the response answers all aspects of the question.

You can find the complete list of UpTrain’s supported metrics in the UpTrain documentation.
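Each of these checks reads specific fields from every record. As a minimal sketch, the helper below checks that each record carries non-empty `question`, `context`, and `response` fields before the data is sent for evaluation. The name `find_incomplete_records` is hypothetical and not part of UpTrain, the example records are made up, and the sketch assumes that the three keys used by the sample dataset are all that these three checks need.

```python
# Keys used by the sample dataset in this notebook (assumed sufficient for the three checks).
REQUIRED_KEYS = ("question", "context", "response")


def find_incomplete_records(records, required_keys=REQUIRED_KEYS):
    """Return (index, missing_keys) for every record lacking a required, non-empty field."""
    problems = []
    for index, record in enumerate(records):
        # A key counts as missing if it is absent or maps to an empty value.
        missing = [key for key in required_keys if not record.get(key)]
        if missing:
            problems.append((index, missing))
    return problems


# Illustrative records only; the second one has an empty context.
example_records = [
    {"question": "example question 1", "context": "example context", "response": "example response"},
    {"question": "example question 2", "context": "", "response": "example response"},
]
print(find_incomplete_records(example_records))
```

An empty result means every record has all three fields, so the list can be passed on for evaluation as-is.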

from uptrain import EvalLLM, Evals
import json
import pandas as pd

eval_llm = EvalLLM(openai_api_key=os.environ["OPENAI_API_KEY"])

res = eval_llm.evaluate(
    data=data,
    checks=[Evals.CONTEXT_RELEVANCE, Evals.FACTUAL_ACCURACY, Evals.RESPONSE_COMPLETENESS]
)

2025-06-17 10:43:14.568 | WARNING | uptrain.operators.language.llm:fetch_responses:268 - Detected a running event loop, scheduling requests in a separate thread.
100%|██████████| 4/4 [00:01<00:00, 2.85it/s]
/Users/jannik/Documents/GitHub/langfuse-docs/.venv/lib/python3.13/site-packages/uptrain/operators/language/llm.py:271: RuntimeWarning: coroutine 'LLMMulticlient.async_fetch_responses' was never awaited
with ThreadPoolExecutor(max_workers=1) as executor:
RuntimeWarning: Enable tracemalloc to get the object allocation traceback
2025-06-17 10:43:15.996 | WARNING | uptrain.operators.language.llm:fetch_responses:268 - Detected a running event loop, scheduling requests in a separate thread.
100%|██████████| 4/4 [00:01<00:00, 2.74it/s]
2025-06-17 10:43:17.464 | WARNING | uptrain.operators.language.llm:fetch_responses:268 - Detected a running event loop, scheduling requests in a separate thread.
100%|██████████| 4/4 [00:03<00:00, 1.19it/s]
2025-06-17 10:43:20.860 | WARNING | uptrain.operators.language.llm:fetch_responses:268 - Detected a running event loop, scheduling requests in a separate thread.
100%|██████████| 4/4 [00:01<00:00, 3.13it/s]
2025-06-17 10:43:22.148 | INFO | uptrain.framework.evalllm:evaluate:376 - Local server not running, start the server to log data and visualize in the dashboard!

Using Langfuse

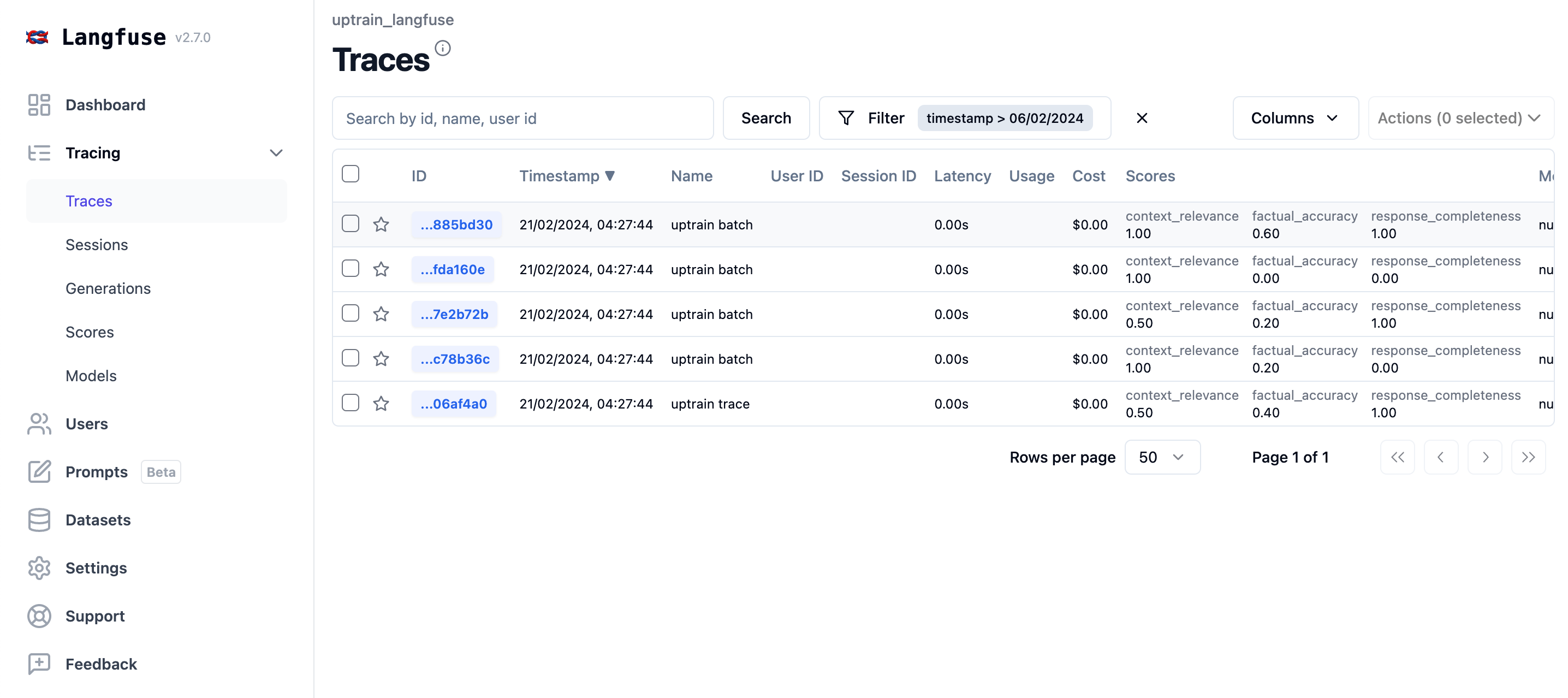

There are two main ways to run evaluations:

- Score each Trace (in development): This means you will run the UpTrain evaluations for each trace item.
- Score in Batches (in production): In this method we will simulate fetching production traces on a periodic basis to score them using the UpTrain evaluators. Often, you’ll want to sample the traces instead of scoring all of them to control evaluation costs.
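The cost argument for sampling can be made concrete with a small sketch. The helper below picks a random fraction of the fetched traces; `sample_traces`, `sample_rate`, and `seed` are hypothetical names introduced here for illustration, and the trace IDs are placeholders rather than real Langfuse data.

```python
import random


def sample_traces(traces, sample_rate, seed=None):
    """Return a random subset holding roughly `sample_rate` of the traces (at least one if any exist)."""
    if not 0 < sample_rate <= 1:
        raise ValueError("sample_rate must be in (0, 1]")
    if not traces:
        return []
    sample_size = max(1, round(len(traces) * sample_rate))
    # A dedicated Random instance keeps the sampling reproducible when a seed is given.
    rng = random.Random(seed)
    return rng.sample(traces, sample_size)


# Placeholder trace IDs standing in for traces fetched from Langfuse.
trace_ids = [f"trace-{i}" for i in range(20)]
picked = sample_traces(trace_ids, sample_rate=0.25, seed=42)
print(len(picked))  # 5 of the 20 traces are selected
```

Since each sampled trace is run through every check, evaluating a quarter of the traces should cut the evaluation cost to roughly a quarter as well.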

Development: Score each trace while it’s created

from langfuse import get_client

langfuse = get_client()

# Verify connection
if langfuse.auth_check():
    print("Langfuse client is authenticated and ready!")
else:
    print("Authentication failed. Please check your credentials and host.")

Langfuse client is authenticated and ready!

We mock the instrumentation of your application by using the sample dataset. See the quickstart to integrate Langfuse with your application.

# start a new trace when you get a question
question = data[0]['question']
context = data[0]['context']
response = data[0]['response']

with langfuse.start_as_current_observation(as_type="span", name="uptrain trace") as trace:
    # Store trace_id for later use
    trace_id = trace.trace_id

    # retrieve the relevant chunks
    # chunks = get_similar_chunks(question)

    # pass it as span
    with trace.start_as_current_observation(
        name="retrieval",
        input={'question': question},
        output={'context': context}
    ):
        pass

    # use llm to generate an answer with the chunks
    # answer = get_response_from_llm(question, chunks)
    with trace.start_as_current_observation(
        name="generation",
        input={'question': question, 'context': context},
        output={'response': response}
    ):
        pass

We reuse the scores previously calculated for the traces in the sample dataset. In development, you would run the UpTrain evaluations for the single trace as it’s created.

langfuse.create_score(name='context_relevance', value=res[0]['score_context_relevance'], trace_id=trace_id)

langfuse.create_score(name='factual_accuracy', value=res[0]['score_factual_accuracy'], trace_id=trace_id)

langfuse.create_score(name='response_completeness', value=res[0]['score_response_completeness'], trace_id=trace_id)
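The three calls above follow one pattern: every `score_<metric>` key in an UpTrain result row becomes a Langfuse score named `<metric>`. A minimal sketch of that mapping is shown below. `extract_scores` is a hypothetical helper, the example row is made up, and skipping `None` values rests on the assumption that a check may occasionally return no score.

```python
def extract_scores(result_row, prefix="score_"):
    """Map metric name -> numeric score for every `score_*` key that has a value."""
    scores = {}
    for key, value in result_row.items():
        if key.startswith(prefix) and value is not None:
            # Strip the prefix so "score_factual_accuracy" becomes "factual_accuracy".
            scores[key[len(prefix):]] = float(value)
    return scores


# Illustrative row shaped like one entry of `res`; values are made up.
example_row = {
    "question": "example question",
    "score_context_relevance": 1.0,
    "explanation_context_relevance": "example explanation",
    "score_factual_accuracy": None,
    "score_response_completeness": 0.5,
}
print(extract_scores(example_row))

# With a real result row and trace, the mapping could be sent to Langfuse like this:
# for name, value in extract_scores(res[0]).items():
#     langfuse.create_score(name=name, value=value, trace_id=trace_id)
```

Deriving the names from the keys keeps the scoring code unchanged when checks are added to or removed from the evaluation.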

Production: Add scores to traces in batches

To simulate a production environment, we will log our sample dataset to Langfuse.

for interaction in data:
    with langfuse.start_as_current_observation(as_type="span", name="uptrain batch") as trace:
        with trace.start_as_current_observation(
            name="retrieval",
            input={'question': interaction['question']},
            output={'context': interaction['context']}
        ):
            pass

        with trace.start_as_current_observation(
            name="generation",
            input={'question': interaction['question'], 'context': interaction['context']},
            output={'response': interaction['response']}
        ):
            pass

# wait until the Langfuse SDK has processed all events before retrieving them in the next step
langfuse.flush()

We can now retrieve the traces like regular production data and evaluate them using UpTrain.

def get_traces(name=None, limit=10000, user_id=None):
    all_data = []
    page = 1

    # Fetch page after page until a page comes back empty or the limit is exceeded
    while True:
        response = langfuse.api.trace.list(
            name=name, page=page, user_id=user_id, order_by=None
        )
        if not response.data:
            break
        page += 1
        all_data.extend(response.data)
        if len(all_data) > limit:
            break

    return all_data[:limit]

Optional: create a random sample to reduce evaluation costs.

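from random import sample

NUM_TRACES_TO_SAMPLE = 4

traces = get_traces(name="uptrain batch")

# sample() raises a ValueError if asked for more items than exist, so cap the sample size
traces_sample = sample(traces, min(NUM_TRACES_TO_SAMPLE, len(traces)))

Convert the data into a dataset to be used for evaluation with UpTrain.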

evaluation_batch = {
    "question": [],
    "context": [],
    "response": [],
    "trace_id": [],
}

for t in traces_sample:
    observations = [langfuse.api.observations.get(o) for o in t.observations]
    for o in observations:
        if o.name == 'retrieval':
            question = o.input['question']
            context = o.output['context']
        if o.name == 'generation':
            # read the response from this trace's generation observation
            response = o.output['response']
    evaluation_batch['question'].append(question)
    evaluation_batch['context'].append(context)
    evaluation_batch['response'].append(response)
    evaluation_batch['trace_id'].append(t.id)

# turn the dict of columns into a list of per-trace records
data = [dict(zip(evaluation_batch, t)) for t in zip(*evaluation_batch.values())]

Evaluate the batch using UpTrain.

res = eval_llm.evaluate(
    data=data,
    checks=[Evals.CONTEXT_RELEVANCE, Evals.FACTUAL_ACCURACY, Evals.RESPONSE_COMPLETENESS]
)

2025-06-17 10:46:35.647 | WARNING | uptrain.operators.language.llm:fetch_responses:268 - Detected a running event loop, scheduling requests in a separate thread.
100%|██████████| 4/4 [00:01<00:00, 3.06it/s]
/Users/jannik/Documents/GitHub/langfuse-docs/.venv/lib/python3.13/site-packages/uptrain/operators/language/llm.py:271: RuntimeWarning: coroutine 'LLMMulticlient.async_fetch_responses' was never awaited
with ThreadPoolExecutor(max_workers=1) as executor:
RuntimeWarning: Enable tracemalloc to get the object allocation traceback
2025-06-17 10:46:36.963 | WARNING | uptrain.operators.language.llm:fetch_responses:268 - Detected a running event loop, scheduling requests in a separate thread.
100%|██████████| 4/4 [00:02<00:00, 1.88it/s]
2025-06-17 10:46:39.097 | WARNING | uptrain.operators.language.llm:fetch_responses:268 - Detected a running event loop, scheduling requests in a separate thread.
100%|██████████| 4/4 [00:03<00:00, 1.12it/s]
2025-06-17 10:46:42.703 | WARNING | uptrain.operators.language.llm:fetch_responses:268 - Detected a running event loop, scheduling requests in a separate thread.
100%|██████████| 4/4 [00:01<00:00, 3.87it/s]
2025-06-17 10:46:43.749 | INFO | uptrain.framework.evalllm:evaluate:376 - Local server not running, start the server to log data and visualize in the dashboard!

Add the trace_id back to the dataset as it was omitted in the previous step to be compatible with UpTrain.

df = pd.DataFrame(res)

# add the langfuse trace_id to the result dataframe
df["trace_id"] = [d['trace_id'] for d in data]

df.head()

|  | question | context | response | trace_id | score_context_relevance | explanation_context_relevance | score_factual_accuracy | explanation_factual_accuracy | score_response_completeness | explanation_response_completeness |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Can stress cause physical health problems? | Stress is the body's response to challenges or... | Symptoms of a heart attack may include chest p... | a105ba2b-337f-4af7-a367-663df325b44d | 0.5 | {\n "Reasoning": "The given context can giv... | 0.0 | {\n "Result": [\n {\n "Fa... | 0.0 | {\n "Reasoning": "The given response does n... |
| 1 | What are the symptoms of a heart attack? | A heart attack, or myocardial infarction, occu... | Symptoms of a heart attack may include chest p... | 66730079-4f83-40ff-9eb6-2fbf07b79bf1 | 1.0 | {\n "Reasoning": "The given context provide... | 0.6 | {\n "Result": [\n {\n "Fa... | 1.0 | {\n "Reasoning": "The given response is com... |
| 2 | Can stress cause physical health problems? | Stress is the body's response to challenges or... | Symptoms of a heart attack may include chest p... | 7206b436f865f4a5fe892f2b5ec4cbe6 | 0.5 | {\n "Reasoning": "The given context can giv... | 0.0 | {\n "Result": [\n {\n "Fa... | 0.0 | {\n "Reasoning": "The given response does n... |
| 3 | Can stress cause physical health problems? | Stress is the body's response to challenges or... | Symptoms of a heart attack may include chest p... | ec78d1929443997d1dbbdef2822b37dd | 1.0 | {\n "Reasoning": "The given context can ans... | 0.0 | {\n "Result": [\n {\n "Fa... | 0.0 | {\n "Reasoning": "The given response does n... |
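Before writing scores back, it can help to condense a batch into one number per metric. The sketch below averages every `score_*` column over a list of result rows, using the four rows of scores from the table above as input. `mean_scores` is a hypothetical helper that works on plain dictionaries, so it assumes the rows are available as a list of dicts such as `res` or `df.to_dict("records")`.

```python
from statistics import mean


def mean_scores(result_rows, prefix="score_"):
    """Average each `score_*` column over all rows, ignoring missing values."""
    values_by_metric = {}
    for row in result_rows:
        for key, value in row.items():
            if key.startswith(prefix) and value is not None:
                values_by_metric.setdefault(key[len(prefix):], []).append(float(value))
    return {metric: mean(values) for metric, values in values_by_metric.items()}


# Scores copied from the four rows of the result table above.
table_rows = [
    {"score_context_relevance": 0.5, "score_factual_accuracy": 0.0, "score_response_completeness": 0.0},
    {"score_context_relevance": 1.0, "score_factual_accuracy": 0.6, "score_response_completeness": 1.0},
    {"score_context_relevance": 0.5, "score_factual_accuracy": 0.0, "score_response_completeness": 0.0},
    {"score_context_relevance": 1.0, "score_factual_accuracy": 0.0, "score_response_completeness": 0.0},
]
for metric, average in mean_scores(table_rows).items():
    print(f"{metric}: {average:.2f}")
```

For this batch the averages come out at 0.75 for context relevance, 0.15 for factual accuracy, and 0.25 for response completeness.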

Now that we have the evaluations, we can add them back to the traces in Langfuse as scores.

for _, row in df.iterrows():
    for metric_name in ["context_relevance", "factual_accuracy", "response_completeness"]:
        langfuse.create_score(
            name=metric_name,
            value=row["score_" + metric_name],
            trace_id=row["trace_id"]
        )

In Langfuse, you can now see the scores for each trace and monitor them over time.
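The same result rows can also be used to pick out traces that deserve a closer look. As a rough sketch, the helper below lists, for each trace, the metrics that scored below a threshold. `traces_below_threshold`, the threshold of 0.5, and the trace IDs `trace-a` and `trace-b` are all assumptions made for illustration; the score values are taken from two rows of the result table.

```python
def traces_below_threshold(result_rows, threshold=0.5, prefix="score_"):
    """Return {trace_id: [metric, ...]} for rows with at least one score below the threshold."""
    flagged = {}
    for row in result_rows:
        low_metrics = sorted(
            key[len(prefix):]
            for key, value in row.items()
            if key.startswith(prefix) and value is not None and value < threshold
        )
        if low_metrics:
            flagged[row["trace_id"]] = low_metrics
    return flagged


# Placeholder trace IDs with scores shaped like the UpTrain results.
rows = [
    {"trace_id": "trace-a", "score_context_relevance": 0.5,
     "score_factual_accuracy": 0.0, "score_response_completeness": 0.0},
    {"trace_id": "trace-b", "score_context_relevance": 1.0,
     "score_factual_accuracy": 0.6, "score_response_completeness": 1.0},
]
print(traces_below_threshold(rows))
```

Here only `trace-a` is flagged, for factual accuracy and response completeness, while `trace-b` passes on all three metrics.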