Get Started with Tracing

This guide walks you through ingesting your first trace into Langfuse. If you’re looking to understand what tracing is and why it matters, check out the Observability Overview first. For details on how traces are structured in Langfuse and how tracing works in the background, see Core Concepts.

Get API keys

- Create a Langfuse account or self-host Langfuse.

- Create new API credentials in the project settings.
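Every integration below reads these credentials from the same three environment variables: LANGFUSE_SECRET_KEY, LANGFUSE_PUBLIC_KEY, and LANGFUSE_BASE_URL. A quick pre-flight check can catch a missing or swapped key before you run an example. The sketch below is a hypothetical helper (check_langfuse_env and describe_region are not part of the Langfuse SDK). It assumes the key prefixes used in this guide’s placeholders (sk-lf- and pk-lf-) and treats any URL other than the two cloud regions as a self-hosted instance.

```python
import os
from typing import List, Mapping

# Cloud endpoints listed in this guide; any other URL is assumed to be self-hosted.
CLOUD_URLS = {
    "https://cloud.langfuse.com": "EU",
    "https://us.cloud.langfuse.com": "US",
}


def check_langfuse_env(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return human-readable problems; an empty list means the config looks usable.

    Hypothetical helper for illustration only, not part of the Langfuse SDK.
    This guide sets all three variables explicitly, so all three are required here.
    """
    problems: List[str] = []
    secret = env.get("LANGFUSE_SECRET_KEY", "")
    public = env.get("LANGFUSE_PUBLIC_KEY", "")
    base_url = env.get("LANGFUSE_BASE_URL", "")

    if not secret:
        problems.append("LANGFUSE_SECRET_KEY is not set")
    elif not secret.startswith("sk-lf-"):
        problems.append("LANGFUSE_SECRET_KEY should start with 'sk-lf-'")

    if not public:
        problems.append("LANGFUSE_PUBLIC_KEY is not set")
    elif not public.startswith("pk-lf-"):
        problems.append("LANGFUSE_PUBLIC_KEY should start with 'pk-lf-'")

    if not base_url:
        problems.append("LANGFUSE_BASE_URL is not set")
    elif not base_url.startswith(("https://", "http://")):
        problems.append("LANGFUSE_BASE_URL should be a full http(s) URL")

    return problems


def describe_region(base_url: str) -> str:
    """Label the target as 'EU', 'US', or 'self-hosted' (trailing slash ignored)."""
    return CLOUD_URLS.get(base_url.rstrip("/"), "self-hosted")
```

Call check_langfuse_env() at startup and print any returned problems; a swapped public and secret key shows up as two prefix errors.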

Ingest your first trace

If you’re using one of our supported integrations, following its specific guide is the fastest way to get started with minimal code changes. For more control, you can instrument your application directly using the Python or JS/TS SDKs.

Langfuse’s OpenAI integration is a drop-in replacement for the OpenAI client that automatically records your model calls without changing how you write code. If you already use the OpenAI Python SDK, you can start using Langfuse with minimal changes to your code.
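Conceptually, a drop-in wrapper keeps the client’s interface unchanged and records each call on the way through, which is why existing call sites keep working. The toy sketch below illustrates only that idea; it is not how langfuse.openai is implemented, and FakeCompletions, wrap_create, and the recorded field names are hypothetical.

```python
import functools
from typing import Any, Callable, Dict, List


class FakeCompletions:
    """Hypothetical stand-in for an LLM client's completions resource."""

    def create(self, model: str, messages: List[Dict[str, str]], **kwargs: Any) -> str:
        # Pretend the model echoes the last message in upper case.
        return messages[-1]["content"].upper()


def wrap_create(create: Callable[..., Any], sink: List[Dict[str, Any]]) -> Callable[..., Any]:
    """Return a function with the same call signature that also records each call."""

    @functools.wraps(create)
    def traced_create(*args: Any, **kwargs: Any) -> Any:
        output = create(*args, **kwargs)
        # Record model, input, and output, similar in spirit to what a
        # tracing wrapper forwards to its backend.
        sink.append({
            "model": kwargs.get("model"),
            "input": kwargs.get("messages"),
            "output": output,
        })
        return output

    return traced_create


recorded: List[Dict[str, Any]] = []
completions = FakeCompletions()
# Patch the instance so existing code keeps calling completions.create(...)
completions.create = wrap_create(completions.create, recorded)

# The call site is unchanged; the record is a side effect.
result = completions.create(model="gpt-4o", messages=[{"role": "user", "content": "hi"}])
```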

Start by installing the Langfuse SDK. It includes the wrapped OpenAI client and sends traces in the background.

pip install langfuse

Set your Langfuse credentials as environment variables so the SDK knows which project to write to.

LANGFUSE_SECRET_KEY = "sk-lf-..."
LANGFUSE_PUBLIC_KEY = "pk-lf-..."
LANGFUSE_BASE_URL = "https://cloud.langfuse.com" # 🇪🇺 EU region
# LANGFUSE_BASE_URL = "https://us.cloud.langfuse.com" # 🇺🇸 US region

Swap the regular OpenAI import for Langfuse’s OpenAI drop-in. It behaves like the regular OpenAI client while also recording each call for you.

from langfuse.openai import openai

Use the OpenAI SDK as you normally would. The wrapper captures the prompt, model and output and forwards everything to Langfuse.

completion = openai.chat.completions.create(
    name="test-chat",
    model="gpt-4o",
    messages=[
        {"role": "system", "content": "You are a very accurate calculator. You output only the result of the calculation."},
        {"role": "user", "content": "1 + 1 = "},
    ],
    metadata={"someMetadataKey": "someValue"},
)

Langfuse’s JS/TS OpenAI SDK wraps the official client so your model calls are automatically traced and sent to Langfuse. If you already use the OpenAI JavaScript SDK, you can start using Langfuse with minimal changes to your code.

First install the Langfuse OpenAI wrapper. It extends the official client to send traces in the background.

Install package

npm install @langfuse/openai

Add credentials

Add your Langfuse credentials to your environment variables so the SDK knows which project to write to.

LANGFUSE_SECRET_KEY = "sk-lf-..."
LANGFUSE_PUBLIC_KEY = "pk-lf-..."
LANGFUSE_BASE_URL = "https://cloud.langfuse.com" # 🇪🇺 EU region
# LANGFUSE_BASE_URL = "https://us.cloud.langfuse.com" # 🇺🇸 US region

Initialize OpenTelemetry

Install the OpenTelemetry Node SDK, which the Langfuse integration uses under the hood to capture the data from each OpenAI call, together with the Langfuse OpenTelemetry package that provides the LangfuseSpanProcessor.

npm install @opentelemetry/sdk-node @langfuse/otel

Next, initialize the Node SDK. You can do this either in a dedicated instrumentation file or directly at the top of your main file.

The inline setup is the simplest way to get started. It works well for projects where your main file is executed first and import order is straightforward.

Now initialize the LangfuseSpanProcessor and start the SDK. The LangfuseSpanProcessor is the component that takes the data collected by OpenTelemetry and sends it to your Langfuse project.

Important: start the SDK before initializing the logic that needs to be traced to avoid losing data.

import { NodeSDK } from "@opentelemetry/sdk-node";
import { LangfuseSpanProcessor } from "@langfuse/otel";

// Register the Langfuse span processor so finished spans are sent to Langfuse
const sdk = new NodeSDK({
  spanProcessors: [new LangfuseSpanProcessor()],
});

// Start tracing before any code that should be traced runs
sdk.start();

The instrumentation file is often preferred when you’re using frameworks that have a complex startup order (Next.js, serverless, bundlers) or if you want a clean, predictable place where tracing is always initialized first.
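Why does initialization order matter? Before the OpenTelemetry SDK starts, the global tracer is a no-op, so spans created in that window are not recorded and never reach the LangfuseSpanProcessor. The stdlib-only toy below models that behavior in Python; ToyTracerProvider, ToySpan, and their methods are hypothetical stand-ins, not the OpenTelemetry API.

```python
from typing import Callable, List


class ToySpan:
    def __init__(self, name: str, recording: bool) -> None:
        self.name = name
        self.recording = recording


class ToyTracerProvider:
    """Hypothetical model of a global tracer provider that starts out as a no-op."""

    def __init__(self) -> None:
        self.processors: List[Callable[[ToySpan], None]] = []
        self.started = False

    def start(self, processor: Callable[[ToySpan], None]) -> None:
        # Analogous to sdk.start(): register the processor and begin recording.
        self.processors.append(processor)
        self.started = True

    def run_traced(self, name: str) -> ToySpan:
        # Before start(), spans are non-recording and silently dropped.
        span = ToySpan(name, recording=self.started)
        if span.recording:
            for processor in self.processors:
                processor(span)  # hand the finished span to each processor
        return span


exported: List[str] = []
provider = ToyTracerProvider()

provider.run_traced("call-before-start")  # lost: nothing is recording yet
provider.start(lambda span: exported.append(span.name))
provider.run_traced("call-after-start")  # forwarded to the processor
```

Putting sdk.start() in a file that is imported first guarantees the "after start" case for every call you want traced.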

Create an instrumentation.ts file that sets up the OpenTelemetry SDK, which collects data about each OpenAI call. The LangfuseSpanProcessor is the component that takes that collected data and sends it to your Langfuse project.

// instrumentation.ts
import { NodeSDK } from "@opentelemetry/sdk-node";
import { LangfuseSpanProcessor } from "@langfuse/otel";

const sdk = new NodeSDK({
  spanProcessors: [new LangfuseSpanProcessor()],
});

sdk.start();

In your main file, import the instrumentation.ts file first so all later imports run with tracing enabled.

import "./instrumentation"; // Must be the first import

Wrap your normal OpenAI client. From now on, each OpenAI request is automatically collected and forwarded to Langfuse.

Wrap OpenAI client

import OpenAI from "openai";
import { observeOpenAI } from "@langfuse/openai";

// Wrap the official client; every request made through it is traced
const openai = observeOpenAI(new OpenAI());

const res = await openai.chat.completions.create({
  messages: [{ role: "user", content: "Tell me a story about a dog." }],
  model: "gpt-4o",
  max_tokens: 300,
});

console.log(res.choices[0].message.content);

Langfuse’s Vercel AI SDK integration uses OpenTelemetry to automatically trace your AI calls. If you already use the Vercel AI SDK, you can start using Langfuse with minimal changes to your code.

Install packages

Install the Vercel AI SDK, OpenTelemetry, and the Langfuse integration packages.

npm install ai @ai-sdk/openai @langfuse/tracing @langfuse/otel @opentelemetry/sdk-node

Add credentials

Set your Langfuse credentials as environment variables so the SDK knows which project to write to.

LANGFUSE_SECRET_KEY = "sk-lf-..."
LANGFUSE_PUBLIC_KEY = "pk-lf-..."
LANGFUSE_BASE_URL = "https://cloud.langfuse.com" # 🇪🇺 EU region
# LANGFUSE_BASE_URL = "https://us.cloud.langfuse.com" # 🇺🇸 US region

Initialize OpenTelemetry with Langfuse

Set up the OpenTelemetry SDK with the Langfuse span processor. This captures telemetry data from the Vercel AI SDK and sends it to Langfuse.

import { NodeSDK } from "@opentelemetry/sdk-node";
import { LangfuseSpanProcessor } from "@langfuse/otel";

const sdk = new NodeSDK({
  spanProcessors: [new LangfuseSpanProcessor()],
});

sdk.start();

Enable telemetry in your AI SDK calls

Pass experimental_telemetry: { isEnabled: true } to your AI SDK functions. The AI SDK automatically creates telemetry spans, which the LangfuseSpanProcessor captures and sends to Langfuse.

import { generateText } from "ai";
import { openai } from "@ai-sdk/openai";

const { text } = await generateText({
  model: openai("gpt-4o"),
  prompt: "What is the weather like today?",
  // Opt in to AI SDK telemetry so this call emits spans for Langfuse
  experimental_telemetry: { isEnabled: true },
});

console.log(text);

Langfuse’s LangChain integration uses a callback handler to record and send traces to Langfuse. If you already use LangChain, you can start using Langfuse with minimal changes to your code.
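A callback handler works by reacting to the start and end events a framework emits as each step runs. The toy below models that idea in plain Python to show how nested events become a tree of observations; ToyHandler, on_start, on_end, and run_step are hypothetical and are neither LangChain’s callback API nor Langfuse’s handler.

```python
from typing import Any, Callable, Dict, List


class ToyHandler:
    """Hypothetical handler that turns start/end events into nested records."""

    def __init__(self) -> None:
        self.roots: List[Dict[str, Any]] = []
        self._stack: List[Dict[str, Any]] = []

    def on_start(self, name: str, inputs: Any) -> None:
        node = {"name": name, "input": inputs, "output": None, "children": []}
        if self._stack:
            self._stack[-1]["children"].append(node)  # nest under the running step
        else:
            self.roots.append(node)  # a top-level step starts a new trace
        self._stack.append(node)

    def on_end(self, output: Any) -> None:
        self._stack.pop()["output"] = output


def run_step(name: str, fn: Callable[[Any], Any], inputs: Any, callbacks: List[ToyHandler]) -> Any:
    """Framework-side helper that emits events around each step."""
    for cb in callbacks:
        cb.on_start(name, inputs)
    output = fn(inputs)
    for cb in callbacks:
        cb.on_end(output)
    return output


handler = ToyHandler()


def chain(topic: str) -> str:
    prompt = run_step("prompt", lambda t: f"Tell me a joke about {t}", topic, [handler])
    return run_step("llm", lambda p: "Why did the cat sit on the laptop?", prompt, [handler])


# The outer "chain" step wraps the prompt and llm steps, so they become its children.
result = run_step("chain", chain, "cats", [handler])
```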

First install the Langfuse SDK and the LangChain OpenAI package.

pip install langfuse langchain-openai

Add your Langfuse credentials as environment variables so the callback handler knows which project to write to.

LANGFUSE_SECRET_KEY = "sk-lf-..."
LANGFUSE_PUBLIC_KEY = "pk-lf-..."
LANGFUSE_BASE_URL = "https://cloud.langfuse.com" # 🇪🇺 EU region
# LANGFUSE_BASE_URL = "https://us.cloud.langfuse.com" # 🇺🇸 US region

Initialize the Langfuse callback handler. LangChain has its own callback system, and Langfuse listens to those callbacks to record what your chains and LLMs are doing.

from langfuse.langchain import CallbackHandler

langfuse_handler = CallbackHandler()

Add the Langfuse callback handler to your chain. The handler plugs into LangChain’s event system: every time the chain runs or the LLM is called, LangChain emits events, and the handler turns those into traces and observations in Langfuse.

from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate

llm = ChatOpenAI(model="gpt-4o")
prompt = ChatPromptTemplate.from_template("Tell me a joke about {topic}")
chain = prompt | llm

# Pass the handler in the invocation config so this run is traced in Langfuse
response = chain.invoke(
    {"topic": "cats"},
    config={"callbacks": [langfuse_handler]},
)
print(response.content)

Langfuse’s LangChain integration uses a callback handler to record and send traces to Langfuse. If you already use LangChain, you can start using Langfuse with minimal changes to your code.

First install the Langfuse core SDK and the LangChain integration.

npm install @langfuse/core @langfuse/langchain

Add your Langfuse credentials as environment variables so the integration knows which project to send your traces to.

LANGFUSE_SECRET_KEY = "sk-lf-..."
LANGFUSE_PUBLIC_KEY = "pk-lf-..."
LANGFUSE_BASE_URL = "https://cloud.langfuse.com" # 🇪🇺 EU region
# LANGFUSE_BASE_URL = "https://us.cloud.langfuse.com" # 🇺🇸 US region

Initialize OpenTelemetry

Install the OpenTelemetry Node SDK, which the Langfuse integration uses under the hood to capture the data from each LangChain run, together with the Langfuse OpenTelemetry package that provides the LangfuseSpanProcessor.

npm install @opentelemetry/sdk-node @langfuse/otel

Next, initialize the Node SDK. You can do this either in a dedicated instrumentation file or directly at the top of your main file.

The inline setup is the simplest way to get started. It works well for projects where your main file is executed first and import order is straightforward.

Now initialize the LangfuseSpanProcessor and start the SDK. The LangfuseSpanProcessor is the component that takes the data collected by OpenTelemetry and sends it to your Langfuse project.

Important: start the SDK before initializing the logic that needs to be traced to avoid losing data.

import { NodeSDK } from "@opentelemetry/sdk-node";
import { LangfuseSpanProcessor } from "@langfuse/otel";

// Register the Langfuse span processor so finished spans are sent to Langfuse
const sdk = new NodeSDK({
  spanProcessors: [new LangfuseSpanProcessor()],
});

// Start tracing before any code that should be traced runs
sdk.start();

The instrumentation file is often preferred when you’re using frameworks that have a complex startup order (Next.js, serverless, bundlers) or if you want a clean, predictable place where tracing is always initialized first.

Create an instrumentation.ts file that sets up the OpenTelemetry SDK, which collects data about each LangChain run. The LangfuseSpanProcessor is the component that takes that collected data and sends it to your Langfuse project.

// instrumentation.ts
import { NodeSDK } from "@opentelemetry/sdk-node";
import { LangfuseSpanProcessor } from "@langfuse/otel";

const sdk = new NodeSDK({
  spanProcessors: [new LangfuseSpanProcessor()],
});

sdk.start();

In your main file, import the instrumentation.ts file first so all later imports run with tracing enabled.

import "./instrumentation"; // Must be the first import

Finally, initialize the Langfuse CallbackHandler and pass it to your agent. The CallbackHandler listens to the LangChain agent’s actions and prepares that information to be sent to Langfuse.

import { CallbackHandler } from "@langfuse/langchain";

// Initialize the Langfuse CallbackHandler
const langfuseHandler = new CallbackHandler();

The { callbacks: [langfuseHandler] } option passed to agent.invoke is what attaches the CallbackHandler to the agent run.

import { createAgent, tool } from "langchain";
import * as z from "zod";

// A toy tool the agent can call
const getWeather = tool(
  (input) => `It's always sunny in ${input.city}!`,
  {
    name: "get_weather",
    description: "Get the weather for a given city",
    schema: z.object({
      city: z.string().describe("The city to get the weather for"),
    }),
  }
);

const agent = createAgent({
  model: "openai:gpt-5-mini",
  tools: [getWeather],
});

// Passing the handler in the invoke options traces this agent run
console.log(
  await agent.invoke(
    { messages: [{ role: "user", content: "What's the weather in San Francisco?" }] },
    { callbacks: [langfuseHandler] }
  )
);

The Langfuse Python SDK gives you full control over how you instrument your application and can be used with any other framework.

1. Install package:

pip install langfuse

2. Add credentials:

LANGFUSE_SECRET_KEY = "sk-lf-..."
LANGFUSE_PUBLIC_KEY = "pk-lf-..."
LANGFUSE_BASE_URL = "https://cloud.langfuse.com" # 🇪🇺 EU region
# LANGFUSE_BASE_URL = "https://us.cloud.langfuse.com" # 🇺🇸 US region

3. Instrument your application:

Instrumentation means adding code that records what’s happening in your application so it can be sent to Langfuse. There are three main ways of instrumenting your code with the Python SDK.

In this example we will use the context manager. You can also use the decorator or create manual observations.

from langfuse import get_client

langfuse = get_client()

# Create a span using a context manager
with langfuse.start_as_current_observation(as_type="span", name="process-request") as span:
    # Your processing logic here
    span.update(output="Processing complete")

    # Create a nested generation for an LLM call
    with langfuse.start_as_current_observation(as_type="generation", name="llm-response", model="gpt-3.5-turbo") as generation:
        # Your LLM call logic here
        generation.update(output="Generated response")

# All spans are automatically closed when exiting their context blocks

# Flush events in short-lived applications
langfuse.flush()

When should I call langfuse.flush()?

The SDK sends events to Langfuse in the background. In short-lived applications such as scripts or serverless functions, call langfuse.flush() before the process exits so that queued events are not lost; long-running applications do not need to call it after every request.

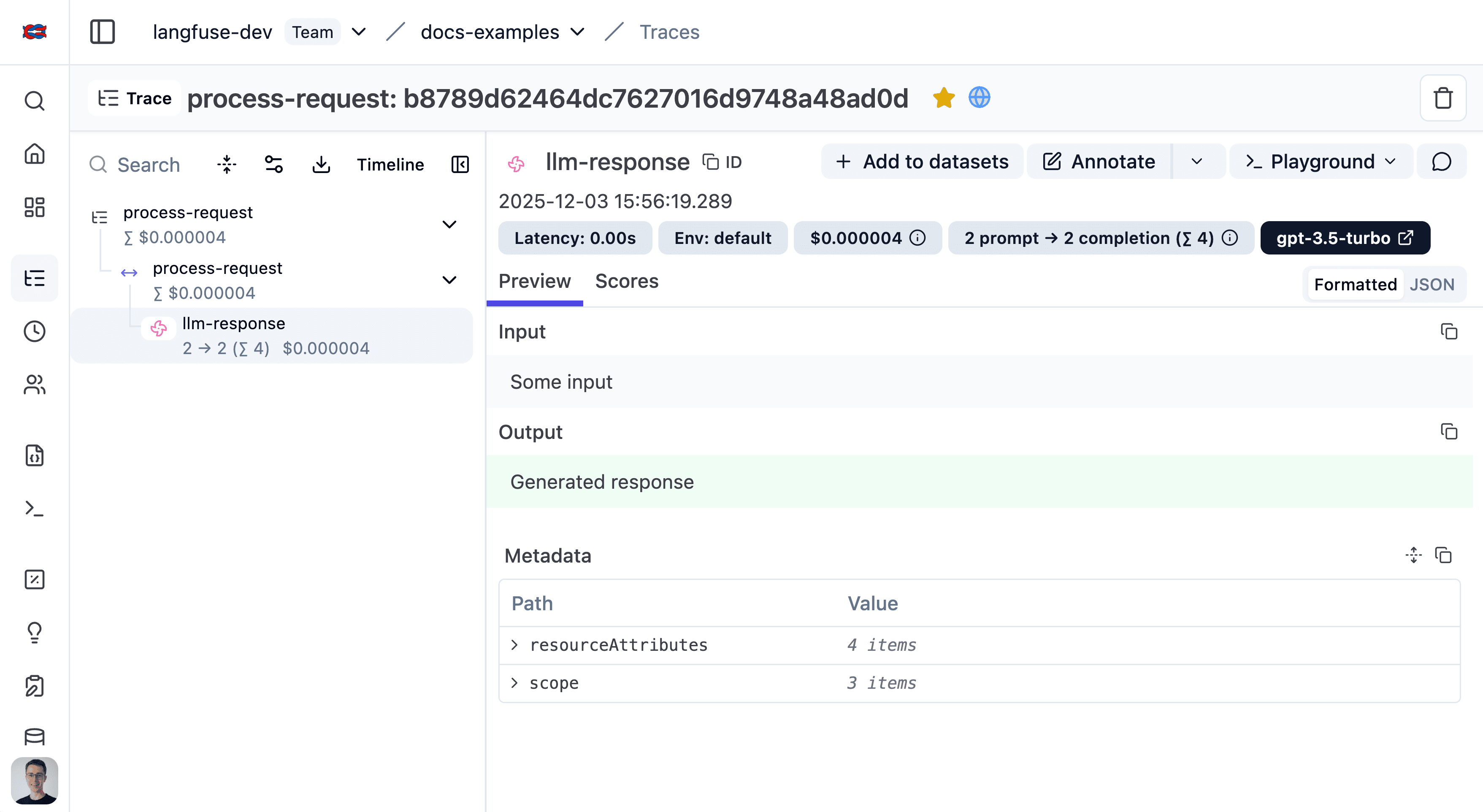
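As a rough mental model of why flushing matters: events are queued and sent in batches rather than on every call. The toy exporter below (hypothetical names, not the SDK’s implementation) sends only when a batch fills up or when flush() is called, so a script that exits right after its last event would otherwise leave the final partial batch unsent.

```python
from typing import Any, Callable, List


class ToyBatchExporter:
    """Hypothetical buffered exporter: queues events and sends them in batches."""

    def __init__(self, send: Callable[[List[Any]], None], max_batch: int = 3) -> None:
        self._send = send
        self._max_batch = max_batch
        self._queue: List[Any] = []

    def add(self, event: Any) -> None:
        self._queue.append(event)
        if len(self._queue) >= self._max_batch:
            self.flush()  # a full batch goes out immediately

    def flush(self) -> None:
        # Send whatever is queued, even a partial batch.
        if self._queue:
            self._send(list(self._queue))
            self._queue.clear()


batches: List[List[Any]] = []
exporter = ToyBatchExporter(batches.append, max_batch=3)

for i in range(4):
    exporter.add(f"event-{i}")
# Only the first full batch has been sent; "event-3" is still queued.
sent_before_flush = [list(b) for b in batches]
exporter.flush()  # without this, "event-3" would be lost if the process exited here
```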

4. Run your application and see the trace in Langfuse:

See the trace in Langfuse.

Use the Langfuse JS/TS SDK to wrap any LLM or agent

Install packages

Install the Langfuse tracing SDK, the Langfuse OpenTelemetry integration, and the OpenTelemetry Node SDK.

npm install @langfuse/tracing @langfuse/otel @opentelemetry/sdk-node

Add credentials

Add your Langfuse credentials to your environment variables so the tracing SDK knows which Langfuse project it should send your recorded data to.

LANGFUSE_SECRET_KEY = "sk-lf-..."
LANGFUSE_PUBLIC_KEY = "pk-lf-..."
LANGFUSE_BASE_URL = "https://cloud.langfuse.com" # 🇪🇺 EU region
# LANGFUSE_BASE_URL = "https://us.cloud.langfuse.com" # 🇺🇸 US region

Initialize OpenTelemetry

The OpenTelemetry Node SDK installed above is what the Langfuse integration uses under the hood to capture the data from each traced call. If you skipped that step, install it now:

npm install @opentelemetry/sdk-node

Next, initialize the Node SDK. You can do this either in a dedicated instrumentation file or directly at the top of your main file.

The inline setup is the simplest way to get started. It works well for projects where your main file is executed first and import order is straightforward.

Now initialize the LangfuseSpanProcessor and start the SDK. The LangfuseSpanProcessor is the component that takes the data collected by OpenTelemetry and sends it to your Langfuse project.

Important: start the SDK before initializing the logic that needs to be traced to avoid losing data.

import { NodeSDK } from "@opentelemetry/sdk-node";
import { LangfuseSpanProcessor } from "@langfuse/otel";

// Register the Langfuse span processor so finished spans are sent to Langfuse
const sdk = new NodeSDK({
  spanProcessors: [new LangfuseSpanProcessor()],
});

// Start tracing before any code that should be traced runs
sdk.start();

The instrumentation file is often preferred when you’re using frameworks that have a complex startup order (Next.js, serverless, bundlers) or if you want a clean, predictable place where tracing is always initialized first.

Create an instrumentation.ts file that sets up the OpenTelemetry SDK, which collects data about each traced operation. The LangfuseSpanProcessor is the component that takes that collected data and sends it to your Langfuse project.

// instrumentation.ts
import { NodeSDK } from "@opentelemetry/sdk-node";
import { LangfuseSpanProcessor } from "@langfuse/otel";

const sdk = new NodeSDK({
  spanProcessors: [new LangfuseSpanProcessor()],
});

sdk.start();

In your main file, import the instrumentation.ts file first so all later imports run with tracing enabled.

import "./instrumentation"; // Must be the first import

Instrument application

Instrumentation means adding code that records what’s happening in your application so it can be sent to Langfuse. Here, OpenTelemetry acts as the system that collects those recordings.

import { startActiveObservation, startObservation } from "@langfuse/tracing";

// startActiveObservation creates a trace for this block of work.
// Everything inside automatically becomes part of that trace.
await startActiveObservation("user-request", async (span) => {
  span.update({
    input: { query: "What is the capital of France?" },
  });

  // Created while "user-request" is the active observation, so it is automatically nested as its child.
  const generation = startObservation(
    "llm-call",
    {
      model: "gpt-4",
      input: [{ role: "user", content: "What is the capital of France?" }],
    },
    { asType: "generation" },
  );

  // ... your real LLM call would happen here ...

  generation
    .update({
      output: { content: "The capital of France is Paris." }, // update the output of the generation
    })
    .end(); // mark this nested observation as complete

  // Add final information about the overall request
  span.update({ output: "Successfully answered." });
});

Use the agent mode of your editor to integrate Langfuse into your existing codebase.

This feature is experimental. Please share feedback or issues on GitHub.

Using the Observability Skill

Install the Langfuse Observability Skill to automatically instrument your LLM application with best practices:

npx skills add langfuse/skills --skill "langfuse-observability"

Using the Langfuse Docs MCP Server

Install the Langfuse Docs MCP Server. The agent then uses the server’s searchLangfuseDocs tool to find the correct documentation for your integration.

Add Langfuse Docs MCP to Cursor via the one-click install:

Manual configuration

Add the following to your mcp.json:

{
  "mcpServers": {
    "langfuse-docs": {
      "url": "https://langfuse.com/api/mcp"
    }
  }
}

Add Langfuse Docs MCP to Copilot in VSCode via the one-click install:

Manual configuration

Add Langfuse Docs MCP to Copilot in VSCode via the following steps:

- Open Command Palette (⌘+Shift+P)
- Open “MCP: Add Server…”
- Select HTTP
- Paste https://langfuse.com/api/mcp
- Select a name (e.g. langfuse-docs) and whether to save in user or workspace settings
- You’re all set! The MCP server is now available in Agent mode

Add Langfuse Docs MCP to Claude Code via the CLI:

claude mcp add \
  --transport http \
  langfuse-docs \
  https://langfuse.com/api/mcp \
  --scope user

Manual configuration

Alternatively, add the following to your settings file:

- User scope: ~/.claude/settings.json
- Project scope: your-repo/.claude/settings.json
- Local scope: your-repo/.claude/settings.local.json

{
  "mcpServers": {
    "langfuse-docs": {
      "transportType": "http",
      "url": "https://langfuse.com/api/mcp",
      "verifySsl": true
    }
  }
}

One-liner JSON import

claude mcp add-json langfuse-docs \
  '{"type":"http","url":"https://langfuse.com/api/mcp"}'

Once added, start a Claude Code session (claude) and type /mcp to confirm the connection.
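The JSON configurations in this section share one shape: an "mcpServers" object keyed by server name. If your client’s config file already lists other servers, you want to add the langfuse-docs entry without overwriting them. The helper below is a hypothetical sketch that uses only the standard json module; it adds the URL-based entry from the mcp.json example above, so adapt the entry if your client expects a different format.

```python
import json
from typing import Any, Dict

# URL-based entry taken from the mcp.json example in this guide.
LANGFUSE_DOCS_ENTRY: Dict[str, Any] = {"url": "https://langfuse.com/api/mcp"}


def add_langfuse_docs_server(config_text: str, name: str = "langfuse-docs") -> str:
    """Return config JSON with the Langfuse Docs MCP entry added (hypothetical helper).

    Other servers are kept; an existing entry with the same name is replaced.
    An empty or whitespace-only file is treated as an empty config.
    """
    config: Dict[str, Any] = json.loads(config_text) if config_text.strip() else {}
    servers = config.setdefault("mcpServers", {})
    servers[name] = dict(LANGFUSE_DOCS_ENTRY)
    return json.dumps(config, indent=2)


# Hypothetical existing config with one unrelated server already configured.
existing = '{"mcpServers": {"github": {"command": "npx", "args": ["some-github-mcp"]}}}'
updated = json.loads(add_langfuse_docs_server(existing))
```

To apply it, read your client’s config file, pass its text to add_langfuse_docs_server, and write the result back (keeping a backup of the original).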

Add Langfuse Docs MCP to Windsurf via the following steps:

- Open Command Palette (⌘+Shift+P)
- Open “MCP Configuration Panel”
- Select Add custom server
- Add the following configuration:

{
  "mcpServers": {
    "langfuse-docs": {
      "command": "npx",
      "args": ["mcp-remote", "https://langfuse.com/api/mcp"]
    }
  }
}

The Langfuse Docs MCP server communicates over the streamableHttp transport, which most clients support.

{
  "mcpServers": {
    "langfuse-docs": {
      "url": "https://langfuse.com/api/mcp"
    }
  }
}

If you use a client that does not support streamableHttp (e.g. Windsurf), you can use the mcp-remote command as a local proxy.

{
  "mcpServers": {
    "langfuse-docs": {
      "command": "npx",
      "args": ["mcp-remote", "https://langfuse.com/api/mcp"]
    }
  }
}

Using the agent prompt

Copy and execute the following prompt in your editor’s agent mode. The agent can use its native web search capabilities to find documentation.


# Langfuse Agentic Onboarding

## Goals

Your goal is to help me integrate Langfuse tracing into my codebase.

## Rules

Before you begin, you must understand these three fundamental rules:

1. Do Not Change Business Logic: You are strictly forbidden from changing, refactoring, or altering any of my existing code's logic. Your only task is to add the necessary code for Langfuse integration, such as decorators, imports, handlers, and environment variable initializations.
2. Adhere to the Workflow: You must follow the step-by-step workflow outlined below in the exact sequence.
3. If available, use the langfuse-docs MCP server and the `searchLangfuseDocs` tool to retrieve information from the Langfuse docs. If it is not available, please use your websearch capabilities to find the information.

## Integration Workflow

### Step 1: Language and Compatibility Check

First, analyze the codebase to identify the primary programming language.

- If the language is Python or JavaScript/TypeScript, proceed to Step 2.
- If the language is not Python or JavaScript/TypeScript, you must stop immediately. Inform me that the codebase is currently unsupported for this AI-based setup, and do not proceed further.

### Step 2: Codebase Discovery & Entrypoint Confirmation

Once you have confirmed the language is compatible, explore the entire codebase to understand its purpose.

- Identify all files and functions that contain LLM calls or are likely candidates for tracing.
- Present this list of files and function names to me.
- If you are unclear about the main entry point of the application (e.g., the primary API route or the main script to execute), you must ask me for confirmation on which parts are most critical to trace before proceeding to the next step.

### Step 3: Discover Available Integrations

After I confirm the files and entry points, get a list of available integrations from the Langfuse docs by calling the `getLangfuseOverview` tool.

### Step 4: Analyze Confirmed Files for Technologies

Based on the files we confirmed in Step 2, perform a deeper analysis to identify the specific LLM frameworks or SDKs being used (e.g., OpenAI SDK, LangChain, LlamaIndex, Anthropic SDK, etc.). Search the Langfuse docs for the integration instructions for these frameworks via the `searchLangfuseDocs` tool. If you are unsure, repeatedly query the Langfuse docs via the `searchLangfuseDocs` tool.

### Step 5: Propose a Development Plan

Before you write or modify a single line of code, you must present me with a clear, step-by-step development plan. This plan must include:

- The Langfuse package(s) you will install.
- The files you intend to modify.
- The specific code changes you will make, showing the exact additions.
- Instructions on where I will need to add my Langfuse API keys after your work is done.

I will review this plan and give you my approval before you proceed.

### Step 6: Implement the Integration

Once I approve your plan, execute it. First, you must use your terminal access to run the necessary package installation command (e.g., pip install langfuse, npm install langfuse) yourself. After the installation is successful, modify the code exactly as described in the plan. When done, please review the code changes. The goal here is to keep the integration as simple as possible.

### Step 7: Request User Review and Wait

After you have made all the changes, notify me that your work is complete. Explicitly ask me to run the application and confirm that everything is working correctly and that you can make changes/improvements if needed.

### Step 8: Debug and Fix if Necessary

If I report that something is not working correctly, analyze my feedback. Use the knowledge you have to debug the issue. If required, re-crawl the relevant Langfuse documentation to find a solution, propose a fix to me, and then implement it.

Explore all integrations and frameworks that Langfuse supports.

See your trace in Langfuse

After running your application, visit the Langfuse interface to view the trace you just created. (Example LangGraph trace in Langfuse)

Not seeing what you expected?

- I have set up Langfuse, but I do not see any traces in the dashboard. How do I solve this?

- Why are the input and output of a trace empty?

- Why do I see HTTP requests or database queries in my Langfuse traces?

Next steps

Now that you’ve ingested your first trace, you can start adding more functionality to your traces. We recommend starting with the following:

- Group traces into sessions for multi-turn applications

- Split traces into environments for different stages of your application

- Add attributes to your traces so you can filter them in the future

Already know what you want? Take a look under Features for guides on specific topics.