Trace Anthropic Models in Langfuse

Anthropic provides advanced language models like Claude, known for their safety, helpfulness, and strong reasoning capabilities. By combining Anthropic’s models with Langfuse, you can trace, monitor, and analyze your AI workloads in development and production.

This notebook demonstrates two different ways to use Anthropic models with Langfuse:

- OpenTelemetry Instrumentation: Use the AnthropicInstrumentor library to wrap Anthropic SDK calls and send OpenTelemetry spans to Langfuse.
- OpenAI SDK: Use Anthropic’s OpenAI-compatible endpoints via Langfuse’s OpenAI SDK wrapper.

What is Anthropic?

Anthropic is an AI safety company that develops Claude, a family of large language models designed to be helpful, harmless, and honest. Claude models excel at complex reasoning, analysis, and creative tasks.

What is Langfuse?

Langfuse is an open source platform for LLM observability and monitoring. It helps you trace and monitor your AI applications by capturing metadata, prompt details, token usage, latency, and more.

Step 1: Install Dependencies

Before you begin, install the necessary packages in your Python environment:

%pip install anthropic openai langfuse opentelemetry-instrumentation-anthropic

Step 2: Configure Langfuse SDK

Next, set up your Langfuse API keys. You can get these keys by signing up for a free Langfuse Cloud account or by self-hosting Langfuse. These environment variables are essential for the Langfuse client to authenticate and send data to your Langfuse project.

Also set your Anthropic API key, which you can create in the Anthropic Console.

import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_BASE_URL"] = "https://cloud.langfuse.com"  # 🇪🇺 EU region
# os.environ["LANGFUSE_BASE_URL"] = "https://us.cloud.langfuse.com"  # 🇺🇸 US region

os.environ["ANTHROPIC_API_KEY"] = "sk-ant-..."  # Your Anthropic API key

With the environment variables set, we can now initialize the Langfuse client. get_client() initializes the Langfuse client using the credentials provided in the environment variables.

from langfuse import get_client

langfuse = get_client()

# Verify connection
if langfuse.auth_check():
    print("Langfuse client is authenticated and ready!")
else:
    print("Authentication failed. Please check your credentials and host.")

Langfuse client is authenticated and ready!
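If the authentication check fails, a frequent cause is a variable that was never set or was left blank. The following is a minimal sketch, not part of the Langfuse SDK, that reports which of the variables from Step 2 are missing. The helper name missing_env_vars and the REQUIRED_VARS tuple are hypothetical; treating LANGFUSE_BASE_URL as required is an assumption made here to mirror the configuration above, so adjust the tuple if you rely on a default.

```python
import os

# Variable names mirror the configuration in Step 2.
# Assumption: all four are treated as required for this check.
REQUIRED_VARS = (
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_BASE_URL",
    "ANTHROPIC_API_KEY",
)


def missing_env_vars(env=None, required=REQUIRED_VARS):
    """Return the names of required variables that are unset or blank."""
    env = os.environ if env is None else env
    return [name for name in required if not str(env.get(name, "")).strip()]


# Report against the current process environment.
missing = missing_env_vars()
print("Missing:", ", ".join(missing) if missing else "none")
```

Running this before get_client() narrows a failed check down to either a missing variable or an incorrect value.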

Approach 1: OpenTelemetry Instrumentation

Use the AnthropicInstrumentor library to wrap Anthropic SDK calls and send OpenTelemetry spans to Langfuse.

from opentelemetry.instrumentation.anthropic import AnthropicInstrumentor

AnthropicInstrumentor().instrument()

from anthropic import Anthropic

# Initialize the Anthropic client
client = Anthropic(
    api_key=os.environ.get("ANTHROPIC_API_KEY")
)

# Make the API call to Anthropic
message = client.messages.create(
    model="claude-opus-4-20250514",
    max_tokens=1000,
    temperature=1,
    system="You are a world-class poet. Respond only with short poems.",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Why is the ocean salty?"
                }
            ]
        }
    ]
)

print(message.content)

Approach 2: OpenAI SDK Drop-in Replacement

Anthropic provides OpenAI-compatible endpoints that allow you to use the OpenAI SDK to interact with Claude models. This is particularly useful if you have existing code using the OpenAI SDK that you want to switch to Claude.
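One practical difference when moving between the two approaches is the request shape: the native call in Approach 1 passes the system prompt as a separate argument and allows message content to be a list of typed blocks, while the chat-completions format carries the system prompt as a message and typically uses plain strings. The sketch below illustrates that mapping for text-only requests. The function to_openai_messages is a hypothetical helper, not part of either SDK, and it ignores non-text blocks such as images or tool calls.

```python
def to_openai_messages(system, messages):
    """Convert an Anthropic-style request (system string plus messages whose
    content may be a list of text blocks) into chat-completions messages."""
    converted = []
    if system:
        # The system prompt becomes the first message.
        converted.append({"role": "system", "content": system})
    for message in messages:
        content = message["content"]
        if isinstance(content, list):
            # Keep only text blocks; other block types are out of scope here.
            content = "".join(
                block["text"] for block in content if block.get("type") == "text"
            )
        converted.append({"role": message["role"], "content": content})
    return converted


chat_messages = to_openai_messages(
    "You are a world-class poet. Respond only with short poems.",
    [{"role": "user", "content": [{"type": "text", "text": "Why is the ocean salty?"}]}],
)
print(chat_messages)
```

The resulting list has the same shape as the messages argument used with the OpenAI SDK.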

# Langfuse OpenAI client
from langfuse.openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("ANTHROPIC_API_KEY"),  # Your Anthropic API key
    base_url="https://api.anthropic.com/v1/"  # Anthropic's API endpoint
)

response = client.chat.completions.create(
    model="claude-opus-4-20250514",  # Anthropic model name
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Who are you?"}
    ],
)

print(response.choices[0].message.content)

View Traces in Langfuse

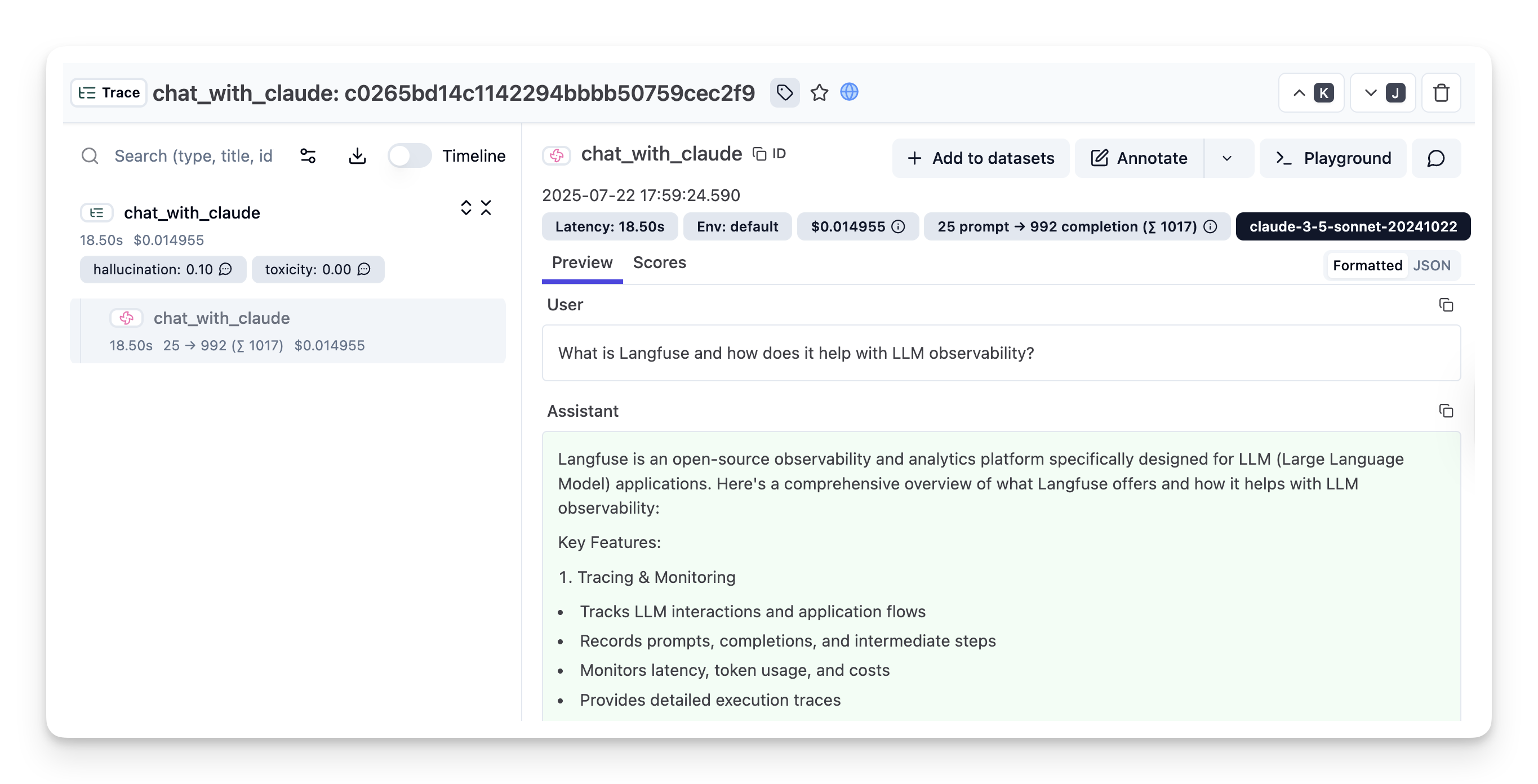

After executing the application, navigate to your Langfuse Trace Table. You will find detailed traces of the application’s execution, providing insights into the agent conversations, LLM calls, inputs, outputs, and performance metrics.


Interoperability with the Python SDK

You can use this integration together with the Langfuse SDKs to add further attributes to the trace.

The @observe() decorator wraps your instrumented code in a trace, and propagate_attributes attaches attributes such as user_id, session_id, tags, metadata, and version to every span created inside its scope.

from langfuse import observe, propagate_attributes, get_client

langfuse = get_client()

@observe()
def my_llm_pipeline(input):
    # Add additional attributes (user_id, session_id, metadata, version, tags) to all spans created within this execution scope
    with propagate_attributes(
        user_id="user_123",
        session_id="session_abc",
        tags=["agent", "my-trace"],
        metadata={"email": "user@langfuse.com"},
        version="1.0.0"
    ):
        # YOUR APPLICATION CODE HERE
        result = call_llm(input)

        # Update the trace input and output
        langfuse.update_current_trace(
            input=input,
            output=result,
        )

        return result

Learn more about using the Decorator in the Langfuse SDK instrumentation docs.

Troubleshooting

No traces appearing

First, enable debug mode in the Python SDK:

export LANGFUSE_DEBUG="True"

Then run your application and check the debug logs:

- OTel spans appear in the logs: Your application is instrumented correctly but traces are not reaching Langfuse. To resolve this:
  - Call langfuse.flush() at the end of your application to ensure all traces are exported.
  - Verify that you are using the correct API keys and base URL.
- No OTel spans in the logs: Your application is not instrumented correctly. Make sure the instrumentation runs before your application code.
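For short-lived scripts, one way to make sure the flush always happens is to wrap the entry point in try/finally. The sketch below assumes only that the client object exposes a flush() method, as the Langfuse client does. The helper run_then_flush and the FakeClient stand-in are hypothetical names used so the example runs without credentials; in a real application you would pass the object returned by get_client().

```python
def run_then_flush(client, task):
    """Run task() and always call client.flush(), even if task() raises."""
    try:
        return task()
    finally:
        client.flush()


class FakeClient:
    """Stand-in for the Langfuse client, used only to demonstrate the helper."""

    def __init__(self):
        self.flushed = False

    def flush(self):
        self.flushed = True


demo_client = FakeClient()
result = run_then_flush(demo_client, lambda: "done")
print(result, demo_client.flushed)
```

Because the flush sits in the finally clause, pending traces are exported even when the application code fails partway through.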

Unwanted observations in Langfuse

The Langfuse SDK is based on OpenTelemetry. Other libraries in your application may emit OTel spans that are not relevant to you. These still count toward your billable units, so you should filter them out. See Unwanted spans in Langfuse for details.

Missing attributes

Some attributes may be stored in the metadata object of the observation rather than being mapped to the Langfuse data model. If a mapping or integration does not work as expected, please raise an issue on GitHub.

Next Steps

Once you have instrumented your code, you can manage, evaluate and debug your application: