Trace Google Vertex AI Models in Langfuse

This notebook shows how to trace and observe models queried through Google Vertex AI using Langfuse.

What is Google Vertex AI? Google Vertex AI is Google Cloud’s unified platform for building, deploying, and managing machine learning and generative AI with managed services, SDKs, and APIs. It streamlines everything from data prep and training to tuning and prediction, and provides access to foundation models like Gemini with enterprise-grade security and MLOps tooling.

What is Langfuse? Langfuse is an open source platform for LLM observability and monitoring. It helps you trace and monitor your AI applications by capturing metadata, prompt details, token usage, latency, and more.

Step 1: Install Dependencies

Before you begin, install the necessary packages in your Python environment:

%pip install langfuse google-cloud-aiplatform openinference-instrumentation-vertexai

Step 2: Configure Langfuse SDK

Next, set up your Langfuse API keys. You can get these keys by signing up for a free Langfuse Cloud account or by self-hosting Langfuse. These environment variables are essential for the Langfuse client to authenticate and send data to your Langfuse project.

Also configure your Google Vertex AI credentials. This example uses Application Default Credentials (ADC) loaded from a service account key file.

import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com

os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."

os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."

os.environ["LANGFUSE_BASE_URL"] = "https://cloud.langfuse.com" # 🇪🇺 EU region

# os.environ["LANGFUSE_BASE_URL"] = "https://us.cloud.langfuse.com" # 🇺🇸 US region

# Path to your Google Cloud service account key file (used for Application Default Credentials)

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "your-service-account-key.json"

With the environment variables set, we can now initialize the Langfuse client. get_client() reads the credentials from these environment variables.
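Because the client reads its configuration from the environment, a quick pre-flight check can make a missing or misspelled variable easier to spot. The following is a minimal sketch using only the standard library. The helper name `missing_env_vars` and the constant `NOTEBOOK_ENV_VARS` are hypothetical and not part of the Langfuse or Google SDKs; the variable names are the ones set in this notebook.

```python
import os

# The variables this notebook sets explicitly (not an official list of required settings).
NOTEBOOK_ENV_VARS = (
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_BASE_URL",
    "GOOGLE_APPLICATION_CREDENTIALS",
)


def missing_env_vars(names=NOTEBOOK_ENV_VARS, environ=None):
    """Return the names that are unset or empty in the given environment mapping."""
    env = os.environ if environ is None else environ
    return [name for name in names if not env.get(name)]


# Report anything that is missing before creating any client.
missing = missing_env_vars()
if missing:
    print("Missing environment variables:", ", ".join(missing))
```

The check only confirms that the values are present. Whether the keys are valid is still verified by the auth check that follows.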

from langfuse import get_client

# Initialise Langfuse client and verify connectivity

langfuse = get_client()

assert langfuse.auth_check(), "Langfuse auth failed - check your keys ✋"

Step 3: OpenTelemetry Instrumentation

Use VertexAIInstrumentor from the openinference-instrumentation-vertexai package to wrap Google Vertex AI SDK calls and send OpenTelemetry spans to Langfuse.

from openinference.instrumentation.vertexai import VertexAIInstrumentor

VertexAIInstrumentor().instrument()

Step 4: Run an Example

import vertexai

from vertexai.generative_models import GenerativeModel

# Initialize the SDK (use your project and region)

vertexai.init(project="your-project-id", location="europe-central2")

# Pick a Gemini model available in your region (examples: "gemini-1.5-flash", "gemini-1.5-pro", "gemini-2.5-flash")

model = GenerativeModel("gemini-2.5-flash")

# Single-shot generation

resp = model.generate_content("What is Langfuse?")

print(resp.text)

# (Optional) Streaming

for chunk in model.generate_content("Why is LLM observability important?", stream=True):
    print(chunk.text, end="")

View Traces in Langfuse

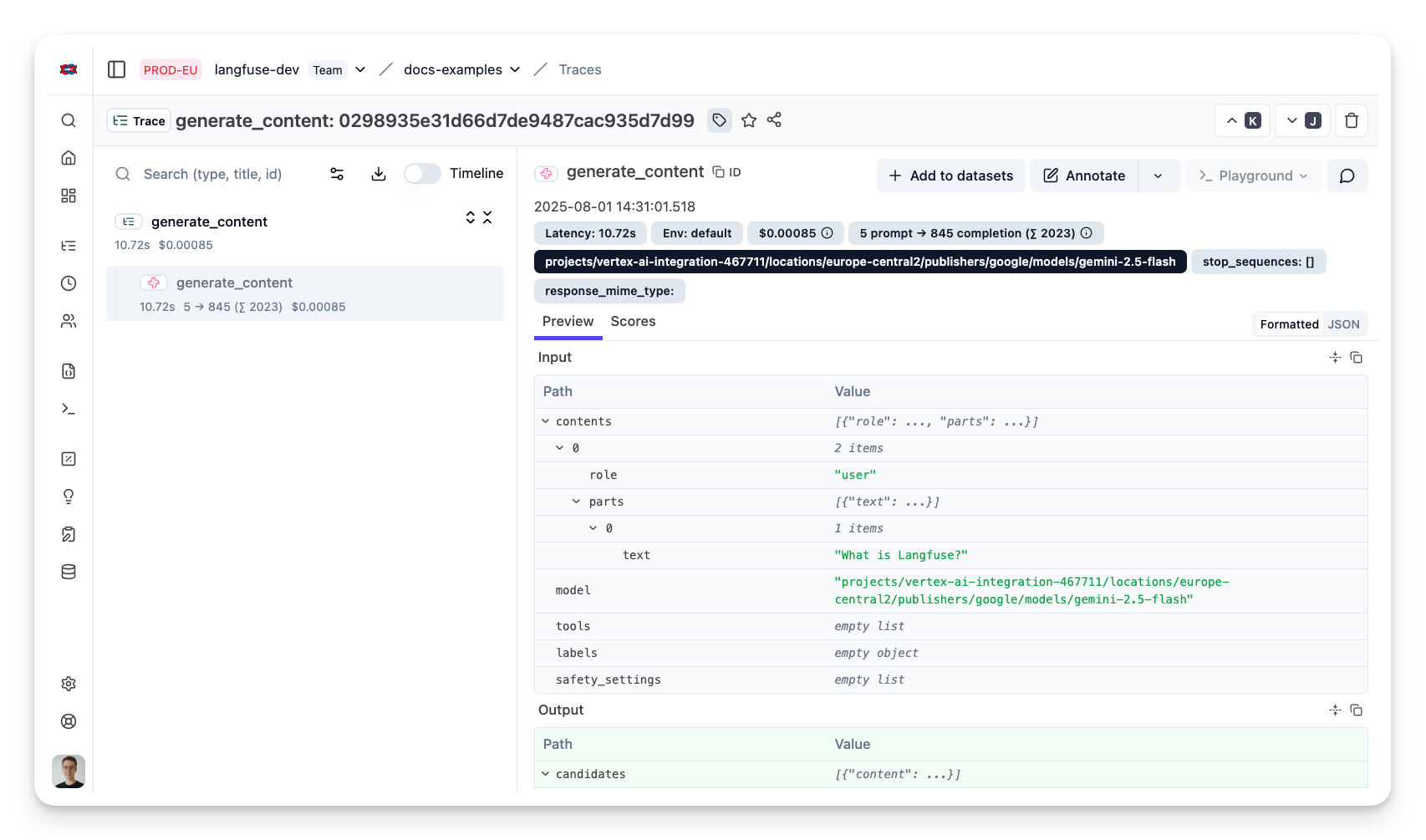

After running the example, open the trace table in your Langfuse project. Each trace shows the LLM calls with their inputs, outputs, and performance metrics such as latency and token usage.
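If a trace does not show up, one possible cause in short-lived scripts is that the process exited before buffered spans were exported. The sketch below assumes the client object exposes a `flush()` method, as the Langfuse Python client does. `run_then_flush` is a hypothetical helper name, not part of any SDK.

```python
def run_then_flush(client, task, *args, **kwargs):
    """Run task(*args, **kwargs) and flush the client's buffered spans afterwards."""
    try:
        return task(*args, **kwargs)
    finally:
        # Runs even if the task raises, so spans for failed calls are sent as well.
        client.flush()
```

With the objects created earlier in this notebook, a call could look like `run_then_flush(langfuse, model.generate_content, "What is Langfuse?")`. In a long-running notebook kernel this is usually unnecessary, since spans are exported in the background.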

Interoperability with the Python SDK

You can use this integration together with the Langfuse SDKs to add attributes to the trace.

The @observe() decorator provides a convenient way to wrap your instrumented code and add attributes to the trace.

from langfuse import observe, propagate_attributes, get_client

langfuse = get_client()

@observe()
def my_llm_pipeline(input):
    # Add additional attributes (user_id, session_id, metadata, version, tags) to all spans created within this execution scope
    with propagate_attributes(
        user_id="user_123",
        session_id="session_abc",
        tags=["agent", "my-trace"],
        metadata={"email": "user@langfuse.com"},
        version="1.0.0"
    ):
        # YOUR APPLICATION CODE HERE
        result = call_llm(input)

        # Update the trace input and output
        langfuse.update_current_trace(
            input=input,
            output=result,
        )

        return result

Learn more about using the Decorator in the Langfuse SDK instrumentation docs.
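The `call_llm` placeholder in the pipeline above is left for your own application code. As one possible way to fill it in, the sketch below reuses the `GenerativeModel` call from Step 4. The function body, the optional `model` parameter, and the default model name are assumptions made for illustration, and the sketch assumes `vertexai.init(...)` has already been called.

```python
def call_llm(prompt, model=None):
    """Hypothetical helper: send one prompt to a Gemini model and return the reply text."""
    if model is None:
        # Imported lazily so the function can also be exercised with a stand-in model.
        from vertexai.generative_models import GenerativeModel

        # Assumes vertexai.init(project=..., location=...) was called as in Step 4.
        model = GenerativeModel("gemini-2.5-flash")
    return model.generate_content(prompt).text
```

Accepting the model as an argument is a design choice for this sketch: it lets you pass any object with a compatible `generate_content` method, which makes the pipeline easier to try out without calling Vertex AI.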

Next Steps

Once you have instrumented your code, you can manage, evaluate and debug your application: