Model Usage & Cost Tracking

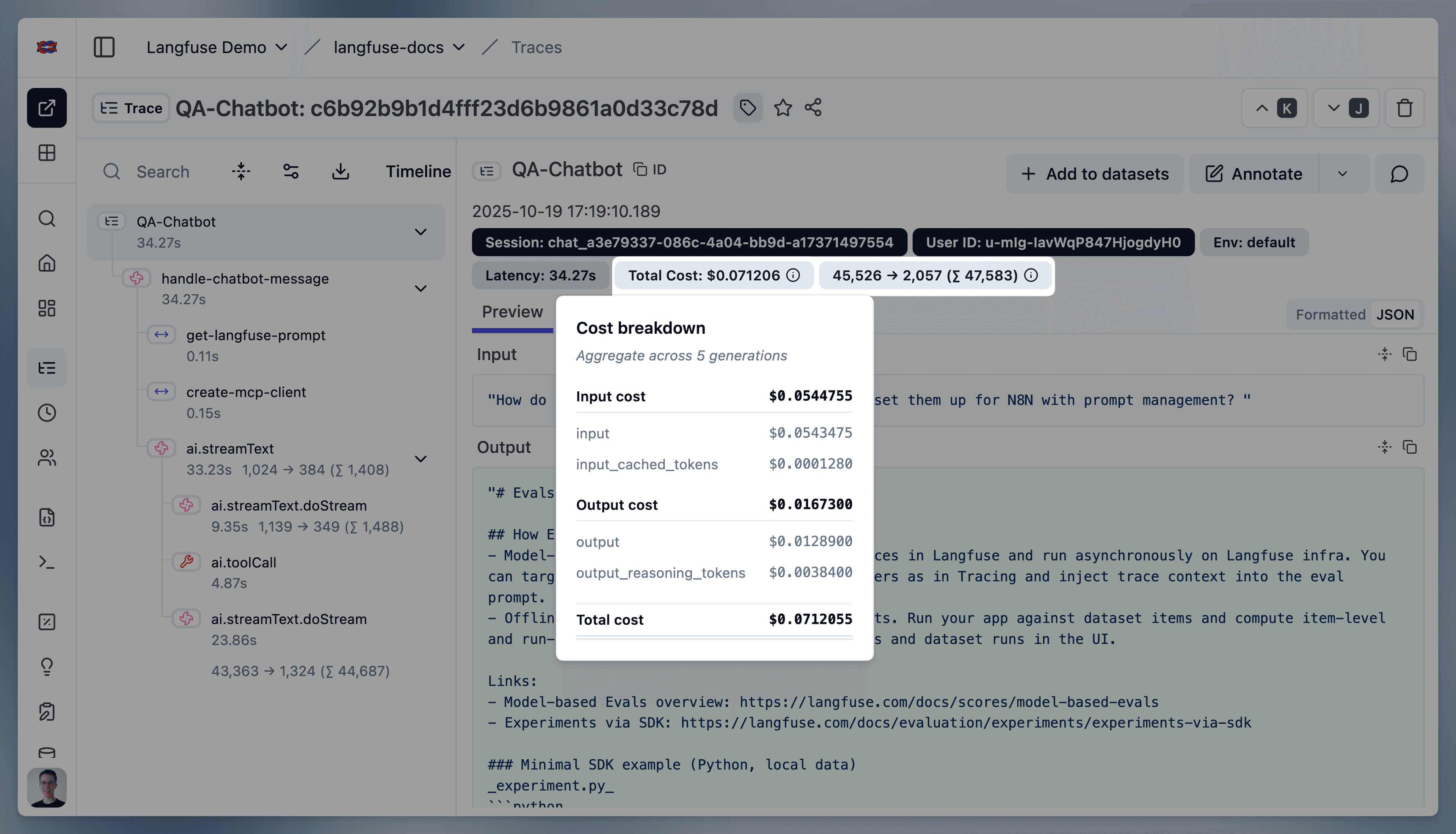

Langfuse tracks the usage and costs of your LLM generations and provides breakdowns by usage types. Usage and cost can be tracked on observations of type generation and embedding.

- Usage details: number of units consumed per usage type

- Cost details: USD cost per usage type

Usage types can be arbitrary strings and differ by LLM provider. At the highest level, they can be simply input and output. As LLMs grow more sophisticated, additional usage types are necessary, such as cached_tokens, audio_tokens, image_tokens.

In the UI, Langfuse summarizes all usage types that include the string input as input usage types, and similarly all usage types that include the string output as output usage types. If no total usage type is ingested, Langfuse sums up all usage type units to a total.
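The grouping rule above can be sketched in a few lines of Python. This is only an illustration of the described behavior, not Langfuse's actual implementation; the function name `summarize_usage` and the sample numbers are hypothetical.

```python
def summarize_usage(usage_details: dict[str, int]) -> dict[str, int]:
    """Group usage types into input, output, and total (illustrative only)."""
    # Every usage type whose key contains "input" counts as input usage.
    input_units = sum(v for k, v in usage_details.items() if "input" in k)
    # Every usage type whose key contains "output" counts as output usage.
    output_units = sum(v for k, v in usage_details.items() if "output" in k)
    # Use the ingested total if present; otherwise sum all usage type units.
    if "total" in usage_details:
        total_units = usage_details["total"]
    else:
        total_units = sum(usage_details.values())
    return {"input": input_units, "output": output_units, "total": total_units}


# Hypothetical usage details without an explicit "total" key.
usage = {"input": 100, "cache_read_input_tokens": 40, "output": 25}
summary = summarize_usage(usage)
print(summary)
```

Here `cache_read_input_tokens` is counted as input usage because its key contains the string input.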

Both usage details and cost details can be either

- ingested via API, SDKs or integrations

- or inferred based on the model parameter of the generation. Langfuse comes with a list of predefined popular models and their tokenizers including OpenAI, Anthropic, and Google models. You can also add your own custom model definitions or request official support for new models via GitHub. Inferred costs are calculated at the time of ingestion with the model and price information available at that point in time.

Ingested usage and cost are prioritized over inferred usage and cost.

Via the Daily Metrics API, you can retrieve aggregated daily usage and cost metrics from Langfuse for downstream use in analytics, billing, and rate-limiting. The API allows you to filter by application type, user, or tags.

Ingest usage and/or cost

If available in the LLM response, ingesting usage and/or cost is the most accurate and robust way to track usage in Langfuse.

Many of the Langfuse integrations automatically capture usage details and cost details data from the LLM response. If this does not work as expected, please create an issue on GitHub.

When using the @observe() decorator:

from langfuse import observe, get_client
import anthropic

langfuse = get_client()
anthropic_client = anthropic.Anthropic()

@observe(as_type="generation")
def anthropic_completion(**kwargs):
    # optional, extract some fields from kwargs
    kwargs_clone = kwargs.copy()
    input = kwargs_clone.pop('messages', None)
    model = kwargs_clone.pop('model', None)
    langfuse.update_current_generation(
        input=input,
        model=model,
        metadata=kwargs_clone
    )

    response = anthropic_client.messages.create(**kwargs)

    langfuse.update_current_generation(
        usage_details={
            "input": response.usage.input_tokens,
            "output": response.usage.output_tokens,
            "cache_read_input_tokens": response.usage.cache_read_input_tokens
            # "total": int, # if not set, it is derived from input + cache_read_input_tokens + output
        },
        # Optionally, also ingest usd cost. Alternatively, you can infer it via a model definition in Langfuse.
        cost_details={
            # Here we assume the input and output cost are 1 USD each and half the price for cached tokens.
            "input": 1,
            "cache_read_input_tokens": 0.5,
            "output": 1,
            # "total": float, # if not set, it is derived from input + cache_read_input_tokens + output
        }
    )

    # return result
    return response.content[0].text

@observe()
def main():
    return anthropic_completion(
        model="claude-3-opus-20240229",
        max_tokens=1024,
        messages=[
            {"role": "user", "content": "Hello, Claude"}
        ]
    )

main()

When creating manual generations:

from langfuse import get_client
import anthropic

langfuse = get_client()
anthropic_client = anthropic.Anthropic()

with langfuse.start_as_current_observation(
    as_type="generation",
    name="anthropic-completion",
    model="claude-3-opus-20240229",
    input=[{"role": "user", "content": "Hello, Claude"}]
) as generation:
    response = anthropic_client.messages.create(
        model="claude-3-opus-20240229",
        max_tokens=1024,
        messages=[{"role": "user", "content": "Hello, Claude"}]
    )

    generation.update(
        output=response.content[0].text,
        usage_details={
            "input": response.usage.input_tokens,
            "output": response.usage.output_tokens,
            "cache_read_input_tokens": response.usage.cache_read_input_tokens
            # "total": int, # if not set, it is derived from input + cache_read_input_tokens + output
        },
        # Optionally, also ingest usd cost. Alternatively, you can infer it via a model definition in Langfuse.
        cost_details={
            # Here we assume the input and output cost are 1 USD each and half the price for cached tokens.
            "input": 1,
            "cache_read_input_tokens": 0.5,
            "output": 1,
            # "total": float, # if not set, it is derived from input + cache_read_input_tokens + output
        }
    )

Compatibility with OpenAI

For increased compatibility with OpenAI, you can also use the OpenAI Usage schema. prompt_tokens will be mapped to input, completion_tokens will be mapped to output, and total_tokens will be mapped to total. The keys nested in prompt_tokens_details will be flattened with an input_ prefix and completion_tokens_details will be flattened with an output_ prefix.

from langfuse import get_client

langfuse = get_client()

with langfuse.start_as_current_observation(
    as_type="generation",
    name="openai-style-generation",
    model="gpt-4o"
) as generation:
    # Simulate LLM call
    # response = openai_client.chat.completions.create(...)

    generation.update(
        usage_details={
            # usage (OpenAI-style schema)
            "prompt_tokens": 10,
            "completion_tokens": 25,
            "total_tokens": 35,
            "prompt_tokens_details": {
                "cached_tokens": 5,
                "audio_tokens": 2,
            },
            "completion_tokens_details": {
                "reasoning_tokens": 15,
            },
        }
    )

You can also ingest OpenAI-style usage via generation.update() and generation.end().
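To make the key mapping concrete, here is a minimal sketch of the renaming and flattening described in this section. It is not Langfuse's implementation: the helper `map_openai_usage` is hypothetical, and it only renames keys. Whether Langfuse additionally adjusts any values during ingestion is not covered here.

```python
def map_openai_usage(usage: dict) -> dict[str, int]:
    """Rename OpenAI-style usage keys to input/output/total (illustrative only)."""
    top_level = {
        "prompt_tokens": "input",
        "completion_tokens": "output",
        "total_tokens": "total",
    }
    nested_prefixes = {
        "prompt_tokens_details": "input_",
        "completion_tokens_details": "output_",
    }
    mapped: dict[str, int] = {}
    for key, value in usage.items():
        if key in top_level:
            mapped[top_level[key]] = value
        elif key in nested_prefixes:
            # Flatten nested detail keys with the corresponding prefix.
            for detail_key, detail_value in (value or {}).items():
                mapped[nested_prefixes[key] + detail_key] = detail_value
        else:
            # Keys outside the OpenAI schema pass through unchanged.
            mapped[key] = value
    return mapped


openai_usage = {
    "prompt_tokens": 10,
    "completion_tokens": 25,
    "total_tokens": 35,
    "prompt_tokens_details": {"cached_tokens": 5, "audio_tokens": 2},
    "completion_tokens_details": {"reasoning_tokens": 15},
}
mapped_usage = map_openai_usage(openai_usage)
print(mapped_usage)
```

With the sample payload, `cached_tokens` becomes `input_cached_tokens` and `reasoning_tokens` becomes `output_reasoning_tokens`.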

Infer usage and/or cost

If either usage or cost are not ingested, Langfuse will attempt to infer the missing values based on the model parameter of the generation at the time of ingestion. This is especially useful for some model providers or self-hosted models which do not include usage or cost in the response.

Langfuse comes with a list of predefined popular models and their tokenizers, including OpenAI, Anthropic, and Google models. Check out the full list (you need to sign in).

You can also add your own custom model definitions (see below) or request official support for new models via GitHub.

Usage

If a tokenizer is specified for the model, Langfuse automatically calculates token amounts for ingested generations.

The following tokenizers are currently supported:

| Model | Tokenizer | Used package | Comment |
|---|---|---|---|
| gpt-4o | o200k_base | tiktoken | |
| gpt* | cl100k_base | tiktoken | |
| claude* | claude | @anthropic-ai/tokenizer | According to Anthropic, their tokenizer is not accurate for Claude 3 models. If possible, send us the tokens from their API response. |

Cost

Model definitions include prices per usage type. Usage types must match exactly with the keys in the usage_details object of the generation.

Langfuse automatically calculates cost for ingested generations at the time of ingestion if (1) usage is ingested or inferred and (2) a matching model definition includes prices.
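As a rough illustration of how per-usage-type prices turn into cost, the sketch below multiplies units by price for every usage type that has an exactly matching price key. The function `infer_cost` and the prices are hypothetical and are not taken from any real model definition; Langfuse's own calculation may differ in detail.

```python
def infer_cost(usage_details: dict[str, int], prices: dict[str, float]) -> dict[str, float]:
    """Compute USD cost per usage type from unit prices (illustrative only)."""
    cost = {
        usage_type: units * prices[usage_type]
        for usage_type, units in usage_details.items()
        # Only usage types with an exactly matching price key are costed.
        if usage_type != "total" and usage_type in prices
    }
    cost["total"] = sum(cost.values())
    return cost


# Hypothetical prices in USD per token.
example_prices = {"input": 3e-6, "output": 15e-6}
example_usage = {"input": 1000, "output": 200, "cache_read_input_tokens": 400}
example_cost = infer_cost(example_usage, example_prices)
print(example_cost)
```

In this example `cache_read_input_tokens` contributes nothing to the cost because the hypothetical price list has no key with that exact name.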

Pricing Tiers

Some model providers charge different rates depending on the number of input tokens used. For example, Anthropic’s Claude Sonnet 4.5 and Google’s Gemini 2.5 Pro apply higher pricing when more than 200K input tokens are used.

Langfuse supports pricing tiers for models, enabling accurate cost calculation for these context-dependent pricing structures.

How tier matching works

Each model can have multiple pricing tiers, each with:

- Name: A descriptive name (e.g., “Standard”, “Large Context”)

- Priority: Evaluation order (0 is reserved for default tier)

- Conditions: Rules that determine when the tier applies

- Prices: Cost per usage type for this tier

When calculating cost, Langfuse evaluates tiers in priority order (excluding the default tier). The first tier whose conditions are satisfied is used. If no conditional tier matches, the default tier is applied.

Condition format:

- usageDetailPattern: A regex pattern to match usage detail keys (e.g., input matches input_tokens, input_cached_tokens, etc.)

- operator: Comparison operator (gt, gte, lt, lte, eq, neq)

- value: The threshold value to compare against

- caseSensitive: Whether the pattern matching is case-sensitive (default: false)

For example, the “Large Context” tier for Claude Sonnet 4.5 has a condition: input > 200000, meaning it applies when the sum of all usage details matching the pattern “input” exceeds 200,000 tokens.
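The tier selection described above could be sketched as follows. This is an assumption-laden illustration, not Langfuse's code: the class and function names are hypothetical, the prices are made up, lower priority numbers are assumed to be evaluated first, and multiple conditions on one tier are assumed to combine with AND.

```python
import operator
import re
from dataclasses import dataclass, field

OPERATORS = {
    "gt": operator.gt, "gte": operator.ge,
    "lt": operator.lt, "lte": operator.le,
    "eq": operator.eq, "neq": operator.ne,
}


@dataclass
class Condition:
    usage_detail_pattern: str
    op: str
    value: float
    case_sensitive: bool = False

    def matches(self, usage_details: dict[str, int]) -> bool:
        flags = 0 if self.case_sensitive else re.IGNORECASE
        pattern = re.compile(self.usage_detail_pattern, flags)
        # Sum all usage details whose key matches the pattern.
        matched_sum = sum(v for k, v in usage_details.items() if pattern.search(k))
        return OPERATORS[self.op](matched_sum, self.value)


@dataclass
class Tier:
    name: str
    priority: int  # 0 is reserved for the default tier
    prices: dict[str, float]
    conditions: list[Condition] = field(default_factory=list)


def select_tier(tiers: list[Tier], usage_details: dict[str, int]) -> Tier:
    default_tier = next(t for t in tiers if t.priority == 0)
    # Assumption: lower priority numbers are evaluated first.
    conditional = sorted((t for t in tiers if t.priority != 0), key=lambda t: t.priority)
    for tier in conditional:
        # Assumption: all conditions of a tier must hold.
        if all(c.matches(usage_details) for c in tier.conditions):
            return tier
    return default_tier


# Hypothetical tiers and prices for illustration.
tiers = [
    Tier("Standard", 0, {"input": 3e-6, "output": 15e-6}),
    Tier("Large Context", 1, {"input": 6e-6, "output": 22.5e-6},
         [Condition("input", "gt", 200_000)]),
]
small = {"input_tokens": 1_000, "output_tokens": 500}
large = {"input_tokens": 150_000, "input_cached_tokens": 60_000, "output_tokens": 500}
print(select_tier(tiers, small).name, select_tier(tiers, large).name)
```

In the second sample, `input_tokens` and `input_cached_tokens` both match the pattern input, so their sum (210,000) exceeds the threshold and the conditional tier is chosen.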

Custom model definitions

You can flexibly add your own model definitions (incl. pricing tiers) to Langfuse. This is especially useful for self-hosted or fine-tuned models which are not included in the list of Langfuse maintained models.

To add a custom model definition in the Langfuse UI, you can either click on the “+” sign next to the model name or navigate to Project Settings > Models to add a new model definition.

Then you can add the prices per token type and save the model definition. Now all new traces with this model will have the correct token usage and cost inferred.

Models are matched to generations based on:

| Generation Attribute | Model Attribute | Notes |
|---|---|---|
| model | match_pattern | Uses regular expressions, e.g. (?i)^(gpt-4-0125-preview)$ matches gpt-4-0125-preview. |

User-defined models take priority over models maintained by Langfuse.
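To check a match pattern before saving a model definition, you can try it against sample model names. The sketch below uses Python's `re` module purely for illustration; the regex dialect Langfuse uses for matching may differ in edge cases, and the helper `matches_model` is hypothetical.

```python
import re

# Pattern taken from the table above: case-insensitive, anchored at both ends.
match_pattern = r"(?i)^(gpt-4-0125-preview)$"


def matches_model(model_name: str, pattern: str = match_pattern) -> bool:
    """Return True if the generation's model name matches the pattern."""
    return re.search(pattern, model_name) is not None


for candidate in ["gpt-4-0125-preview", "GPT-4-0125-Preview", "gpt-4-0125-preview-finetuned"]:
    print(candidate, matches_model(candidate))
```

Because the pattern is anchored with ^ and $, a name with an extra suffix does not match; drop the $ anchor or add a wildcard if you want one definition to cover such variants.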

Further details

When using the openai tokenizer, you need to specify the following tokenization config. You can also copy the config from the list of predefined OpenAI models. See the OpenAI documentation for further details. tokensPerName and tokensPerMessage are required for chat models.

{
  "tokenizerModel": "gpt-3.5-turbo", // tiktoken model name
  "tokensPerName": -1, // OpenAI Chatmessage tokenization config
  "tokensPerMessage": 4 // OpenAI Chatmessage tokenization config
}

Cost inference for reasoning models

Cost inference by tokenizing the LLM input and output is not supported for reasoning models such as the OpenAI o1 model family. That is, if no token counts are ingested, Langfuse cannot infer cost for reasoning models.

Reasoning models take multiple steps to arrive at a response. Each step generates reasoning tokens that are billed as output tokens. So the output token count that determines cost is the sum of all reasoning tokens and the token count for the final completion. Since Langfuse does not have visibility into the reasoning tokens, it cannot infer the correct cost for generations that have no token usage provided.

To benefit from Langfuse cost tracking, please provide the token usage when ingesting o1 model generations. When utilizing the Langfuse OpenAI wrapper or integrations such as for Langchain, LlamaIndex or LiteLLM, token usage is collected and provided automatically for you.

For more details, see the OpenAI guide on how reasoning models work.

Troubleshooting

- If you change the model definition, the updated costs will only be applied to new generations logged to Langfuse.

- Only observations of type generation and embedding can track costs and usage.

- If you use OpenRouter, Langfuse can directly capture the OpenRouter cost information. Learn more here.

- If you use LiteLLM, Langfuse directly captures the cost information returned in each LiteLLM response.