Annotation Queues

Annotation Queues are a manual evaluation method built for domain experts to add scores and comments to traces, observations or sessions.

Why use Annotation Queues?

- Manually explore application results and add scores and comments to them

- Allow domain experts to add scores and comments to a subset of traces

- Add corrected outputs to capture what the model should have generated

- Align your LLM-as-a-Judge evaluation with human annotation

Set up step-by-step

Create a new Annotation Queue

- Click on “New Queue” to create a new queue.

- Select the Score Configs you want to use for this queue.

- Set the “Queue name” and “Description” (optional).

- Assign users to the queue (optional).

An Annotation Queue requires a score config that defines the scoring dimensions for the annotation tasks. See how to create and manage Score Configs for details.
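A score config is essentially a small schema for one scoring dimension. The sketch below builds such a payload in Python, assuming the Langfuse public API accepts score configs (for example at `POST /api/public/score-configs`) with the fields `name`, `dataType` (`NUMERIC`, `CATEGORICAL` or `BOOLEAN`), `minValue`/`maxValue`, `categories` and `description`. These field names are assumptions to check against the API reference, and the helper `build_score_config` is hypothetical.

```python
import json

# Assumed data types and field names; verify them against the Langfuse API reference.
DATA_TYPES = ("NUMERIC", "CATEGORICAL", "BOOLEAN")


def build_score_config(name, data_type, *, min_value=None, max_value=None,
                       categories=None, description=None):
    """Return a JSON-serialisable score config payload (hypothetical helper)."""
    if data_type not in DATA_TYPES:
        raise ValueError(f"unsupported data type: {data_type}")
    payload = {"name": name, "dataType": data_type}
    if data_type == "NUMERIC":
        if min_value is not None and max_value is not None and min_value > max_value:
            raise ValueError("min_value must not exceed max_value")
        if min_value is not None:
            payload["minValue"] = min_value
        if max_value is not None:
            payload["maxValue"] = max_value
    elif data_type == "CATEGORICAL":
        if not categories:
            raise ValueError("a categorical config needs at least one category")
        # Each category maps a human-readable label to the numeric value stored with the score.
        payload["categories"] = [{"label": label, "value": value} for label, value in categories]
    if description:
        payload["description"] = description
    return payload


correctness = build_score_config("correctness", "NUMERIC", min_value=0, max_value=1,
                                 description="Is the answer factually correct?")
tone = build_score_config("tone", "CATEGORICAL",
                          categories=[("rude", 0), ("neutral", 1), ("friendly", 2)])
print(json.dumps(tone))
```

Keeping the validation client-side catches malformed dimensions (an empty category list, an inverted range) before they reach the queue setup form or the API.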

Add Traces, Observations or Sessions to the Queue

Once you have created annotation queues, you can assign traces, observations or sessions to them.

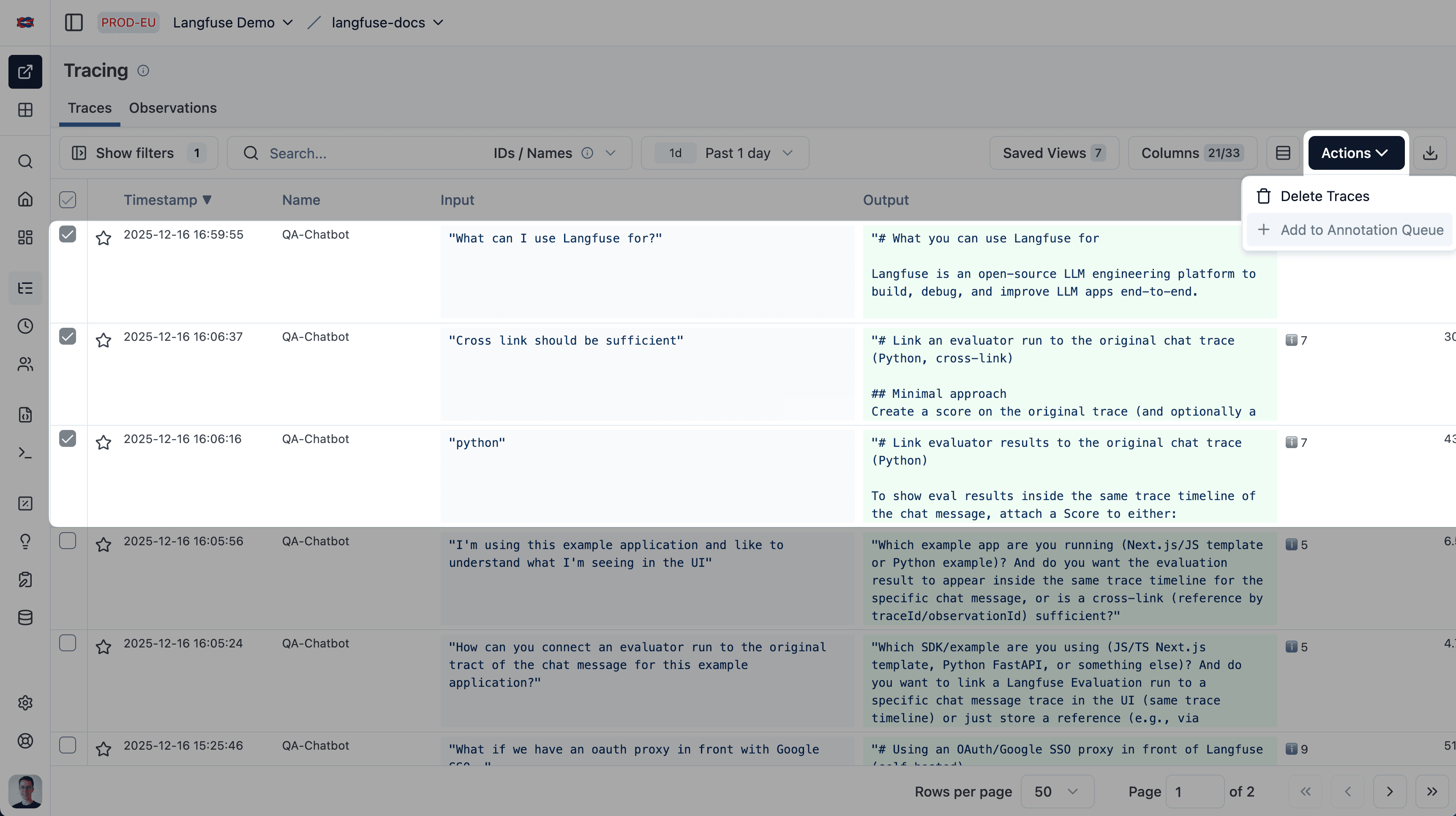

To add multiple traces, sessions or observations to a queue:

- Select Traces, Observations or Sessions via the checkboxes.

- Click on the “Actions” dropdown menu.

- Click on “Add to queue” to add the selected traces, sessions or observations to the queue.

- Select the queue you want to add the traces, sessions or observations to.
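Selecting items and choosing a queue in the UI amounts to creating one queue item per selected object. The sketch below only builds the HTTP requests (nothing is sent), assuming an endpoint `POST /api/public/annotation-queues/{queueId}/items` that takes `objectId` and `objectType` (`TRACE`, `OBSERVATION` or `SESSION`) and Basic auth with the project's public and secret keys. The endpoint shape is an assumption, and the helper `add_to_queue_requests` as well as all keys and IDs are hypothetical.

```python
import base64
import json
import urllib.request

# Assumed object types accepted by the (assumed) queue-item endpoint.
VALID_TYPES = {"TRACE", "OBSERVATION", "SESSION"}


def add_to_queue_requests(base_url, public_key, secret_key, queue_id, selected):
    """Build one POST request per selected (object_type, object_id) pair (hypothetical helper)."""
    token = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
    url = f"{base_url.rstrip('/')}/api/public/annotation-queues/{queue_id}/items"
    built = []
    for object_type, object_id in selected:
        if object_type not in VALID_TYPES:
            raise ValueError(f"cannot queue object type {object_type!r}")
        body = json.dumps({"objectId": object_id, "objectType": object_type}).encode()
        built.append(urllib.request.Request(
            url, data=body, method="POST",
            headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        ))
    return built


# The equivalent of ticking three checkboxes and choosing "queue-123" in the UI.
selected = [("TRACE", "trace-a"), ("SESSION", "session-7"), ("OBSERVATION", "obs-42")]
reqs = add_to_queue_requests("https://cloud.langfuse.com", "pk-lf-example", "sk-lf-example",
                             "queue-123", selected)
# To actually send them: for req in reqs: urllib.request.urlopen(req)
```

Building the requests separately from sending them makes it easy to inspect or log exactly what would be queued before touching a real project.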

Process Annotation Queue

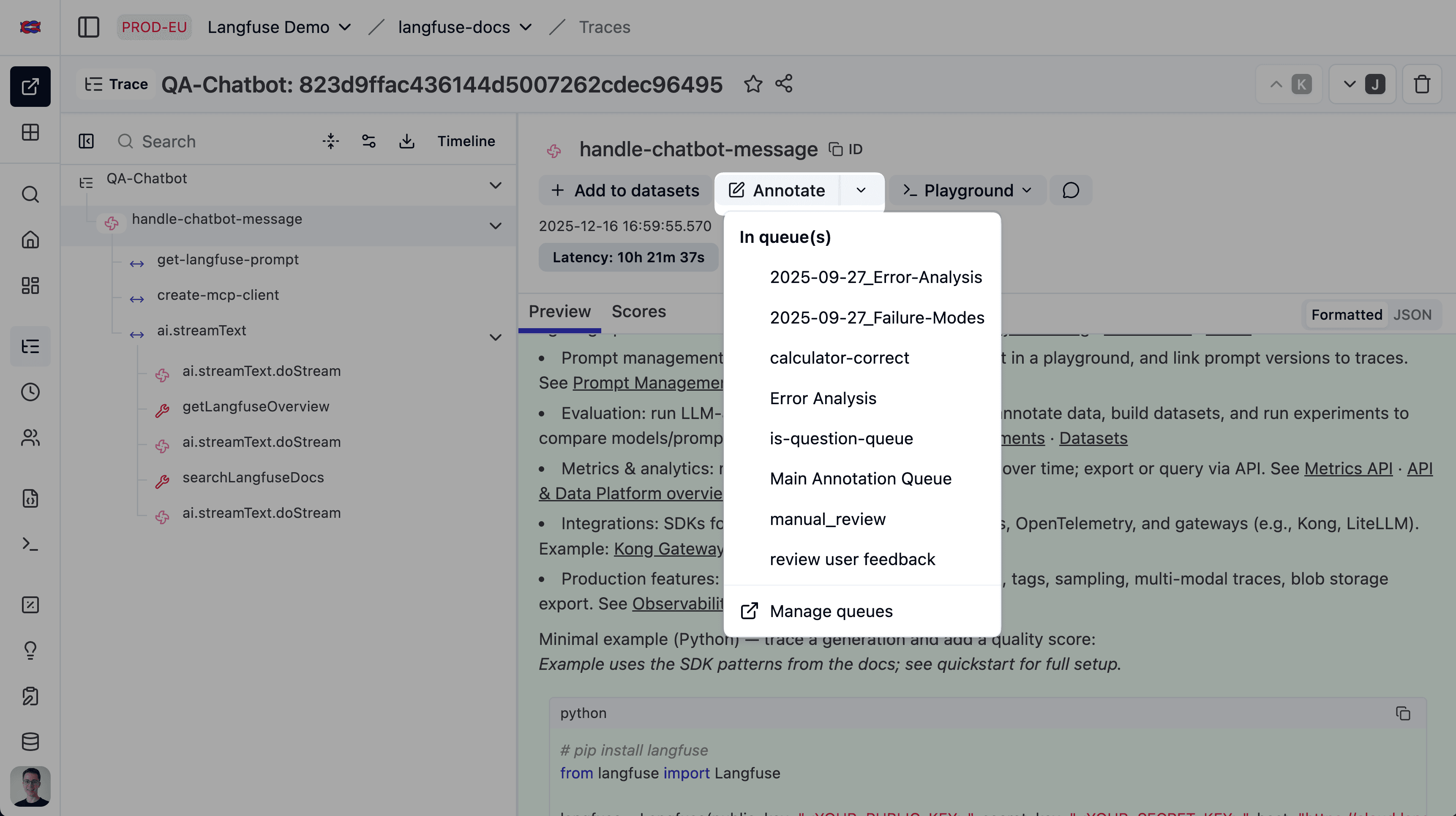

You will see an annotation task for each item in the queue.

- On the “Annotate” card, add scores on the defined dimensions.

- Click on “Complete + next” to move to the next annotation task or finish the queue.

Manage Annotation Queues via API

You can also manage annotation queues via the API. This lets you scale and automate your annotation workflows, or use Langfuse as the backbone for a custom, vibe-coded annotation tool.
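As a rough sketch of such automation, the code below lists pending items of a queue and marks each one completed after a caller-supplied annotation step, mirroring “Complete + next” in the UI. It assumes endpoints `GET /api/public/annotation-queues/{queueId}/items` (filterable by `status`, returning `{"data": [...]}`) and `PATCH /api/public/annotation-queues/{queueId}/items/{itemId}` with a `status` of `COMPLETED`, plus Basic auth with the project keys. These paths, status values and the response shape are assumptions to verify against the API reference; `AnnotationQueueClient`, `process_queue` and the transport hook are hypothetical names, not the official SDK.

```python
import base64
import json
import urllib.request
from urllib.parse import urlencode


def urllib_transport(method, url, headers, body):
    """Send one JSON request with the standard library and decode the JSON reply."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(url, data=data, headers=headers, method=method)
    with urllib.request.urlopen(req) as resp:
        raw = resp.read()
    return json.loads(raw) if raw else {}


class AnnotationQueueClient:
    """Hypothetical minimal client; not the official Langfuse SDK."""

    def __init__(self, base_url, public_key, secret_key, transport=urllib_transport):
        self.base_url = base_url.rstrip("/")
        token = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
        self.headers = {"Authorization": f"Basic {token}", "Content-Type": "application/json"}
        self.transport = transport

    def _url(self, path, **query):
        url = f"{self.base_url}/api/public/annotation-queues{path}"
        return f"{url}?{urlencode(query)}" if query else url

    def pending_items(self, queue_id, limit=50):
        # Assumed endpoint and response shape {"data": [...]}; only the first page is read.
        resp = self.transport("GET", self._url(f"/{queue_id}/items", status="PENDING", limit=limit),
                              self.headers, None)
        return resp.get("data", [])

    def complete_item(self, queue_id, item_id):
        # Assumed endpoint: PATCH /api/public/annotation-queues/{queueId}/items/{itemId}
        return self.transport("PATCH", self._url(f"/{queue_id}/items/{item_id}"),
                              self.headers, {"status": "COMPLETED"})


def process_queue(client, queue_id, annotate):
    """Annotate each pending item, then mark it completed, like "Complete + next"."""
    completed = []
    for item in client.pending_items(queue_id):
        annotate(item)  # the caller records scores/comments for item["objectId"] here
        client.complete_item(queue_id, item["id"])
        completed.append(item["id"])
    return completed


# Offline demo: a fake transport records calls instead of hitting the network.
calls = []


def fake_transport(method, url, headers, body):
    calls.append((method, url, body))
    if method == "GET":
        return {"data": [{"id": "item-1", "objectId": "trace-a", "objectType": "TRACE"},
                         {"id": "item-2", "objectId": "trace-b", "objectType": "TRACE"}]}
    return {"id": url.rsplit("/", 1)[-1], "status": "COMPLETED"}


client = AnnotationQueueClient("https://cloud.langfuse.com", "pk-lf-example", "sk-lf-example",
                               transport=fake_transport)
seen = []
done = process_queue(client, "queue-123", lambda item: seen.append(item["objectId"]))
```

The transport is injected so the flow can be exercised offline, as in the demo at the bottom; the default `urllib_transport` talks to a real instance, and `base_url` would point at your own host when self-hosting.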
