Trace the OpenAI Agents SDK with Langfuse

This notebook demonstrates how to integrate Langfuse into your OpenAI Agents workflow to monitor, debug and evaluate your AI agents.

What is the OpenAI Agents SDK? The OpenAI Agents SDK is a lightweight, open-source framework that lets developers build AI agents and orchestrate multi-agent workflows. It provides building blocks, such as tools, handoffs, and guardrails, to configure large language models with custom instructions and integrated tools. Its Python-first design supports dynamic instructions and function tools for rapid prototyping and integration with external systems.

What is Langfuse? Langfuse is an open-source observability platform for AI agents. It helps you visualize and monitor LLM calls, tool usage, cost, latency, and more.

1. Install Dependencies

Below we install the openai-agents library (the OpenAI Agents SDK), the Langfuse SDK, nest_asyncio, and the OpenInference OpenAI Agents instrumentation library.

%pip install openai-agents langfuse nest_asyncio openinference-instrumentation-openai-agents

2. Configure Environment & Langfuse Credentials

Next, set up your Langfuse API keys. You can get these keys by signing up for a free Langfuse Cloud account or by self-hosting Langfuse. These environment variables are essential for the Langfuse client to authenticate and send data to your Langfuse project.
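A missing or blank variable tends to surface only later as an authentication failure, so a small pre-flight check can help. The following is a minimal sketch, not part of the Langfuse SDK: the helper name missing_langfuse_vars is hypothetical, and the variable names are the ones used in the configuration cell that follows.

```python
import os
from typing import List, Mapping, Optional

# Variable names taken from this notebook's configuration cell.
REQUIRED_VARS = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY", "LANGFUSE_BASE_URL")


def missing_langfuse_vars(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required variable names that are unset or blank in env.

    Hypothetical helper for illustration; defaults to the process environment.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]
```

Calling missing_langfuse_vars() after the configuration cell has run should return an empty list; any names it returns are the ones still to be set.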

import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_BASE_URL"] = "https://cloud.langfuse.com" # 🇪🇺 EU region
# os.environ["LANGFUSE_BASE_URL"] = "https://us.cloud.langfuse.com" # 🇺🇸 US region

# Your OpenAI key
os.environ["OPENAI_API_KEY"] = "sk-proj-..."

3. Instrumenting the Agent

import nest_asyncio

nest_asyncio.apply()

Now, we initialize the OpenInference OpenAI Agents instrumentation. This third-party instrumentation automatically captures OpenAI Agents operations and exports OpenTelemetry (OTel) spans to Langfuse.

from openinference.instrumentation.openai_agents import OpenAIAgentsInstrumentor

OpenAIAgentsInstrumentor().instrument()

Now initialize the Langfuse client. get_client() initializes the Langfuse client using the credentials provided in the environment variables.

from langfuse import get_client

langfuse = get_client()

# Verify connection
if langfuse.auth_check():
    print("Langfuse client is authenticated and ready!")
else:
    print("Authentication failed. Please check your credentials and host.")

4. Hello World Example

Below we create an OpenAI Agent that always replies in haiku form. We run it with Runner.run and print the final output.

import asyncio
from agents import Agent, Runner

async def main():
    agent = Agent(
        name="Assistant",
        instructions="You only respond in haikus.",
    )

    result = await Runner.run(agent, "Tell me about recursion in programming.")
    print(result.final_output)

loop = asyncio.get_running_loop()
await loop.create_task(main())
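The cell above uses top-level await, which works because a notebook already has a running event loop. In a plain Python script there is no running loop, so the entry point usually goes through asyncio.run instead. The following is a minimal sketch of that distinction; main() here is a stand-in coroutine that does not call a model, and run_entry_point is a hypothetical helper name.

```python
import asyncio


async def main() -> str:
    # Stand-in for `await Runner.run(agent, ...)`; yields control once
    # instead of calling a model.
    await asyncio.sleep(0)
    return "done"


def run_entry_point() -> str:
    """Run main() from synchronous code when no event loop is running."""
    try:
        asyncio.get_running_loop()
    except RuntimeError:
        # No loop is running (plain script): asyncio.run creates one,
        # runs the coroutine to completion, and closes the loop.
        return asyncio.run(main())
    # A loop is already running (for example in a notebook), so the
    # coroutine has to be awaited from async code instead.
    raise RuntimeError("event loop already running; use `await main()` instead")


if __name__ == "__main__":
    print(run_entry_point())
```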

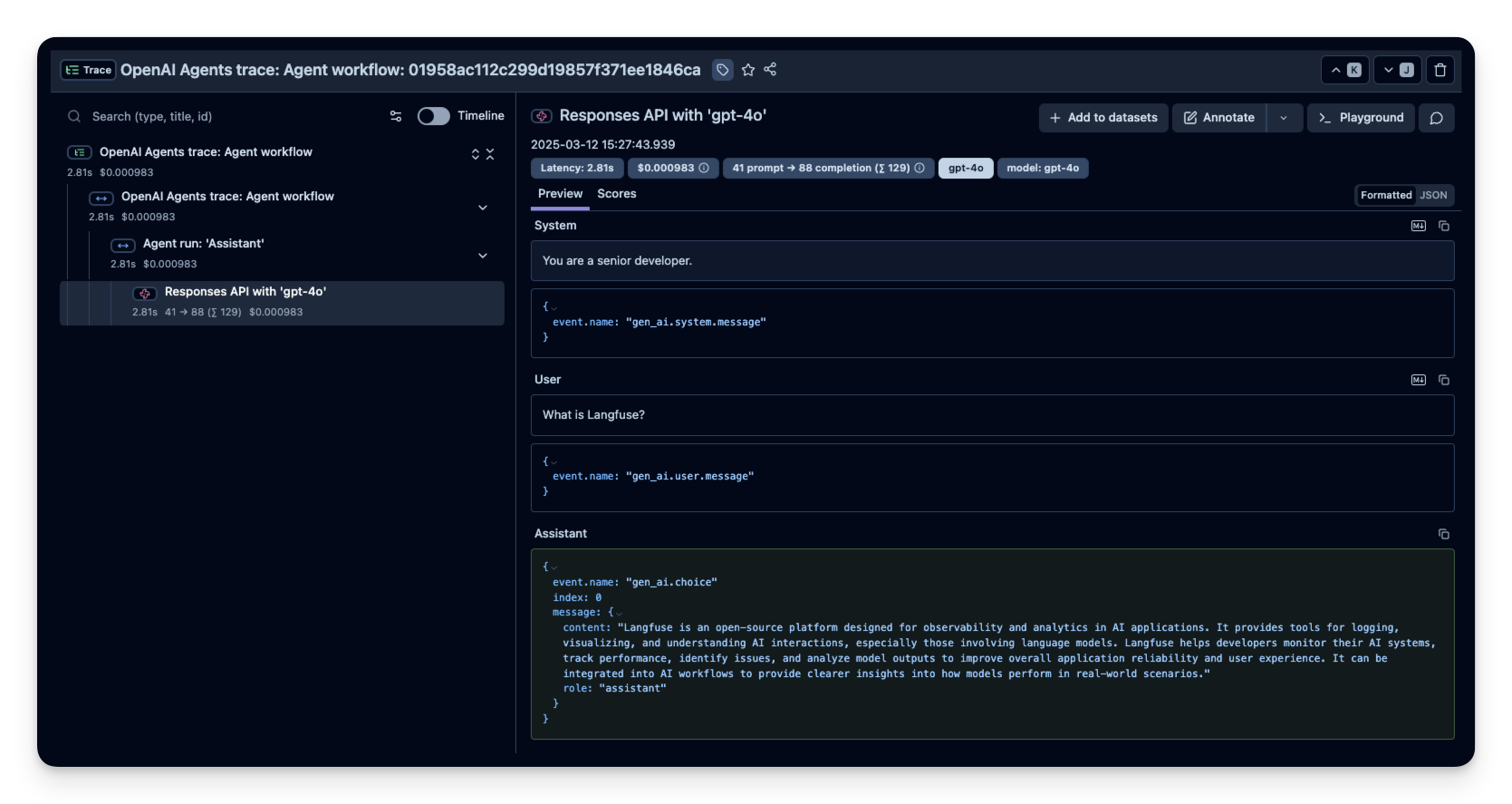

Example: Langfuse Trace

Clicking the link above (or your own project link) lets you view all sub-spans, token usage, latencies, etc., for debugging or optimization.

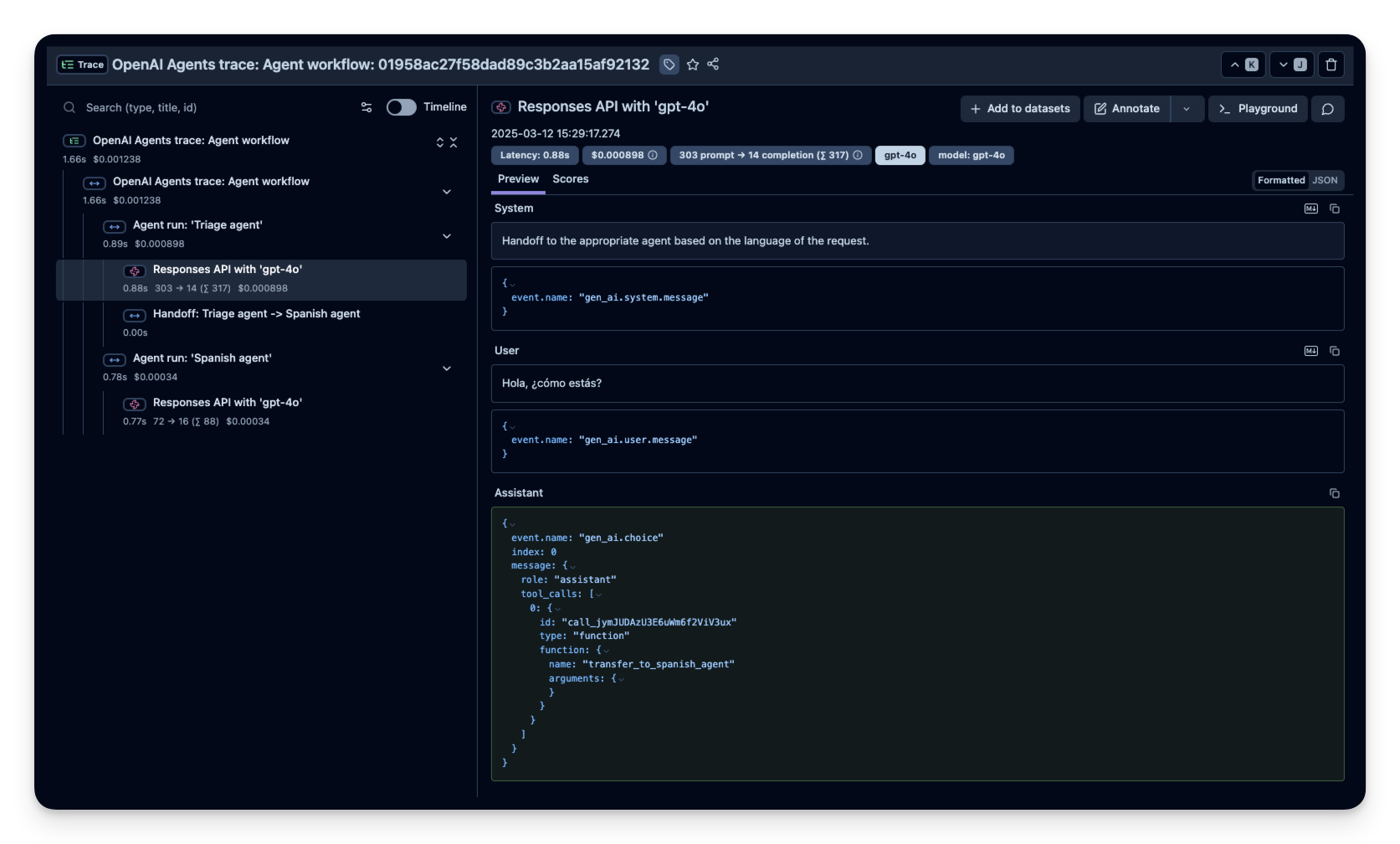

5. Multi-agent Handoff Example

Here we create:

- A Spanish agent that responds only in Spanish.

- An English agent that responds only in English.

- A Triage agent that routes the request to the correct agent based on the input language.

Any calls or handoffs are captured as part of the trace. That way, you can see which sub-agent or tool was used, as well as the final result.

from agents import Agent, Runner
import asyncio

spanish_agent = Agent(
    name="Spanish agent",
    instructions="You only speak Spanish.",
)

english_agent = Agent(
    name="English agent",
    instructions="You only speak English",
)

triage_agent = Agent(
    name="Triage agent",
    instructions="Handoff to the appropriate agent based on the language of the request.",
    handoffs=[spanish_agent, english_agent],
)

result = await Runner.run(triage_agent, input="Hola, ¿cómo estás?")
print(result.final_output)

Example: Langfuse Trace

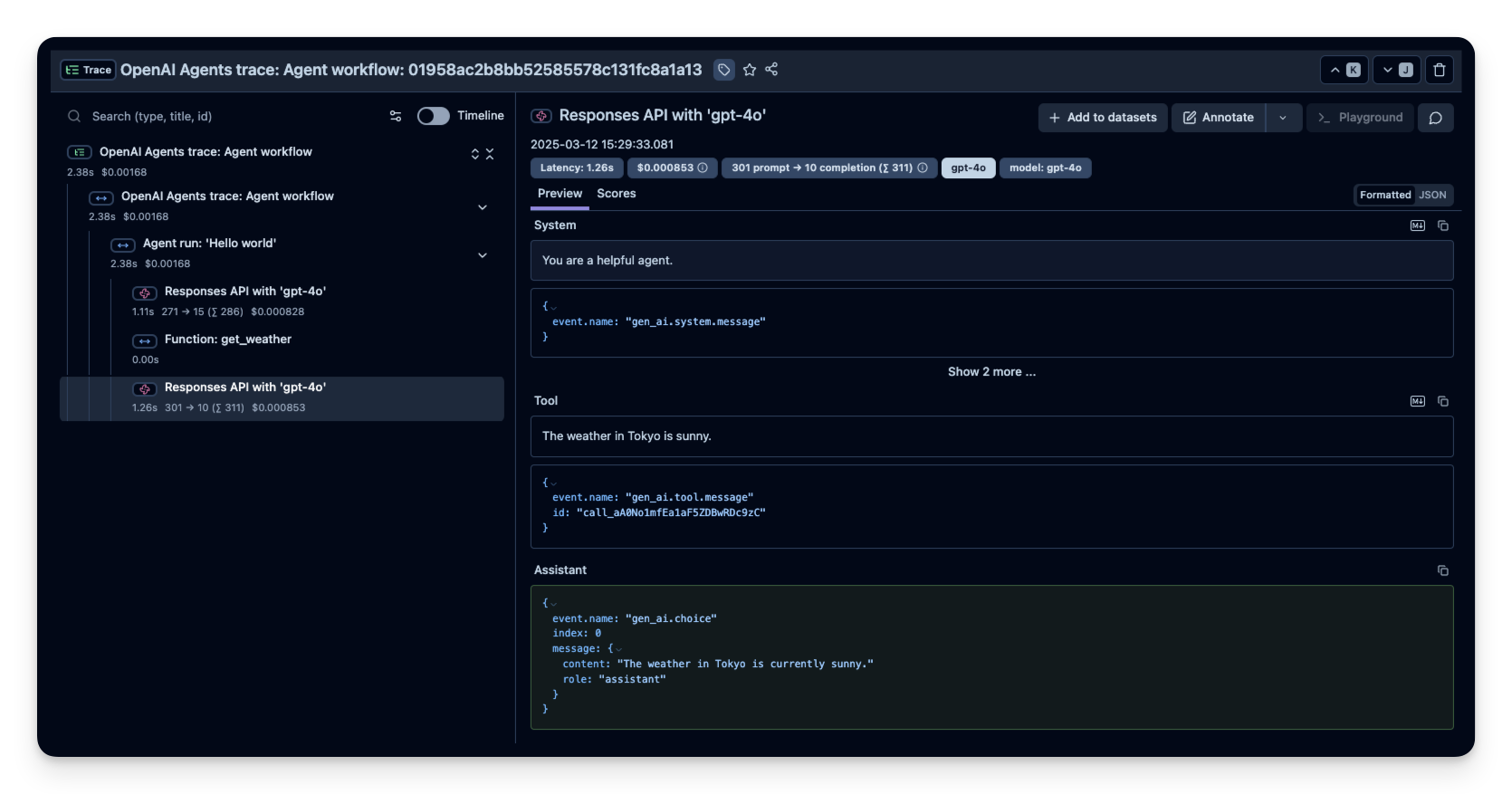

6. Functions Example

The OpenAI Agents SDK allows the agent to call Python functions. With Langfuse instrumentation, you can see which functions are called, their arguments, and the return values. Here we define a simple function get_weather(city: str) and add it as a tool.

import asyncio
from agents import Agent, Runner, function_tool

# Example function tool.
@function_tool
def get_weather(city: str) -> str:
    return f"The weather in {city} is sunny."

agent = Agent(
    name="Hello world",
    instructions="You are a helpful agent.",
    tools=[get_weather],
)

async def main():
    result = await Runner.run(agent, input="What's the weather in Tokyo?")
    print(result.final_output)

loop = asyncio.get_running_loop()
await loop.create_task(main())

Example: Langfuse Trace

When viewing the trace, you’ll see a span capturing the function call get_weather and the arguments passed.

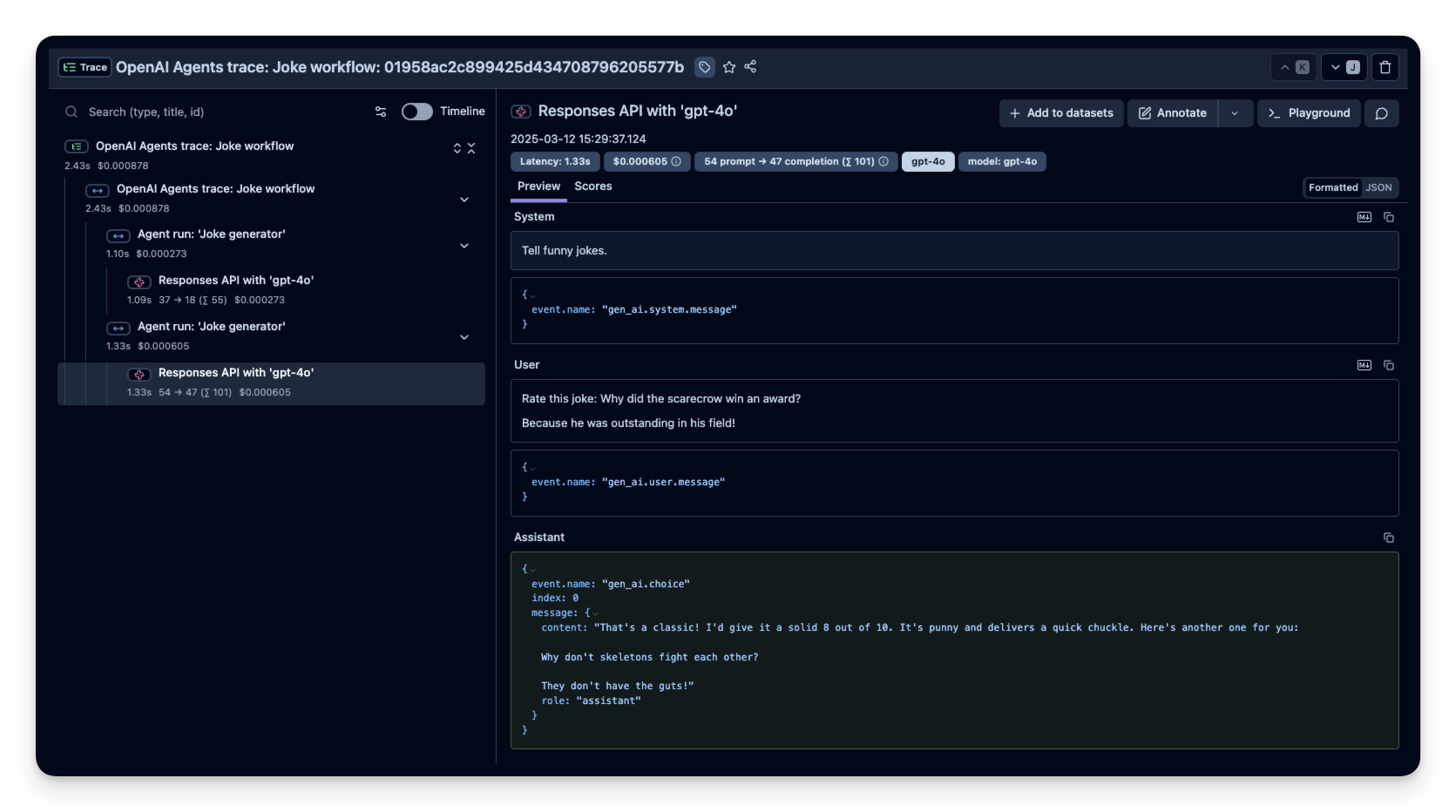
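To make concrete what such a span holds, here is a toy sketch in plain Python that records a function's name, arguments, and return value on every call. It only illustrates the kind of information captured; it is not how the SDK or the OpenInference instrumentation is implemented, and record_call and recorded_calls are hypothetical names.

```python
import functools
from typing import Any, Callable, Dict, List

# Hypothetical in-memory log standing in for exported spans.
recorded_calls: List[Dict[str, Any]] = []


def record_call(func: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap func so each call's name, arguments, and result are recorded."""

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        result = func(*args, **kwargs)
        recorded_calls.append(
            {"name": func.__name__, "args": args, "kwargs": kwargs, "output": result}
        )
        return result

    return wrapper


@record_call
def get_weather(city: str) -> str:
    return f"The weather in {city} is sunny."


get_weather("Tokyo")
print(recorded_calls[0])
```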

7. Grouping Agent Runs

In some workflows, you want to group multiple calls into a single trace—for instance, when building a small chain of prompts that all relate to the same user request. You can use a trace(...) context manager to nest multiple calls under one top-level trace.

from agents import Agent, Runner, trace
import asyncio

async def main():
    agent = Agent(name="Joke generator", instructions="Tell funny jokes.")

    with trace("Joke workflow"):
        first_result = await Runner.run(agent, "Tell me a joke")
        second_result = await Runner.run(agent, f"Rate this joke: {first_result.final_output}")
        print(f"Joke: {first_result.final_output}")
        print(f"Rating: {second_result.final_output}")

loop = asyncio.get_running_loop()
await loop.create_task(main())

Example: Langfuse Trace

Each child call is represented as a sub-span under the top-level Joke workflow span, making it easy to see the entire conversation or sequence of calls.

Link Langfuse Prompt

If you manage your prompt with Langfuse Prompt Management, you can link the used prompt to the trace by setting up an OTel Span processor.

Limitation: This method links the Langfuse Prompt to every generation span in the trace whose name starts with the defined string ("response" in the LangfuseProcessor class below).
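To illustrate the limitation, here is a minimal sketch of the matching rule on its own. The prefix "response" is the one used by the processor in this section; the span names and the helper is_linked are hypothetical and chosen only to show which names a prefix match picks up.

```python
GENERATION_PREFIX = "response"  # Prefix used by the processor in this section.


def is_linked(span_name: str, prefix: str = GENERATION_PREFIX) -> bool:
    """Return True when a prefix match would attach the prompt attributes."""
    return span_name.startswith(prefix)


# Hypothetical span names, for illustration only.
span_names = ["response", "response_retry", "Assistant", "get_weather"]
linked = [name for name in span_names if is_linked(name)]
print(linked)
```

Every name that begins with the prefix is linked, whether or not that span actually used the prompt, which is why the prefix should be as specific as your span names allow.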

from contextvars import ContextVar
from typing import Optional

from opentelemetry import context as context_api, trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import Span, SpanProcessor

prompt_info_var = ContextVar("prompt_info", default=None)

# Make sure to set the name of the generation spans in your trace
class LangfuseProcessor(SpanProcessor):
    def on_start(self, span: "Span", parent_context: Optional[context_api.Context] = None) -> None:
        if span.name.startswith("response"): # The name of the generation spans in your trace
            prompt_info = prompt_info_var.get()
            if prompt_info:
                span.set_attribute("langfuse.prompt.name", prompt_info.get("name"))
                span.set_attribute("langfuse.prompt.version", prompt_info.get("version"))

from langfuse import get_client

langfuse = get_client()
trace.get_tracer_provider().add_span_processor(LangfuseProcessor())

from openinference.instrumentation.openai_agents import OpenAIAgentsInstrumentor

OpenAIAgentsInstrumentor().instrument()

# Fetch the prompt from Langfuse Prompt Management
langfuse_prompt = langfuse.get_prompt("movie-critic")
system_prompt = langfuse_prompt.compile(criticlevel="expert", movie="Dune 2")

# Pass the prompt to the SpanProcessor
prompt_info_var.set({
    "name": langfuse_prompt.name,
    "version": langfuse_prompt.version,
})

# Run the agent ...

Resources

- Example notebook on evaluating agents with Langfuse.

Interoperability with the Python SDK

You can use this integration together with the Langfuse SDKs to add additional attributes to the trace.

The @observe() decorator provides a convenient way to automatically wrap your instrumented code and add additional attributes to the trace.

from langfuse import observe, propagate_attributes, get_client

langfuse = get_client()

@observe()
def my_llm_pipeline(input):
    # Add additional attributes (user_id, session_id, metadata, version, tags) to all spans created within this execution scope
    with propagate_attributes(
        user_id="user_123",
        session_id="session_abc",
        tags=["agent", "my-trace"],
        metadata={"email": "user@langfuse.com"},
        version="1.0.0"
    ):
        # YOUR APPLICATION CODE HERE
        result = call_llm(input)

        # Update the trace input and output
        langfuse.update_current_trace(
            input=input,
            output=result,
        )

        return result

Learn more about using the Decorator in the Langfuse SDK instrumentation docs.

Troubleshooting

No traces appearing

First, enable debug mode in the Python SDK:

export LANGFUSE_DEBUG="True"

Then run your application and check the debug logs:

- OTel spans appear in the logs: Your application is instrumented correctly but traces are not reaching Langfuse. To resolve this:

  - Call langfuse.flush() at the end of your application to ensure all traces are exported.

  - Verify that you are using the correct API keys and base URL.

- No OTel spans in the logs: Your application is not instrumented correctly. Make sure the instrumentation runs before your application code.
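One way to make sure the flush step is never skipped, even when the application raises, is to put it in a finally block. The following is a minimal sketch of that pattern; StubClient is a hypothetical stand-in that models only flush(), and run_and_flush is a hypothetical helper name.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


class StubClient:
    """Hypothetical stand-in for the Langfuse client; only flush() is modeled."""

    def __init__(self) -> None:
        self.flushed = False

    def flush(self) -> None:
        self.flushed = True


def run_and_flush(client: StubClient, work: Callable[[], T]) -> T:
    """Run work() and flush the client afterwards, even if work() raises."""
    try:
        return work()
    finally:
        client.flush()


client = StubClient()
result = run_and_flush(client, lambda: "agent output")
```

With the real client, the object returned by get_client() would take the place of StubClient(), and work would be the function that runs your agent.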

Unwanted observations in Langfuse

The Langfuse SDK is based on OpenTelemetry. Other libraries in your application may emit OTel spans that are not relevant to you. These still count toward your billable units, so you should filter them out. See Unwanted spans in Langfuse for details.

Missing attributes

Some attributes may be stored in the metadata object of the observation rather than being mapped to the Langfuse data model. If a mapping or integration does not work as expected, please raise an issue on GitHub.

Next Steps

Once you have instrumented your code, you can manage, evaluate and debug your application: