Cookbook: Observability for Groq Models (Python)

This cookbook shows two ways to interact with Groq models and trace them with Langfuse:

- Using the OpenAI SDK to interact with the Groq model

- Using the OpenInference instrumentation library to interact with Groq models

By following these examples, you’ll learn how to log and trace interactions with Groq language models, enabling you to debug and evaluate the performance of your AI-driven applications.

Note: Langfuse is also natively integrated with LangChain, LlamaIndex, LiteLLM, and other frameworks. If you use one of them, any use of Groq models is instrumented right away.

To get started, set up your environment variables for Langfuse and Groq:

```python
import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_BASE_URL"] = "https://cloud.langfuse.com"  # 🇪🇺 EU region
# os.environ["LANGFUSE_BASE_URL"] = "https://us.cloud.langfuse.com"  # 🇺🇸 US region

# Your Groq API key
os.environ["GROQ_API_KEY"] = "gsk_..."
```

Option 1: Using the OpenAI SDK to interact with the Groq model

Note: This example shows how to use the OpenAI Python SDK. If you use JS/TS, have a look at our OpenAI JS/TS SDK.

Install Required Packages

```
%pip install langfuse openai --upgrade
```

Import Necessary Modules

Instead of importing openai directly, import it from langfuse.openai. Also, import any other necessary modules.

```python
# Instead of: import openai
from langfuse.openai import OpenAI
```

Initialize the OpenAI Client for the Groq Model

Initialize the OpenAI client and point its base_url at Groq's OpenAI-compatible endpoint. The API key is read from the GROQ_API_KEY environment variable set above.
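Pointing base_url at Groq means the SDK sends its requests to the OpenAI-compatible chat route under that URL. The stdlib sketch below builds a roughly equivalent HTTP request but does not send it. It assumes the standard OpenAI-compatible `/chat/completions` path and a bearer-token `Authorization` header, and `build_chat_request` is a hypothetical helper. Requests sent this way bypass the `langfuse.openai` wrapper and are not traced, so use the client for real calls.

```python
import json
import urllib.request

# Assumption: the OpenAI-compatible route is base_url + "/chat/completions".
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def build_chat_request(api_key: str, model: str, messages: list) -> urllib.request.Request:
    """Build (but do not send) the POST request an OpenAI-compatible client would issue."""
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        url=f"{GROQ_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request(
    api_key="gsk_...",
    model="llama-3.1-8b-instant",
    messages=[{"role": "user", "content": "Hello"}],
)
print(req.full_url)      # https://api.groq.com/openai/v1/chat/completions
print(req.get_method())  # POST
# Sending it (untraced) would be: urllib.request.urlopen(req)
```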

```python
client = OpenAI(
    base_url="https://api.groq.com/openai/v1",  # Groq's OpenAI-compatible endpoint
    api_key=os.environ.get("GROQ_API_KEY"),
)
```

Chat Completion Request

Use the client to make a chat completion request to the Groq model.

```python
completion = client.chat.completions.create(
    model="llama-3.1-8b-instant",  # any chat model available to your Groq account
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Write a poem about language models"},
    ],
)

print(completion.choices[0].message.content)
```

Option 2: Using the OpenInference instrumentation

This option uses the OpenInference instrumentation library to send traces to Langfuse.

For more detailed guidance on the Groq SDK, please refer to the Groq Documentation and the Langfuse Documentation.

Install Required Packages

```
%pip install groq langfuse openinference-instrumentation-groq
```

```python
import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_BASE_URL"] = "https://cloud.langfuse.com"  # 🇪🇺 EU region
# os.environ["LANGFUSE_BASE_URL"] = "https://us.cloud.langfuse.com"  # 🇺🇸 US region

# Your Groq API key
os.environ["GROQ_API_KEY"] = "gsk_..."
```

With the environment variables set, we can now initialize the Langfuse client. get_client() initializes the Langfuse client using the credentials provided in the environment variables.
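Because get_client() takes its credentials from the environment, a forgotten key or one still set to a placeholder is easiest to catch before the client is created. The following is a minimal pre-flight check. It assumes the variable names from the setup cell and treats values ending in `...` as unfilled placeholders. That test is a heuristic of this sketch, not something Langfuse itself checks. `missing_env_vars` is a hypothetical helper.

```python
import os

# Names taken from the setup cell above; LANGFUSE_BASE_URL is left out on purpose.
REQUIRED_VARS = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY", "GROQ_API_KEY")


def missing_env_vars(names=REQUIRED_VARS, environ=os.environ) -> list:
    """Return the names that are unset, empty, or still hold a '...' placeholder."""
    missing = []
    for name in names:
        value = environ.get(name, "")
        if not value or value.endswith("..."):
            missing.append(name)
    return missing


missing = missing_env_vars()
if missing:
    print("Set these before calling get_client():", ", ".join(missing))
```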

```python
from langfuse import get_client

# Initialize the Langfuse client and verify connectivity
langfuse = get_client()
assert langfuse.auth_check(), "Langfuse auth failed - check your keys ✋"
```

OpenTelemetry Instrumentation

Use the OpenInference instrumentation library to wrap the Groq SDK calls and send OpenTelemetry spans to Langfuse.
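To make "wrap the Groq SDK calls" concrete: an instrumentor typically replaces a client method with a wrapper that records a span around each call, so existing call sites need no changes. The toy below shows that pattern. It uses a hypothetical `FakeCompletions` class and an in-memory `recorded_spans` list in place of an OpenTelemetry exporter. It illustrates the general idea only and is not how the OpenInference package is implemented.

```python
import functools
import time


class FakeCompletions:
    """Hypothetical stand-in for an SDK resource such as chat.completions."""

    def create(self, model: str, messages: list) -> dict:
        return {"model": model, "content": "echo: " + messages[-1]["content"]}


recorded_spans = []  # stands in for an OpenTelemetry exporter


def instrument(cls, method_name: str) -> None:
    """Replace cls.method_name with a wrapper that records a span-like dict per call."""
    original = getattr(cls, method_name)

    @functools.wraps(original)
    def wrapper(self, *args, **kwargs):
        start = time.perf_counter()
        result = original(self, *args, **kwargs)  # errors propagate unrecorded in this toy
        recorded_spans.append({
            "name": f"{cls.__name__}.{method_name}",
            "input": kwargs,
            "output": result,
            "latency_s": time.perf_counter() - start,
        })
        return result

    setattr(cls, method_name, wrapper)


instrument(FakeCompletions, "create")

# The call site is unchanged, but the call is now recorded.
FakeCompletions().create(model="demo", messages=[{"role": "user", "content": "hi"}])
print(recorded_spans[0]["name"])  # FakeCompletions.create
```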

```python
from openinference.instrumentation.groq import GroqInstrumentor

# Patch the Groq SDK so its chat completion calls emit OpenTelemetry spans
GroqInstrumentor().instrument()
```

Example LLM Call

from groq import Groq

# Initialize Groq client

groq_client = Groq(api_key=os.environ["GROQ_API_KEY"])chat_completion = groq_client.chat.completions.create(

messages=[

{

"role": "user",

"content": "Explain the importance of fast language models",

}

],

model="llama-3.3-70b-versatile",

)

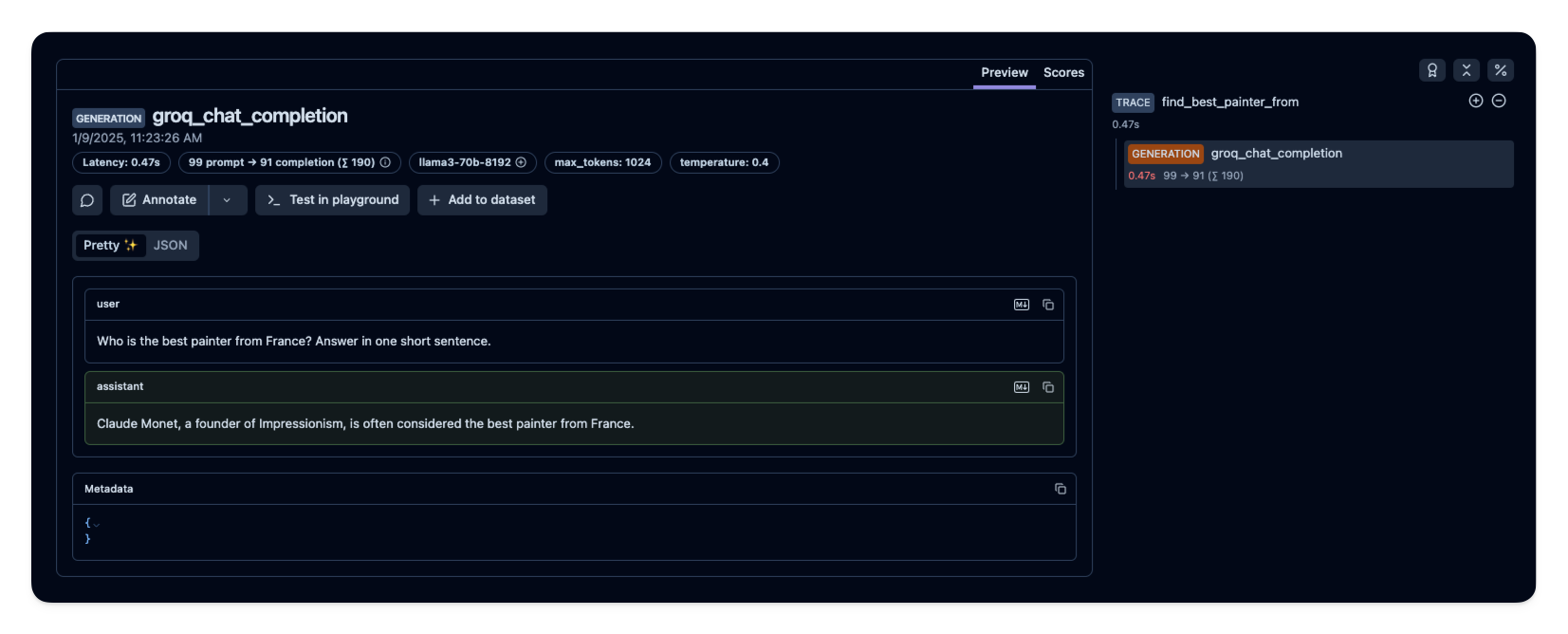

print(chat_completion.choices[0].message.content)See Traces in Langfuse

After running the example model call, open your Langfuse project to view the trace. It shows detailed information about your Groq API calls, including:

- Request parameters (model, messages, temperature, etc.)

- Response content

- Token usage statistics

- Latency metrics
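To cross-check token and latency figures locally, you can read the same information from the response object. This is a minimal sketch. It assumes the OpenAI-compatible `usage` fields (`prompt_tokens`, `completion_tokens`, `total_tokens`) on the response. `summarize_call` is a hypothetical helper, and a `SimpleNamespace` stand-in replaces a real response so the snippet runs offline. Latency measured this way is client-side wall-clock time and may not match the figure Langfuse reports exactly.

```python
import time
from types import SimpleNamespace


def summarize_call(call):
    """Time a zero-argument callable and pull token counts from its response."""
    start = time.perf_counter()
    response = call()
    latency_s = time.perf_counter() - start
    usage = response.usage  # assumed OpenAI-compatible usage block
    return {
        "latency_s": latency_s,
        "prompt_tokens": usage.prompt_tokens,
        "completion_tokens": usage.completion_tokens,
        "total_tokens": usage.total_tokens,
    }


# Offline stand-in. With a real client you would pass something like
# lambda: groq_client.chat.completions.create(model=..., messages=...)
fake_response = SimpleNamespace(
    usage=SimpleNamespace(prompt_tokens=12, completion_tokens=30, total_tokens=42)
)
stats = summarize_call(lambda: fake_response)
print(stats["total_tokens"])  # 42
```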

Interoperability with the Python SDK

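You can use this integration together with the Langfuse SDKs to add attributes such as user ID, session ID, tags, and metadata to the trace.

The @observe() decorator creates a span around the decorated function, so instrumented Groq calls made inside it are recorded as child spans of the same trace. Inside the function, propagate_attributes() attaches the attributes you pass to every span created within its scope.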

```python
from langfuse import observe, propagate_attributes, get_client

langfuse = get_client()


@observe()
def my_llm_pipeline(user_input):
    # Add attributes (user_id, session_id, metadata, version, tags) to all spans
    # created within this execution scope
    with propagate_attributes(
        user_id="user_123",
        session_id="session_abc",
        tags=["agent", "my-trace"],
        metadata={"email": "user@langfuse.com"},
        version="1.0.0",
    ):
        # YOUR APPLICATION CODE HERE
        # call_llm is a placeholder for your own logic, e.g. a Groq chat completion
        result = call_llm(user_input)

        # Update the trace input and output
        langfuse.update_current_trace(
            input=user_input,
            output=result,
        )

        return result
```

Learn more about using the decorator in the Langfuse SDK instrumentation docs.

Next Steps

Once you have instrumented your code, you can manage, evaluate and debug your application: