Observability for Portkey LLM Gateway with Langfuse

This guide shows you how to integrate Portkey’s AI gateway with Langfuse. Portkey’s API endpoints are compatible with the OpenAI SDK, so you can trace and monitor requests sent through the gateway using Langfuse’s OpenAI drop-in client.

What is Portkey? Portkey is an AI gateway that provides a unified interface to interact with 250+ AI models, offering advanced tools for control, visibility, and security in your Generative AI apps.

What is Langfuse? Langfuse is an open source LLM engineering platform that helps teams trace LLM calls, monitor performance, and debug issues in their AI applications.

Step 1: Install Dependencies

%pip install openai langfuse portkey_ai

Step 2: Set Up Environment Variables

Next, set up your Langfuse API keys. You can get these keys by signing up for a free Langfuse Cloud account or by self-hosting Langfuse. These environment variables are essential for the Langfuse client to authenticate and send data to your Langfuse project.

import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_HOST"] = "https://cloud.langfuse.com" # 🇪🇺 EU region
# os.environ["LANGFUSE_HOST"] = "https://us.cloud.langfuse.com" # 🇺🇸 US region

from langfuse import get_client

get_client().auth_check()

True
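If the authentication check fails, a common cause is an unset or empty variable. The following is a minimal sketch, using only the standard library, that lists which of the three variables set above are missing. The helper name `missing_langfuse_vars` is hypothetical and not part of the Langfuse SDK.

```python
import os

# The three variables this guide sets in Step 2.
EXPECTED_LANGFUSE_VARS = (
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_HOST",
)


def missing_langfuse_vars(env=None):
    """Return the names of expected variables that are unset or empty.

    Hypothetical helper for illustration; `env` defaults to os.environ.
    """
    env = os.environ if env is None else env
    return [name for name in EXPECTED_LANGFUSE_VARS if not env.get(name)]


missing = missing_langfuse_vars()
if missing:
    print("Missing Langfuse settings:", ", ".join(missing))
```

Running this before `auth_check()` separates configuration mistakes from genuine credential or network problems.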

Step 3: Use Langfuse OpenAI Drop-in Replacement

Next, you can use Langfuse’s OpenAI-compatible client (from langfuse.openai import OpenAI) to trace all requests sent through the Portkey gateway. For detailed setup instructions on the LLM gateway and virtual LLM keys, refer to the Portkey documentation.

from langfuse.openai import OpenAI
from portkey_ai import createHeaders, PORTKEY_GATEWAY_URL

client = OpenAI(
    api_key="xxx",  # Placeholder: not needed because a Portkey virtual key is used
    base_url=PORTKEY_GATEWAY_URL,
    default_headers=createHeaders(
        api_key="***",      # Your Portkey API key
        virtual_key="***",  # Your Portkey virtual key
    ),
)

Step 4: Run an Example
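Before running the example, you may prefer to load the Portkey keys used in the client above from environment variables instead of writing them as literals. The sketch below is one possible approach; the variable names `PORTKEY_API_KEY` and `PORTKEY_VIRTUAL_KEY` and the helper `portkey_header_kwargs` are assumptions for illustration, not names defined by Portkey or Langfuse.

```python
import os


def portkey_header_kwargs(env=None):
    """Build the keyword arguments passed to createHeaders in Step 3.

    PORTKEY_API_KEY and PORTKEY_VIRTUAL_KEY are assumed variable names;
    use whatever names your deployment defines.
    """
    env = os.environ if env is None else env
    try:
        return {
            "api_key": env["PORTKEY_API_KEY"],
            "virtual_key": env["PORTKEY_VIRTUAL_KEY"],
        }
    except KeyError as exc:
        # Fail early with a readable message instead of sending an empty key.
        raise RuntimeError(f"Missing environment variable: {exc.args[0]}") from exc


# Usage with the client from Step 3 (requires portkey_ai to be installed):
# default_headers = createHeaders(**portkey_header_kwargs())
```

Keeping the keys out of the source file also makes it easier to switch virtual keys between environments without editing code.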

response = client.chat.completions.create(

model="gpt-4o", # Or any model supported by your chosen provider

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "What are the benefits of using an AI gateway?"},

],

)

print(response.choices[0].message.content)

# Flush via global client

langfuse = get_client()

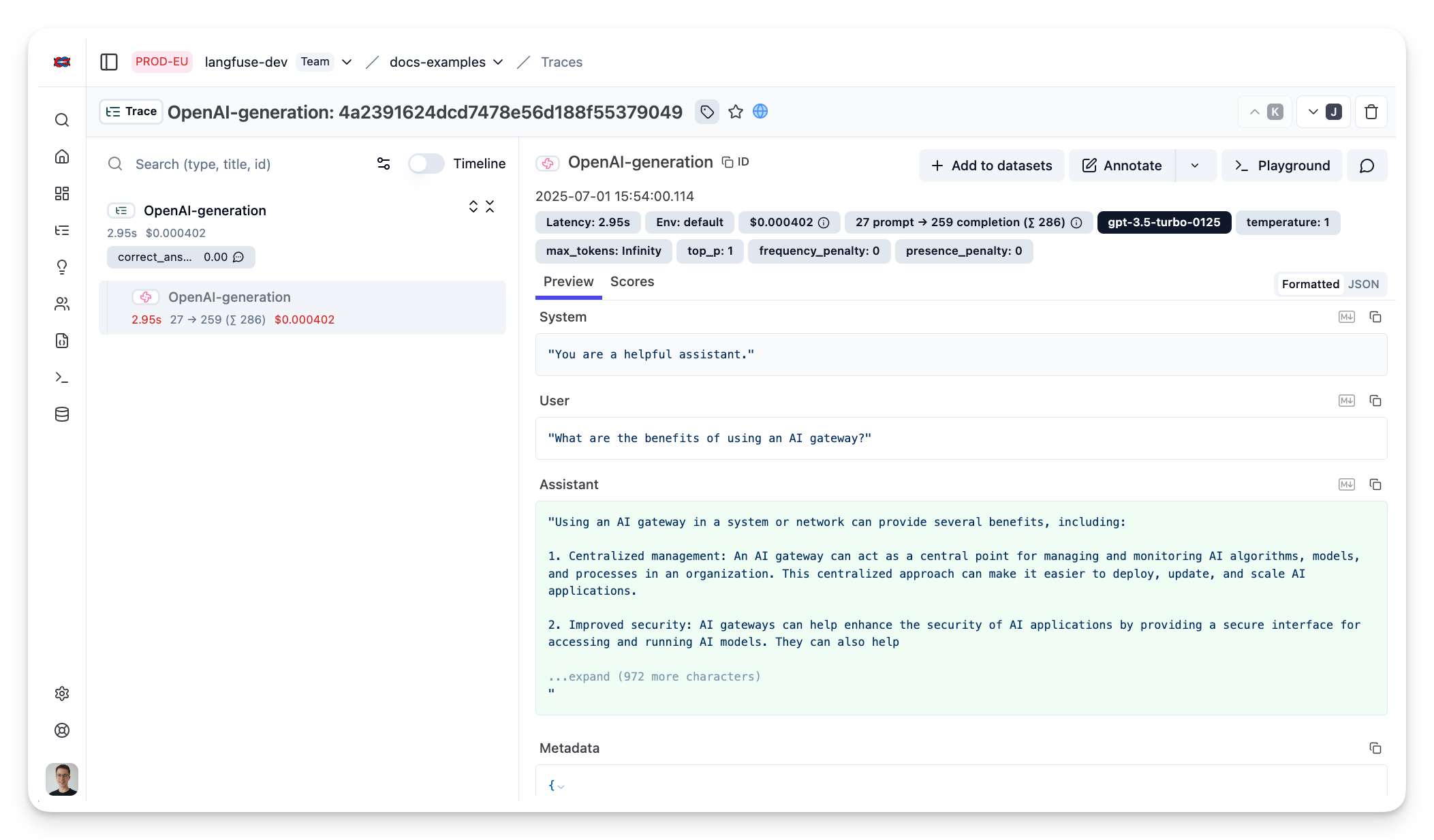

langfuse.flush()Step 5: See Traces in Langfuse

After running the example, log in to Langfuse to view the detailed traces, including:

- Request parameters

- Response content

- Token usage and latency metrics

- Model information for requests routed through the Portkey gateway

Interoperability with the Python SDK

You can use this integration together with the Langfuse Python SDK to add attributes to the trace.

The @observe() decorator wraps your instrumented function, and update_current_trace() lets you attach attributes such as the input, output, user ID, session ID, tags, and metadata.

from langfuse import observe, get_client

langfuse = get_client()

@observe()
def my_instrumented_function(input):
    # my_llm_call is a placeholder for your own LLM call
    output = my_llm_call(input)

    langfuse.update_current_trace(
        input=input,
        output=output,
        user_id="user_123",
        session_id="session_abc",
        tags=["agent", "my-trace"],
        metadata={"email": "[email protected]"},
        version="1.0.0"
    )

    return output

Learn more about using the decorator in the Python SDK docs.
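To make the wrapping idea concrete, here is a toy decorator, written with the standard library only, that records a function's arguments and return value on each call. It illustrates the general pattern and is not Langfuse's implementation; `record_call`, `CALLS`, and `shout` are hypothetical names.

```python
import functools

# Hypothetical in-memory store standing in for a tracing backend.
CALLS = []


def record_call(func):
    """Toy decorator: capture the arguments and return value of each call."""

    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        output = func(*args, **kwargs)
        CALLS.append(
            {
                "name": func.__name__,
                "args": args,
                "kwargs": kwargs,
                "output": output,
            }
        )
        return output

    return wrapper


@record_call
def shout(text):
    return text.upper()


shout("hello")
print(CALLS[-1]["name"], CALLS[-1]["output"])
```

The calling code stays unchanged, which is why a decorator is a convenient place to attach tracing.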

Next Steps

Once you have instrumented your code, you can manage, evaluate and debug your application: