Integrate Langfuse with CrewAI

This notebook provides a step-by-step guide on integrating Langfuse with CrewAI to achieve observability and debugging for your LLM applications.

What is CrewAI? CrewAI (GitHub) is a framework for orchestrating autonomous AI agents. CrewAI enables you to create AI teams where each agent has specific roles, tools, and goals, working together to accomplish complex tasks. Each member (agent) brings unique skills and expertise, collaborating seamlessly to achieve your objectives.

What is Langfuse? Langfuse is an open-source LLM engineering platform. It offers tracing and monitoring capabilities for AI applications. Langfuse helps developers debug, analyze, and optimize their AI systems by providing detailed insights and integrating with a wide array of tools and frameworks through native integrations, OpenTelemetry, and dedicated SDKs.

Getting Started

Let’s walk through a practical example of using CrewAI and integrating it with Langfuse for comprehensive tracing.

Step 1: Install Dependencies

Note: This notebook utilizes the Langfuse OTel Python SDK v3. For users of Python SDK v2, please refer to our legacy CrewAI integration guide.

%pip install langfuse crewai openinference-instrumentation-crewai openinference-instrumentation-litellm

Step 2: Configure Langfuse SDK

Next, set up your Langfuse API keys. You can get these keys by signing up for a free Langfuse Cloud account or by self-hosting Langfuse. These environment variables are essential for the Langfuse client to authenticate and send data to your Langfuse project.

import os

# Get keys for your project from the project settings page: https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_HOST"] = "https://cloud.langfuse.com" # 🇪🇺 EU region
# os.environ["LANGFUSE_HOST"] = "https://us.cloud.langfuse.com" # 🇺🇸 US region

# Your OpenAI key
os.environ["OPENAI_API_KEY"] = "sk-proj-..."

With the environment variables set, we can now initialize the Langfuse client. get_client() creates the client using the credentials provided in the environment variables.

from langfuse import get_client

langfuse = get_client()

# Verify connection
if langfuse.auth_check():
    print("Langfuse client is authenticated and ready!")
else:
    print("Authentication failed. Please check your credentials and host.")
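If the authentication check fails, a common cause is a variable that was never set or was left empty. The sketch below is a hypothetical helper (missing_langfuse_vars is not part of the Langfuse SDK) that reports which of the three variables from the configuration step are missing. It treats all three as required for simplicity, which is an assumption of this example.

```python
import os

# Variable names taken from the configuration step above.
EXPECTED_LANGFUSE_VARS = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY", "LANGFUSE_HOST")


def missing_langfuse_vars(env=None):
    """Return the expected variable names that are unset or blank (hypothetical helper)."""
    env = os.environ if env is None else env
    return [name for name in EXPECTED_LANGFUSE_VARS if not env.get(name, "").strip()]


# Example with an explicit mapping instead of the real environment.
example_env = {"LANGFUSE_PUBLIC_KEY": "pk-lf-...", "LANGFUSE_SECRET_KEY": " "}
print(missing_langfuse_vars(example_env))
```

Calling missing_langfuse_vars() without arguments inspects the real process environment, which can be useful to run before get_client().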

Step 3: Initialize CrewAI Instrumentation

Now, we initialize the OpenInference instrumentation SDK to automatically capture CrewAI operations and export OpenTelemetry (OTel) spans to Langfuse.

from openinference.instrumentation.crewai import CrewAIInstrumentor
from openinference.instrumentation.litellm import LiteLLMInstrumentor

CrewAIInstrumentor().instrument(skip_dep_check=True)
LiteLLMInstrumentor().instrument()

Step 4: Basic CrewAI Application

Let’s create a straightforward CrewAI application. In this example, we’ll create a simple crew with agents that can collaborate to complete tasks. This will serve as the foundation for demonstrating Langfuse tracing.

from crewai import Agent, Task, Crew

# Define your agents with roles and goals
coder = Agent(
    role='Software developer',
    goal='Write clear, concise code on demand',
    backstory='An expert coder with a keen eye for software trends.',
)

# Create tasks for your agents
task1 = Task(
    description="Define the HTML for making a simple website with heading- Hello World! Langfuse monitors your CrewAI agent!",
    expected_output="A clear and concise HTML code",
    agent=coder
)

# Instantiate your crew
crew = Crew(
    agents=[coder],
    tasks=[task1],
)

with langfuse.start_as_current_span(name="crewai-index-trace"):

result = crew.kickoff()

print(result)

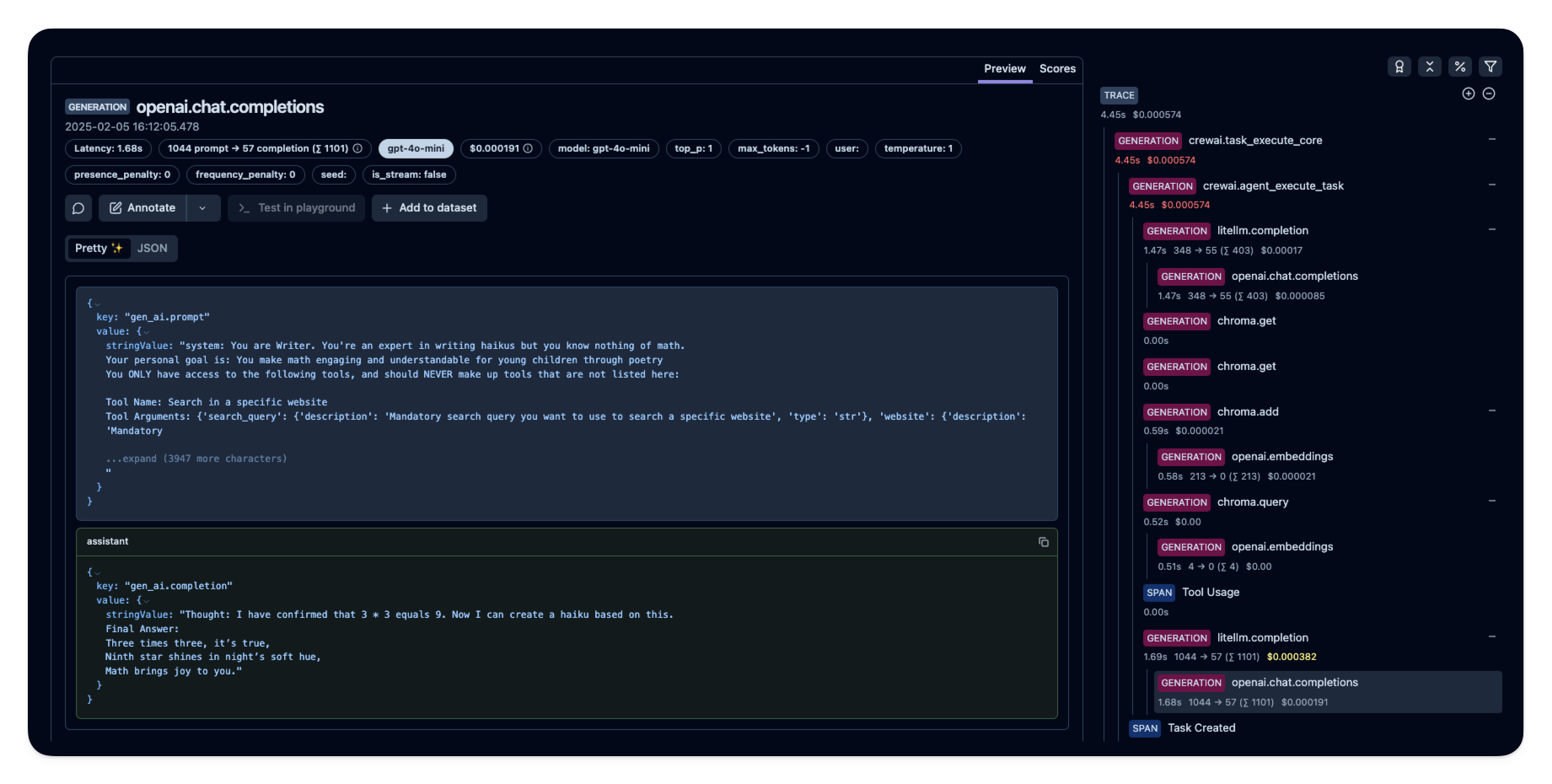

langfuse.flush()Step 5: View Traces in Langfuse

After executing the application, navigate to your Langfuse Trace Table. You will find detailed traces of the application’s execution, providing insights into the LLM calls, agent operations, inputs, outputs, and performance metrics. The trace will show the complete flow from task processing through agent collaboration to response generation.

Add Additional Attributes

Langfuse allows you to pass additional attributes to your spans. These can include user_id, tags, session_id, and custom metadata. Enriching traces with these details is important for analysis, debugging, and monitoring of your application’s behavior across different users or sessions.

The following code demonstrates how to start a custom span with langfuse.start_as_current_span and then update the trace associated with this span using span.update_trace().

→ Learn more about Updating Trace and Span Attributes.

with langfuse.start_as_current_span(
    name="crewai-index-trace",
) as span:

    # Run your application here
    task_description = "Define the HTML for making a simple website with heading- Hello World! Langfuse monitors your CrewAI agent!"
    result = crew.kickoff()
    print(result)

    # Pass additional attributes to the span
    span.update_trace(
        input=task_description,
        output=str(result),
        user_id="user_123",
        session_id="session_abc",
        tags=["dev", "crewai"],
        metadata={"email": "[email protected]"},
        version="1.0.0"
    )

# Flush events in short-lived applications
langfuse.flush()
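When several entry points update traces, it can help to assemble the attributes in one place so that tags and metadata stay consistent. The following is a minimal sketch under that assumption. build_trace_attributes and its environment parameter are hypothetical names introduced for illustration; only the keys it returns (user_id, session_id, tags, metadata) come from the example above.

```python
def build_trace_attributes(user_id, session_id, environment, extra_metadata=None):
    """Assemble keyword arguments for span.update_trace() (hypothetical helper)."""
    metadata = {"environment": environment}
    metadata.update(extra_metadata or {})
    return {
        "user_id": user_id,
        "session_id": session_id,
        # A set removes duplicates; sorting keeps the tag order stable.
        "tags": sorted({environment, "crewai"}),
        "metadata": metadata,
    }


attrs = build_trace_attributes("user_123", "session_abc", "dev")
print(attrs)
```

Inside the span context shown above, the result could then be passed along as span.update_trace(**attrs).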

Score Traces and Spans

Langfuse lets you ingest custom scores for individual spans or entire traces. This scoring workflow enables you to implement custom quality checks at runtime or facilitate human-in-the-loop evaluation processes.

In the example below, we demonstrate how to score a specific span for relevance (a numeric score) and the overall trace for feedback (a categorical score). This helps in systematically assessing and improving your application.

→ Learn more about Custom Scores in Langfuse.

with langfuse.start_as_current_span(
    name="crewai-index-trace",
) as span:

    # Run your application here
    result = crew.kickoff()
    print(result)

    # Score this specific span
    span.score(name="relevance", value=0.9, data_type="NUMERIC")

    # Score the overall trace
    span.score_trace(name="feedback", value="positive", data_type="CATEGORICAL")

# Flush events in short-lived applications
langfuse.flush()
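The example above hard-codes the relevance value. In a runtime quality check, the number would typically be computed from the output. As one possible sketch, the hypothetical keyword_relevance function below returns the fraction of expected keywords that appear in the output text. This is a deliberately simple heuristic chosen for illustration, not a scoring method provided by Langfuse.

```python
import re


def keyword_relevance(output_text, expected_keywords):
    """Fraction of expected keywords found in the output, between 0.0 and 1.0."""
    if not expected_keywords:
        return 0.0
    # Tokenize into lowercase alphanumeric words so markup and punctuation are ignored.
    words = set(re.findall(r"[a-z0-9]+", output_text.lower()))
    hits = sum(1 for keyword in expected_keywords if keyword.lower() in words)
    return hits / len(expected_keywords)


score = keyword_relevance("<h1>Hello World!</h1>", ["hello", "world", "langfuse"])
print(round(score, 2))
```

The resulting value could replace the literal in the call above, for example span.score(name="relevance", value=score, data_type="NUMERIC").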

Manage Prompts with Langfuse

Langfuse Prompt Management allows you to collaboratively create, version, and deploy prompts. You can manage prompts either through the Langfuse SDK or directly within the Langfuse UI. These managed prompts can then be fetched into your application at runtime.

The code below illustrates fetching a prompt named crewai-task from Langfuse, compiling it with an input variable (task_type), and then using this compiled prompt in the CrewAI application.

→ Get started with Langfuse Prompt Management.

Note: Linking the Langfuse Prompt and the Generation is currently not possible. This is on our roadmap and we are tracking this here.

# Fetch the prompt from langfuse
langfuse_prompt = langfuse.get_prompt(name = "crewai-task")

# Compile the prompt with the input
compiled_prompt = langfuse_prompt.compile(task_type = "HTML website")

# Create a task with the managed prompt
task_with_prompt = Task(
    description=compiled_prompt,
    expected_output="A clear and concise HTML code",
    agent=coder
)

# Update crew with new task
crew_with_prompt = Crew(
    agents=[coder],
    tasks=[task_with_prompt],
)

# Run your application
with langfuse.start_as_current_span(
    name="crewai-index-trace",
) as span:

    # Run your application here
    result = crew_with_prompt.kickoff()
    print(result)

# Flush events in short-lived applications
langfuse.flush()
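To make the compile step more concrete: Langfuse prompt templates mark variables with double curly braces, and compiling substitutes the supplied values. The sketch below imitates that substitution locally. compile_template is a hypothetical stand-in rather than the SDK implementation, the template text is invented for illustration (the real content of crewai-task is whatever you stored in Langfuse), and leaving unknown placeholders untouched is an assumption of this sketch.

```python
import re


def compile_template(template, **variables):
    """Replace {{name}} placeholders; unknown placeholders are left untouched."""
    def substitute(match):
        name = match.group(1)
        return str(variables[name]) if name in variables else match.group(0)

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


# Hypothetical template text, used only for this example.
template = "Write the code for a simple {{task_type}}."
compiled = compile_template(template, task_type="HTML website")
print(compiled)
```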

Dataset Experiments

Offline evaluation using datasets is a critical part of the LLM development lifecycle. Langfuse supports this through Dataset Experiments. The typical workflow involves:

- Benchmark Dataset: Defining a dataset with input prompts and their corresponding expected outputs.

- Application Run: Running your LLM application against each item in the dataset.

- Evaluation: Comparing the generated outputs against the expected results or using other scoring mechanisms (e.g., model-based evaluation) to assess performance.
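The three steps above can be sketched in plain Python before involving Langfuse at all. Everything in this block is hypothetical: the in-memory benchmark items, the stub_application lookup that stands in for a real LLM call, and the run_experiment helper. It only illustrates the shape of the workflow.

```python
# Step 1: a tiny in-memory benchmark dataset (hypothetical items).
benchmark = [
    {"input": {"country": "France"}, "expected_output": "Paris"},
    {"input": {"country": "Japan"}, "expected_output": "Tokyo"},
]


def stub_application(country):
    """Stand-in for the real LLM application; answers from a fixed lookup table."""
    answers = {"France": "Paris", "Japan": "Kyoto"}  # the second answer is deliberately wrong
    return answers.get(country, "")


def run_experiment(dataset, application):
    """Steps 2 and 3: run the application on each item and score by exact match."""
    results = []
    for item in dataset:
        output = application(**item["input"])
        match = float(output == item["expected_output"])
        results.append({"output": output, "exact_match": match})
    return results


results = run_experiment(benchmark, stub_application)
accuracy = sum(r["exact_match"] for r in results) / len(results)
print(accuracy)
```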

The following example demonstrates how to use a pre-existing dataset of countries and their capitals (capital_cities_11) to run an experiment with your CrewAI application.

→ Learn more about Langfuse Dataset Experiments.

from langfuse import get_client

langfuse = get_client()

# Fetch an existing dataset
dataset = langfuse.get_dataset(name="capital_cities_11")

for item in dataset.items:
    print(f"Input: {item.input['country']}, Expected Output: {item.expected_output}")

Next, we iterate through each item in the dataset, run our CrewAI application (your_application) with the item’s input, and log the results as a run associated with that dataset item in Langfuse. This allows for structured evaluation and comparison of different application versions or prompt configurations.

The item.run() context manager is used to create a new trace for each dataset item processed in the experiment. Optionally you can score the dataset runs.

from crewai import Agent, Task, Crew

dataset_name = "capital_cities_11"
current_run_name = "dev_tasks_run-crewai_03" # Identifies this specific evaluation run
current_run_metadata={"model_provider": "OpenAI", "temperature_setting": 0.7}
current_run_description="Evaluation run for CrewAI model on June 4th"

# Assume 'your_application' is your instrumented application function
def your_application(country):
    with langfuse.start_as_current_span(name="crewai-trace") as span:

        # Define your agents with roles and goals
        geography_expert = Agent(
            role='Geography Expert',
            goal='Answer questions about geography',
            backstory='A geography expert with a keen eye for details.',
        )

        # Create tasks for your agents
        task1 = Task(
            description = f"What is the capital of {country}?",
            expected_output="The name of the capital in one word.",
            agent=geography_expert
        )

        # Instantiate your crew
        crew = Crew(
            agents=[geography_expert],
            tasks=[task1],
        )

        # Run the crew
        result = crew.kickoff()
        print(result)

        # Update the trace with the input and output
        span.update_trace(
            input = task1.description,
            output = result.raw,
        )

    return str(result)

dataset = langfuse.get_dataset(name=dataset_name) # Fetch your pre-populated dataset

for item in dataset.items:
    print(f"Running evaluation for item: {item.id} (Input: {item.input})")

    # Use the item.run() context manager
    with item.run(
        run_name=current_run_name,
        run_metadata=current_run_metadata,
        run_description=current_run_description
    ) as root_span:
        # All subsequent langfuse operations within this block are part of this trace.
        generated_answer = your_application(
            country=item.input["country"],
        )

        # Optionally, score the result against the expected output
        if item.expected_output and generated_answer == item.expected_output:
            root_span.score_trace(name="exact_match", value=1.0)
        else:
            root_span.score_trace(name="exact_match", value=0.0)

print(f"\nFinished processing dataset '{dataset_name}' for run '{current_run_name}'.")
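The exact-match check in the loop above compares raw strings, so an answer such as "Paris." would score 0.0 against an expected "Paris". A slightly more forgiving comparison is sketched below. normalize_answer and exact_match_score are hypothetical helpers, and the choice to ignore case, surrounding whitespace, and surrounding punctuation is an assumption that may not suit every dataset.

```python
import string


def normalize_answer(text):
    """Lowercase the text and strip surrounding whitespace and punctuation."""
    return text.strip(string.punctuation + string.whitespace).lower()


def exact_match_score(generated, expected):
    """Return 1.0 if the normalized strings are equal, otherwise 0.0."""
    if not expected:
        return 0.0
    return 1.0 if normalize_answer(generated) == normalize_answer(expected) else 0.0


print(exact_match_score("Paris.", "paris"))
print(exact_match_score("Lyon", "Paris"))
```

In the evaluation loop, the returned value could be passed directly as the value argument of root_span.score_trace(name="exact_match", ...).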

Explore More Langfuse Features

Langfuse offers more features to enhance your LLM development and observability workflow:

- LLM-as-a-Judge Evaluators

- Custom Dashboards

- LLM Playground

- Prompt Management

- Prompt Experiments

- More Integrations