Cookbook: Monitor 🤗 Hugging Face Models with 🪢 Langfuse

This cookbook shows you how to monitor Hugging Face models using the OpenAI SDK integration with Langfuse. This allows you to collaboratively debug, monitor and evaluate your LLM applications.

With this integration, you can test and evaluate different models, monitor your application’s cost and assign scores such as user feedback or human annotations.

Note: In this example, we use the OpenAI SDK to access the Hugging Face inference APIs. You can also use other frameworks, such as LangChain, or ingest the data via our API.

Setup

Install Required Packages

%pip install langfuse openai --upgrade

Set Environment Variables

Set up your environment variables with the necessary keys. Get keys for your Langfuse project from Langfuse Cloud. Also, obtain an access token from Hugging Face.

import os

# Get keys for your project from https://cloud.langfuse.com
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..." # Private Project
os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..." # Private Project
os.environ["LANGFUSE_HOST"] = "https://cloud.langfuse.com" # 🇪🇺 EU region
# os.environ["LANGFUSE_HOST"] = "https://us.cloud.langfuse.com" # 🇺🇸 US region

os.environ["HUGGINGFACE_ACCESS_TOKEN"] = "hf_..."

Import Necessary Modules

Instead of importing openai directly, import it from langfuse.openai. Also, import any other necessary modules.
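Because the client picks up its keys from the environment variables set in the previous step, a quick check before importing can catch a missing value early. The following is a minimal sketch; `missing_env_vars` and `REQUIRED_VARS` are hypothetical names introduced here for illustration and are not part of the Langfuse or OpenAI SDKs.

```python
import os

# Variable names taken from the setup step above.
REQUIRED_VARS = (
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_HOST",
    "HUGGINGFACE_ACCESS_TOKEN",
)

def missing_env_vars(required=REQUIRED_VARS, env=None):
    """Return the names in `required` that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]

# Hypothetical usage: report anything that still needs to be set.
missing = missing_env_vars()
if missing:
    print("Missing environment variables:", ", ".join(missing))
```

If the list is empty, all four variables are present and the imports can proceed.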

# Instead of: import openai
from langfuse.openai import OpenAI
from langfuse import observe

Initialize the OpenAI Client for Hugging Face Models

Initialize the OpenAI client but point it to the Hugging Face model endpoint. You can use any model hosted on Hugging Face that supports the OpenAI API format. Replace the model URL and access token with your own.

For this example, we use the Meta-Llama-3-8B-Instruct model.
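When switching between models, it may help to build the endpoint URL from the model ID rather than editing the string by hand. The sketch below assumes the URL pattern seen in this cookbook's `base_url` (model ID followed by `/v1/`); `hf_base_url` and `HF_INFERENCE_ROOT` are hypothetical names, and dedicated endpoints or other providers may use a different scheme.

```python
# Assumption: this root and the "/v1/" suffix mirror the base_url used in this
# cookbook's example; check your own endpoint before relying on the pattern.
HF_INFERENCE_ROOT = "https://api-inference.huggingface.co/models"

def hf_base_url(model_id: str, root: str = HF_INFERENCE_ROOT) -> str:
    """Build an OpenAI-compatible base URL for a Hugging Face model ID."""
    return f"{root.rstrip('/')}/{model_id.strip('/')}/v1/"

print(hf_base_url("meta-llama/Meta-Llama-3-8B-Instruct"))
```

The resulting string can be passed as `base_url` when creating the client.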

# Initialize the OpenAI client, pointing it to the Hugging Face Inference API
client = OpenAI(
    base_url="https://api-inference.huggingface.co/models/meta-llama/Meta-Llama-3-8B-Instruct" + "/v1/", # replace with your endpoint url
    api_key=os.getenv('HUGGINGFACE_ACCESS_TOKEN'), # replace with your token
)

Examples

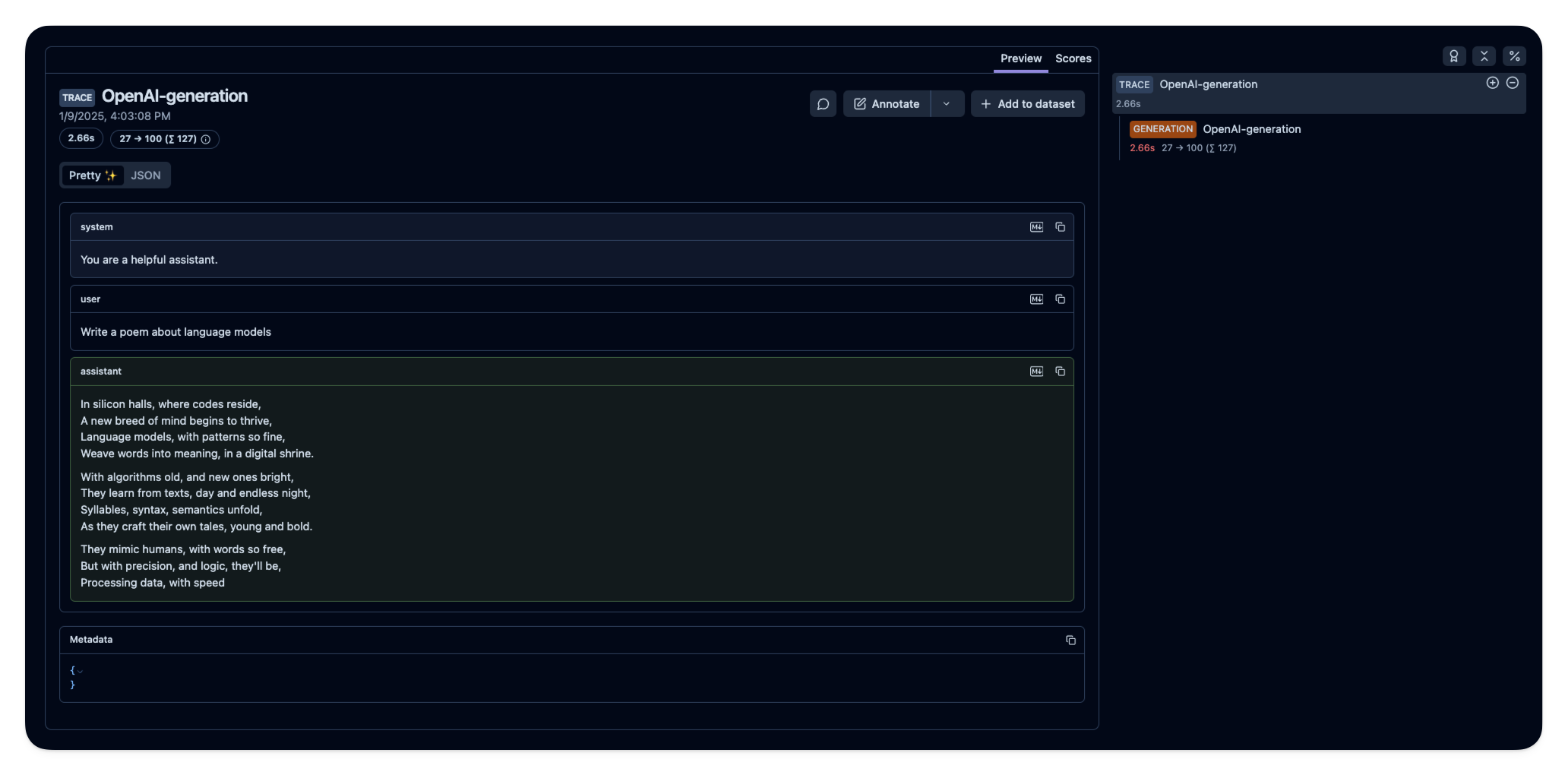

Chat Completion Request

Use the client to make a chat completion request to the Hugging Face model. The model parameter can be any identifier, since the actual model is specified in base_url. In this example, we set it to tgi, short for Text Generation Inference.

completion = client.chat.completions.create(
    model="tgi",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {
            "role": "user",
            "content": "Write a poem about language models"
        }
    ]
)

print(completion.choices[0].message.content)
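The request above passes messages as a list of role/content dictionaries. If prompts are assembled in several places, a small helper can keep that structure consistent. This is a minimal sketch; `build_messages` is a hypothetical helper and not part of the OpenAI or Langfuse SDKs.

```python
def build_messages(user_prompt, system_prompt="You are a helpful assistant."):
    """Return a messages list in the role/content chat format used above."""
    messages = []
    if system_prompt:
        # The system message is optional; skip it when no prompt is given.
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages

messages = build_messages("Write a poem about language models")
print(messages)
```

The returned list can be passed as the `messages` argument of `client.chat.completions.create`.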

Observe the Request with Langfuse

By using the OpenAI client from langfuse.openai, your requests are automatically traced in Langfuse. You can also use the @observe() decorator to group multiple generations into a single trace.

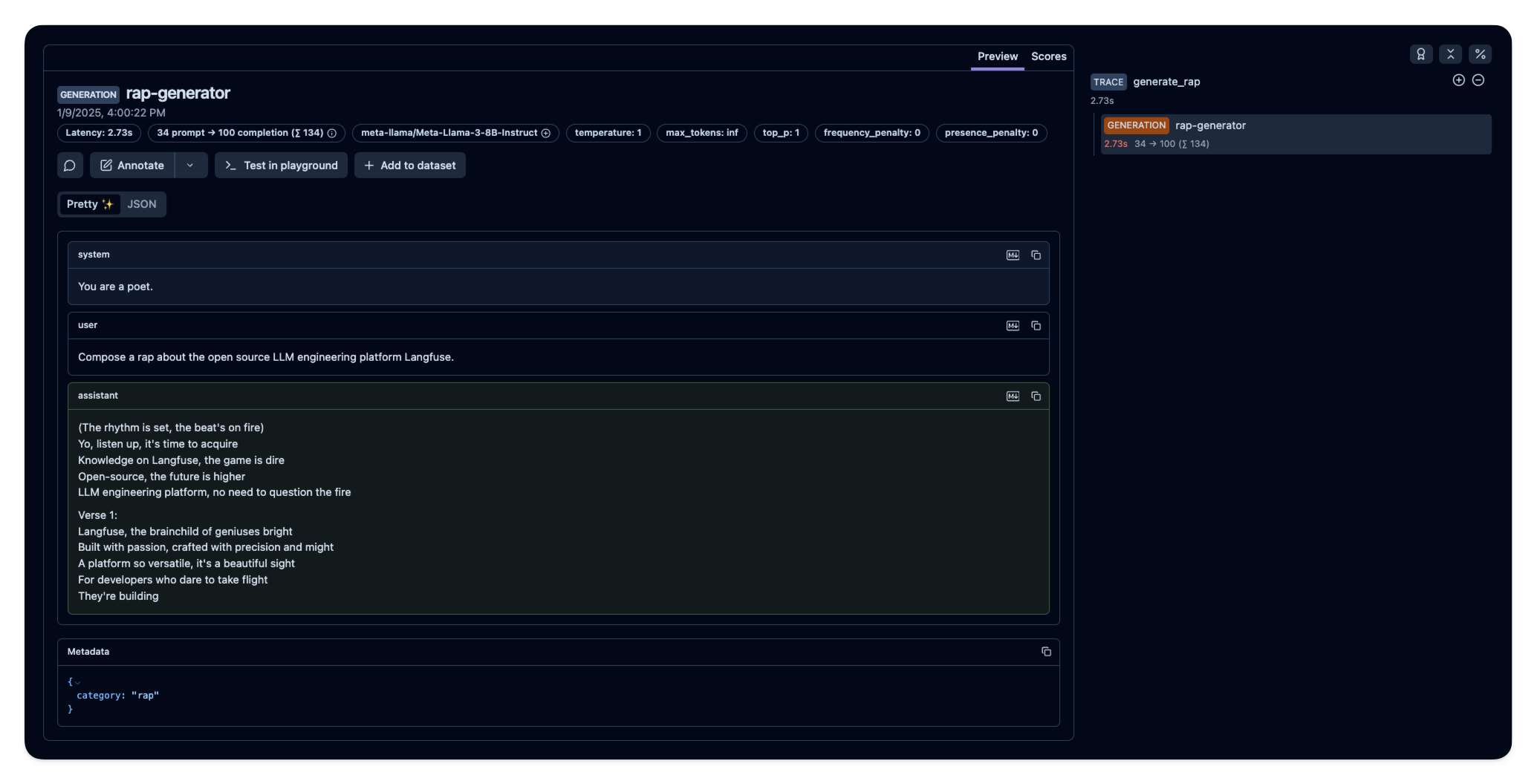

@observe() # Decorator to automatically create a trace and nest generations
def generate_rap():
    completion = client.chat.completions.create(
        name="rap-generator",
        model="tgi",
        messages=[
            {"role": "system", "content": "You are a poet."},
            {"role": "user", "content": "Compose a rap about the open source LLM engineering platform Langfuse."}
        ],
        metadata={"category": "rap"},
    )
    return completion.choices[0].message.content

rap = generate_rap()
print(rap)

Interoperability with the Python SDK

You can use this integration together with the Langfuse Python SDK to add attributes to the trace.

The @observe() decorator wraps your instrumented code automatically, and within the decorated function you can set further attributes on the current trace.

from langfuse import observe, get_client

langfuse = get_client()

@observe()
def my_instrumented_function(input):
    output = my_llm_call(input)  # my_llm_call is a placeholder for your own LLM call

    langfuse.update_current_trace(
        input=input,
        output=output,
        user_id="user_123",
        session_id="session_abc",
        tags=["agent", "my-trace"],
        metadata={"email": "[email protected]"},
        version="1.0.0"
    )

    return output

Learn more about using the decorator in the Python SDK docs.

Next Steps

Once you have instrumented your code, you can manage, evaluate and debug your application: